“We are already at the point where you can’t tell the difference between deepfakes and the real thing,” Professor Hao Li of the University of Southern California tells the BBC.

“It’s scary.”

We are at the computer scientist’s deepfake installation at the World Economic Forum in Davos, which gives a hint of what he means.

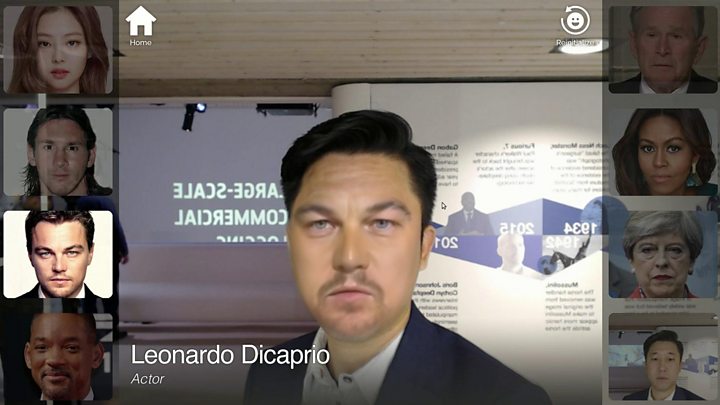

Like other deepfake tools, his software creates computer-manipulated videos of people – often politicians or celebrities – that are designed to look real.

Most often this involves “face swapping”, whereby the face of a celebrity is overlaid onto the likeness of someone else.

As I sit, a camera films my face and projects it onto a screen in front of me; my features are then digitally mapped.

Prof Hao Li says deepfakes are ‘dangerous’

One after the other the faces of actor Leonardo DiCaprio, former UK Prime Minister Theresa May and footballer Lionel Messi are superimposed onto the image of my own face in real time – their features and expressions merging seamlessly with mine.

The effects are more comical than sinister but could feasibly confuse some viewers. However, when the professor shows me another deepfake video he has been working on, which is yet to be unveiled to the public, I totally understand what he means.

It shows a famous world leader giving a speech and is impossible to distinguish from the real thing.

“Just think of the potential for misuse and disinformation we could see with this type of thing,” says Prof Li.

Deepfakes only hit the headlines in 2017 after crudely produced videos began to surface on the internet, typically involving celebrity face swapping or manipulation.

Image copyright: Hao Li

Alec Baldwin impersonating President Trump: The real Alec Baldwin is on the left – could you tell?

Some were send-ups of well-known figures, voiced by impressionists or comedians. But in the vast majority of cases, famous people’s faces were superimposed onto those of porn stars, much to the alarm of those targeted.

Since then the technology – which relies on complex machine learning algorithms – has evolved rapidly and deepfakes have become more common. Some have been used as “fake news”, such as the doctored video designed to make US House Speaker Nancy Pelosi look incoherent.

Others have been cited in cases of online fraud. Facebook has even banned them from its platform for fear they could be used to manipulate people.

Prof Li’s own software was never designed to trick people and will be sold exclusively to businesses, he says. But he thinks a dangerous genie could be about to escape its bottle as deepfake technology falls into the wrong hands – and democracy is under threat.

“The first risk is that people are already using the fact deepfakes exist to discredit genuine video evidence. Even though there’s footage of you doing or saying something you can say it was a deepfake and it’s very hard to prove otherwise.”

Image copyright: Getty Images

Deepfake tech uses thousands of still images of the person

Politicians around the world have already been accused of using this ploy, one being Joao Doria, the governor of Sao Paulo in Brazil. In 2018 the married politician claimed a video allegedly showing him at an orgy was a deepfake – and no one has been able to prove conclusively that it wasn’t.

However, the greater threat is the potential for deepfakes to be used in political disinformation campaigns, says Prof Li. “Elections are already being manipulated with fake news, so imagine what would happen if you added sophisticated deepfakes to the mix?”

So far clips such as the one of Ms Pelosi are not hard to spot as fakes. But done subtly, he says, people could start to put words into the mouths of politicians and no one would know – or at least by the time it was corrected it would be too late.

“It could be even more dangerous in developing countries where digital literacy is more limited. There you could really impact how society would react. You could even spread stuff that got people killed.”

But some, like the Dutch cyber security firm Deeptrace, which tracks the technology, feel the panic over deepfakes has been overblown.

Director Giorgio Patrini says it is relatively easy to pull off a convincing deepfake when the person being mimicked is someone you don’t know. But if they are a politician or celebrity familiar to millions it’s much harder. “People are just too familiar with their voices and facial expressions,” he tells the BBC.

“You would also need to be able to impersonate their voice and make them say things they would credibly say, which limits what you can do.”

‘Dark day’

In addition, while he accepts the most sophisticated – and dangerous – deepfake tools are freely available in open source on the internet, he says they still require expertise to use. “That’s not to say they won’t become more widely commodified and accessible, but I don’t think it’ll happen so quickly. I think it will take years.”

Nevertheless, Mr Patrini thinks we will see deepfakes in the future that are indistinguishable from the real thing – and it’s likely to be a dark day for democracy when that happens.

Offering a taste of what this might look like, Facebook in December removed a network of more than 900 fake accounts from its platforms that allegedly used deceptive practices to push right wing ideology online.

Notably, the accounts had used fake profile photos of fake faces generated using artificial intelligence.

Both Prof Li and Deeptrace have created deepfake detection tools, although they admit cyber criminals will work tirelessly to try to get around them.

However, Mr Patrini is optimistic: “Even when deepfakes are so sophisticated humans cannot tell the difference, I believe we will be able to build more sophisticated tools to spot them. It’s like anti-virus software – it will keep being updated and improved.”
