
DirectX has been with us for 25 years, providing developers with the tools to make incredible games. The latest version, DX12, was released in 2015, but in the past couple of years Microsoft have been expanding the software library to cover ray tracing and machine learning, and eventually data management.

Big next-gen titles like Cyberpunk 2077, Far Cry 6 and Watch Dogs: Legion are using some of these DirectX 12 extras. So what exactly are they? What can these enhancements do and how will they make games better for us all?

All good questions and we’ve got you covered: read on to find out!

What exactly is DirectX 12?

Let’s start with a quick explanation of what DirectX is. Take any modern game, be it one of the latest blockbusters or a tiny indie offering, and you’ll find that it’s been coded in a general-purpose language, such as C++. All of the graphics, sound, and gameplay mechanics are in the thousands or millions of lines of instructions: take this data, multiply it with that data, put the result here, and so on.

But much of this work will be common, no matter what the game is or what platform it gets played on. So to make the process of making a game easier, Microsoft created a set of low-level APIs back in 1995. Think of these as an enormous collection of books, in which all kinds of rules and structures are set out about how to issue instructions and what data types to use, as well as offering simplified commands that can be used in place of long sequences of code.

DirectX provides APIs for graphics, sound, video, music, and input devices — pretty much everything you’re going to need to play a game on a PC. The drivers for the devices that generate or use these multimedia functions will translate the instructions from the API, turning them into sequences of code that the hardware understands.

Microsoft has continually updated this massive library over the past 25 years, and the latest version, called DirectX 12, was released to the public in late July 2015 with the launch of Windows 10. But as mentioned before, it’s kept receiving additions…

DirectX Raytracing

The first addition to the DirectX 12 collective was a software structure for ray tracing: the ‘holy grail’ of graphics, and something that’s been a near constant source of wonder and criticism in equal measure.

DXR, a.k.a. DirectX Raytracing, first appeared in late 2018 and was created in conjunction with Nvidia, AMD, and Intel.

Nvidia was the first to offer support for it, through drivers for their Volta-based GPUs, even though these chips didn’t have any specific hardware to handle ray tracing. That changed with the launch of the Turing-powered GeForce RTX graphics cards in September 2018, and Nvidia’s marketing team heavily promoted RTX as the next step in the evolution of graphics.

AMD followed suit in November 2020 with the launch of their RDNA 2 architecture, as used in Radeon RX 6000 graphics cards, the Xbox Series X/S and PlayStation 5 consoles.

We’ve covered the difference between ray tracing and traditional rasterization before, so we’ll focus specifically on what DXR is doing.

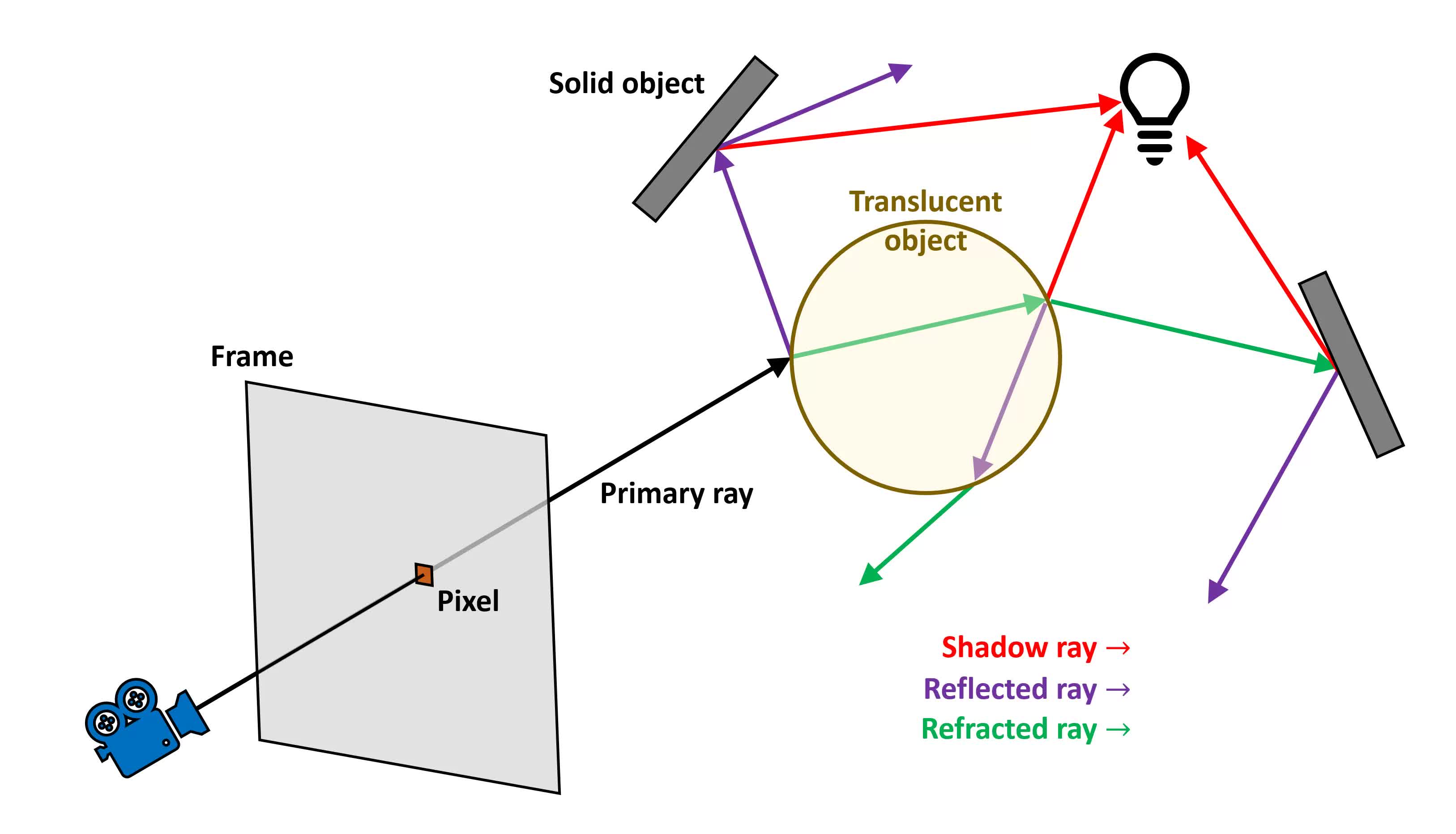

For decades, 3D games have been using a variety of techniques to simulate how the color and brightness of an object will appear, based on the impact of light sources and shadows. But it’s always been somewhat of a trick — an estimation of how light actually interacts with surfaces. Ray tracing works by following the path of a light ray, and seeing what objects it interacts with. Then, based on the information about that surface, the color and intensity of the light is calculated.

The ray might be reflected, absorbed, or refracted (i.e. it passes through, but at an angle), and so the process can continue to follow the ray as it ricochets around the scene. With today’s hardware, doing this for one ray isn’t super difficult, but for it to produce realistic results, each pixel in the frame needs at least one ray to be cast out and traced.

And if the frame has a resolution of 1920 x 1080 pixels, that would result in a little over 2 million rays. That’s no small amount of work to handle, so anything that can be done to help out becomes compulsory, rather than optional.
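To put rough numbers on that, here’s a back-of-the-envelope sketch in Python. It assumes one primary ray per pixel plus one extra ray per bounce (a simplification: in reality, rays that miss everything stop early), and a target of 60 frames per second, which is our own assumption rather than a figure from any particular game.

```python
def rays_per_frame(width, height, rays_per_pixel=1, bounces=0):
    # One primary ray per pixel (or more), plus one follow-up ray per bounce.
    # Simplification: assumes every ray hits something and keeps bouncing.
    return width * height * rays_per_pixel * (1 + bounces)


def rays_per_second(width, height, fps, rays_per_pixel=1, bounces=0):
    return rays_per_frame(width, height, rays_per_pixel, bounces) * fps


print(rays_per_frame(1920, 1080))              # 2073600 primary rays
print(rays_per_second(1920, 1080, 60))         # 124416000 rays every second
print(rays_per_frame(1920, 1080, bounces=2))   # 6220800 with two bounces
```

Even before any bounces are traced, that’s over 120 million rays a second at 1080p and 60 fps.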

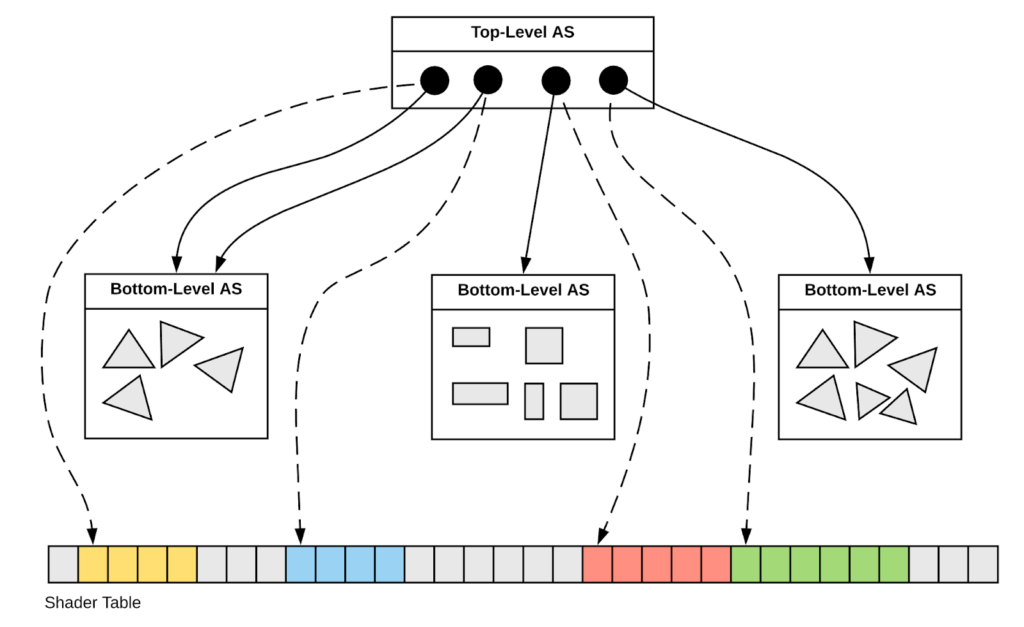

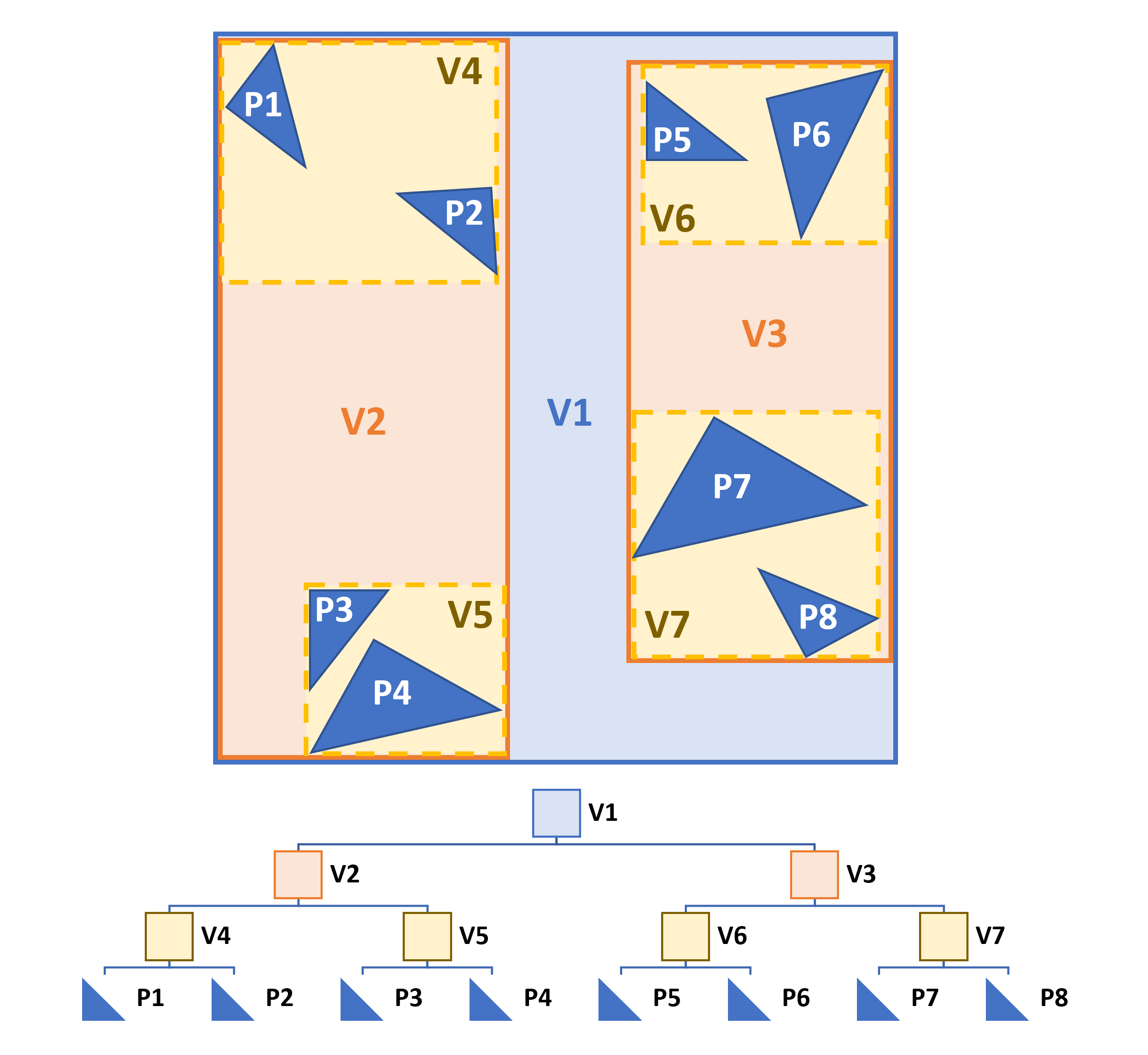

There are various techniques, called acceleration structures, that can be employed to help speed up the process of following the rays through the scene. DXR sets out the overall rules behind these structures, splitting them into two types: bottom level and top level. Bottom level acceleration structures (BLAS, for short) contain data about the primitives — the shapes that are used to build up the environment.

Setting up a BLAS takes time, but once built, it provides a quick system for checking whether or not a ray interacts with a shape. However, recreating every BLAS for each new frame is very time-consuming, and this is where the top level ones (TLAS) come into play.

Rather than containing all of the information about the geometry of the shapes, TLAS contain pointers (references to one or more specific BLAS) or instances (several references to the same BLAS). TLAS also contain data about how the primitives in the BLAS may have been transformed (e.g. moved, rotated, scaled) and other aspects, such as the primitive’s material properties (e.g. transparency).

This makes TLAS much quicker to set up than BLAS, but excessive use of them affects performance. DXR doesn’t state what methodology should be used to create the acceleration structures: it leaves that to the hardware and driver to interpret the API’s instructions for making them.
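The split between the two levels can be sketched as a few Python data structures. This is a conceptual model only: DXR’s real per-instance record, `D3D12_RAYTRACING_INSTANCE_DESC`, stores a full 3x4 transform matrix plus flags, whereas here the transform is simplified to a translation and a uniform scale, and the class names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BLAS:
    # Bottom level: the actual triangles, in the object's own local space.
    triangles: list


@dataclass
class Instance:
    # A TLAS entry: a reference to a BLAS, plus where to place it in the world.
    blas: BLAS
    translation: tuple = (0.0, 0.0, 0.0)
    scale: float = 1.0
    opaque: bool = True  # per-instance property, e.g. False for glass


@dataclass
class TLAS:
    instances: list = field(default_factory=list)

    def world_triangles(self):
        # Apply each instance's transform to its BLAS geometry on the fly;
        # the BLAS itself is never modified or copied.
        for inst in self.instances:
            tx, ty, tz = inst.translation
            s = inst.scale
            for tri in inst.blas.triangles:
                yield tuple((x * s + tx, y * s + ty, z * s + tz) for x, y, z in tri)


# One rock model, placed twice: its geometry is stored only once.
rock = BLAS(triangles=[((0, 0, 0), (1, 0, 0), (0, 1, 0))])
tlas = TLAS([Instance(rock), Instance(rock, translation=(5, 0, 0))])

# Moving the second rock in the next frame only touches the cheap TLAS entry.
tlas.instances[1].translation = (6, 0, 0)
print(list(tlas.world_triangles()))
```

Both instances point at the same `BLAS` object, which is why duplicating or moving objects is cheap at the top level.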

AMD and Nvidia use bounding volume hierarchies (BVH) and these are managed entirely by the GPU. Why? Because their processors contain specialized circuits for whipping through such structures (called BVH traversal), speeding up the process for determining if a ray gets anywhere near a given primitive.

The idea behind them is that each volume encloses a group of primitives (or smaller volumes), so if a ray misses a volume, everything inside it can be rejected in one go, without testing each primitive individually. The testing algorithm then checks through successive layers of volumes until the last level, containing the primitives themselves, is reached.
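The core of BVH traversal, rejecting a box and everything inside it as soon as the ray misses it, can be sketched in Python. It uses axis-aligned boxes and the standard ‘slab’ ray-box test; the `Node` layout is hypothetical, and real GPUs do this in dedicated hardware, typically visiting the nearer child first, which this sketch doesn’t bother with.

```python
from dataclasses import dataclass


@dataclass
class Node:
    lo: tuple               # minimum corner of the bounding box (x, y, z)
    hi: tuple               # maximum corner
    children: list = None   # inner node: child Nodes
    prims: list = None      # leaf node: ids of the primitives inside


def ray_hits_box(origin, direction, lo, hi):
    """Slab test: does the ray origin + t * direction (t >= 0) enter the box?"""
    t_enter, t_exit = 0.0, float("inf")
    for o, d, l, h in zip(origin, direction, lo, hi):
        if d == 0:
            if o < l or o > h:      # parallel to this slab and outside it
                return False
            continue
        t1, t2 = (l - o) / d, (h - o) / d
        t_enter = max(t_enter, min(t1, t2))
        t_exit = min(t_exit, max(t1, t2))
    return t_enter <= t_exit


def traverse(root, origin, direction):
    """Return ids of primitives whose leaf boxes the ray passes through."""
    candidates, stack = [], [root]
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue                # skip this box and everything inside it
        if node.prims is not None:
            candidates.extend(node.prims)
        else:
            stack.extend(node.children)
    return candidates


left = Node((0, 0, 0), (4, 10, 10), prims=[0, 1])
right = Node((6, 0, 0), (10, 10, 10), prims=[2])
root = Node((0, 0, 0), (10, 10, 10), children=[left, right])

print(traverse(root, (8, 5, -1), (0, 0, 1)))  # [2]: the left box is rejected
print(traverse(root, (5, 5, -1), (0, 0, 1)))  # []: the ray passes between them
```

Only the primitives that survive this cheap box culling go on to the expensive ray-primitive intersection test.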

The DXR pipeline requires the BLAS/TLAS to be created first; then, for each pixel in a frame, a shader is run to generate a ray. This shader then uses the acceleration structure to determine whether or not the ray directly hits a surface, and ray tracing GPUs have additional units to speed this up, too.

The outcome of the ray-primitive intersection check depends on what instructions have been coded to take place. One or more of the following shaders can be made to run:

- Intersection shader

- Any-hit shader

- Closest-hit shader

- Miss shader

The first one is only needed for custom shapes that aren’t built from triangles: it works out whether the ray really hits the primitive (the test for triangles is built in). The miss shader runs if the ray doesn’t hit anything at all, and might return a sky color or even activate another ray. Any-hit shaders can be activated each time the ray passes through something along its path, useful for handling translucent surfaces such as water or glass, or for ignoring the see-through parts of a texture. Closest-hit does what the name suggests and runs the routine for whichever primitive is nearest along the ray, and can be used for determining the color of a surface or a shadow, for example.
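How any-hit, closest-hit, and miss shaders fit together for a single ray can be sketched in Python. This is a simplified model, not DXR’s actual API: the candidate hits are handed in as a ready-made list (the job the intersection tests and BVH traversal would do), the shaders are plain functions with made-up names, and any-hit is called on every candidate, whereas a real implementation may skip candidates beyond the closest hit found so far.

```python
def trace_ray(candidates, any_hit, closest_hit, miss):
    """candidates: list of (distance, primitive) intersections along one ray."""
    # Any-hit runs per candidate and may reject it, e.g. a see-through texel.
    accepted = [hit for hit in candidates if any_hit(hit)]
    if not accepted:
        return miss()                      # nothing hit: e.g. return sky color
    nearest = min(accepted, key=lambda hit: hit[0])
    return closest_hit(nearest)            # shade only the nearest surface


def leaf_any_hit(hit):
    # Pretend the leaf's texture is transparent where this ray passes through.
    return hit[1] != "leaf"


def shade(hit):
    return "shade " + hit[1]


def sky():
    return "sky blue"


hits = [(7.0, "window"), (3.0, "leaf"), (12.0, "wall")]
print(trace_ray(hits, leaf_any_hit, shade, sky))   # shade window
print(trace_ray([], leaf_any_hit, shade, sky))     # sky blue
```

The leaf is technically the closest thing the ray touches, but because the any-hit shader rejects it, the closest-hit shader ends up shading the window behind it.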

This cycle of generating a ray, checking for an intersection, and figuring out the color can be looped as many times as required to achieve ever more realistic results. Scaled over millions of rays, however, all of this would grind a game’s performance into the dirt.

So most implementations of DXR involve a hybrid approach: use the normal Direct3D graphics and compute pipelines to create the scene, apply textures, general lighting and post-processing effects, and switch to the DXR pipeline for very specific things, such as reflections or shadows.

Another common technique used to keep the performance hit as low as possible is to limit the number of rays cast into the scene, typically by using one ray per block of pixels rather than one ray per pixel, or by only using a primary ray and not tracing the bounces.
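The savings from those two shortcuts are easy to estimate. This Python sketch counts the rays for a 1920 x 1080 frame; as before, the one-extra-ray-per-bounce model is a simplification of ours, and partial blocks at the screen edges are rounded up.

```python
import math


def ray_budget(width, height, block=1, bounces=0):
    # One ray per block x block tile of pixels, each followed for `bounces`
    # extra segments (assumes every ray keeps hitting something).
    tiles = math.ceil(width / block) * math.ceil(height / block)
    return tiles * (1 + bounces)


full = ray_budget(1920, 1080, block=1, bounces=2)     # per pixel, two bounces
cheap = ray_budget(1920, 1080, block=2, bounces=0)    # per 2x2 block, primary only
print(full, cheap, full // cheap)                     # 6220800 518400 12
```

Combining both shortcuts cuts the workload by a factor of 12 in this example, which is exactly why the output ends up so noisy.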

The downside to these approaches is that the end result contains lots of artifacts or noise.

There are lots of possible ways of countering this, collectively known as denoising. With graphics cards, this is typically done with shaders to sample and then filter the completed frame (we’ll say more on this in a moment).
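The simplest possible denoiser is a spatial filter that replaces each pixel with the average of its neighbors. The Python sketch below does exactly that with a 3 x 3 box filter. It’s a deliberately naive stand-in for illustration: the filters games actually use also take depth, surface normals, and previous frames into account, so that edges don’t get smeared.

```python
def box_denoise(img):
    """Average each pixel with its 3x3 neighborhood (clipped at the borders)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += img[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


# A noisy patch of brightness values, as sparse ray sampling might produce.
noisy = [[0.0, 1.0, 0.0],
         [1.0, 0.0, 1.0],
         [0.0, 1.0, 0.0]]
print(box_denoise(noisy)[1][1])   # the center pixel becomes 4/9, about 0.44
```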

Now that the latest consoles and graphics cards sport ray tracing features, Microsoft is clearly going to continue developing and expanding DXR, to give more control and flexibility over how rays are generated from shaders and how they’re managed. The API’s first update appeared in May 2020, adding a group of features under the title of DXR Tier 1.1. Unlike most DirectX updates, these additions don’t require new hardware, just updated drivers.

DirectML

The next enhancement, DirectML, became available in the Windows 10 May 2019 update. This one is all about machine learning, a loose term used to describe a whole range of programming paradigms and algorithms.

In the case of Microsoft’s API, it provides a uniform structure with which to accelerate the processing of inference models on a GPU. Like DXR, it doesn’t specify exactly how the hardware should do it — you just write your code and let the drivers handle it for you.

Machine learning used to be the preserve of powerful multi-CPU computers, but as GPUs have become more capable, they’ve taken over this role. When Nvidia released Volta in mid-2017, they introduced a new hardware feature in their chips: Tensor Cores.

These units exclusively handle so-called tensor operations: calculations on large, multidimensional arrays of numbers, chiefly multiplying whole matrices together and adding the result to another matrix in a single step. Such operations are at the heart of neural networks, one of the key elements in inference models.
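The core tensor operation, a fused matrix multiply and add (D = A x B + C), is simple to write out, even if it’s enormously repetitive. Each of Volta’s Tensor Cores performs this on small 4 x 4 matrices every clock cycle. The Python sketch below does the same calculation the slow way, one multiplication at a time, and then uses it for a single layer of a neural network; the function names are our own.

```python
def matmul_add(A, B, C):
    """Return D = A x B + C for matrices stored as lists of rows."""
    rows, inner, cols = len(A), len(B), len(B[0])
    return [[C[i][j] + sum(A[i][k] * B[k][j] for k in range(inner))
             for j in range(cols)]
            for i in range(rows)]


def dense_layer(weights, inputs, bias):
    # One neural network layer: multiply-add, then ReLU (negatives become 0).
    column = [[x] for x in inputs]        # inputs as a one-column matrix
    bias_col = [[b] for b in bias]
    out = matmul_add(weights, column, bias_col)
    return [max(0.0, row[0]) for row in out]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 0], [0, 1]]
print(matmul_add(A, B, C))                                # [[20, 22], [43, 51]]
print(dense_layer([[1, -1], [2, 0.5]], [3, 4], [0, -1]))  # [0.0, 7.0]
```

A real inference model chains many such layers with thousands of weights each, which is why dedicated hardware for this one operation pays off.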

A GPU doesn’t need to have tensor cores in order to support DirectML — in fact, you don’t even need a GPU. This is all thanks to a Direct3D 12 feature called meta commands. These are code objects that allow hardware vendors to use specific features in their devices to perform the calculations.

So even though DirectML is said to be hardware-agnostic (i.e. it’s oblivious to the nature of the hardware handling the instructions), meta commands provide a means for a GPU to use its own unique way of doing things.

In the case of Nvidia and their Volta/Turing/Ampere GPUs, if the meta commands are set up in the right way, then the Tensor Cores will kick in and process the math; if not, then the GPU falls back to running the shaders on the ‘standard’ cores. And if there’s no suitable GPU at all, then the CPU handles the operations instead.
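The fallback order just described can be modelled as a short Python sketch. Everything here is hypothetical: the function, the operator names, and the idea of a simple set of ‘supported’ operators are our own stand-ins for what a driver reports, not DirectML’s real interface.

```python
def choose_path(op, gpu_available, metacommand_ops):
    """Pick how one machine learning operator would run, per the fallback order."""
    if not gpu_available:
        return "CPU"
    if op in metacommand_ops:
        return "meta command (vendor hardware, e.g. Tensor Cores)"
    return "generic compute shaders"


# Hypothetical driver that only accelerates convolutions and matrix multiplies.
driver_ops = {"convolution", "gemm"}
for op in ["convolution", "gemm", "activation"]:
    print(op, "->", choose_path(op, True, driver_ops))
print("gemm ->", choose_path("gemm", False, driver_ops))
```

The developer’s code stays the same in every case; only the path the work takes underneath changes.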

This is all very nice, but what exactly can developers do with DirectML?

There are three particular applications of note:

- Anti-aliasing

- Upscaling and so-called ‘super resolution’

- Denoising

None of these require DirectML, but the combination of the API’s features and its use of meta commands means that Intel, AMD, and Nvidia can all provide some kind of hardware acceleration for these techniques. In Nvidia’s case, they’ve already been using their Tensor Cores for a proprietary algorithm called Deep Learning Super Sampling (DLSS).

DLSS allows a game to run at a lower resolution, and reap all the performance benefits that brings, but present the final rendered frame at a much higher resolution. This might sound like a simple upscaling process, as used by Blu-ray players when they convert a DVD movie to an HD output.

But neural networks can provide better-quality results because they can adjust the color of pixels to correctly account for motion, based on where objects were and where they’re moving to. The likes of DLSS can produce amazing results, and DirectML can be used to produce similar algorithms. This technique has picked up the name Super Resolution, and it will be especially useful in games that make heavy use of DXR.

By reducing the frame resolution, fewer rays are required to shade the scene and thus the performance hit from using ray tracing becomes more acceptable. Then scaling the image back up for the monitor means you don’t have to look at a pixelated mess.
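The saving from rendering at a lower resolution is easy to quantify, because the number of pixels (and so the number of rays, at one per pixel) scales with width times height. A quick Python sketch, using common resolutions as examples:

```python
def pixel_fraction(render, output):
    """Fraction of the output's pixels that actually get rendered (and traced)."""
    (rw, rh), (ow, oh) = render, output
    return (rw * rh) / (ow * oh)


uhd = (3840, 2160)
for name, res in [("1080p", (1920, 1080)), ("1440p", (2560, 1440))]:
    share = pixel_fraction(res, uhd)
    print(f"{name} -> 4K: {share:.0%} of the pixels, {1 / share:.2f}x fewer")
```

Rendering at 1080p and upscaling to 4K means only a quarter of the pixels, and therefore a quarter of the primary rays, need to be computed.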

DirectML can also be used to apply high-quality denoising, so even fewer rays can be cast about without affecting the image quality too much. In the above image, you can see a ray traced image on the left, where just one primary ray per pixel has been used, and then the same process again, but with Intel’s neural-network-based Open Image Denoise applied, on the right.

Since its release, the machine learning API has received two updates, bringing a wealth of additional operations and extra data type support. Unlike DXR, no games at the moment appear to be using DirectML for anything, but it won’t be long before they do.

DirectStorage

The third enhancement we’re going to cover hasn’t been released yet, and hasn’t even reached the developer preview stage. That means details are scarce beyond what Microsoft has told us via their developer blog. DirectStorage is another DirectX API, but one that’s not dedicated to graphics.

This software library was originally created for the Xbox Series X / S consoles and is being brought across to Windows 10 computers to offer similar advantages. DirectStorage will give games and other programs more direct access to resources on a primary storage drive than they currently have.

When you play a PC game today, all the textures and models needed to create the graphics are first copied from the local storage and written into the system memory. Then, this is all copied again and written into the graphics card’s memory.

On its own, this double copy isn’t much of a problem, as the transfer from local storage to RAM is usually the slowest part of the whole sequence anyway. However, a modern AAA title might have an enormous amount of resources on the drive, so rather than trying to copy everything it needs, or might need, the game just requests small chunks of it.

This is especially true in open-world games, where new textures and models are requested on a regular basis. Every time this happens, the CPU has to process what are called I/O (input/output) requests, so if lots of requests are constantly taking place, the CPU is going to be kept rather busy dealing with them all. This, in turn, limits the CPU time available for everything else, such as the game’s AI, path-finding, netcode, and input monitoring, and it also slows down the delivery of the game’s resources themselves.

Many titles these days store textures, sound, and model files in a compressed format (especially if it’s digitally delivered). Before such resources can be used, they need to be decompressed by the CPU and if that’s already busy handling lots of I/O requests, then transferring data from storage to the graphics card just gets bogged down.

We experience such things in the form of long load times, or stuttering during gameplay, and anything that can be done to improve such matters can only be a good thing.

The key tricks DirectStorage employs to help out are bundling I/O requests into batches, rather than handling them one at a time, and letting games decide when they need to be told that a request has been completed. The CPU still has to work through all of this, of course, but it can now apply multiple threads in parallel to the task.
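The difference between serial and batched handling can be sketched with a toy Python queue. This is not DirectStorage’s interface: the class, its methods, and the in-memory ‘drive’ are hypothetical, and the sketch only counts submissions and completion notifications to show where the CPU overhead is saved.

```python
class ReadQueue:
    """Hypothetical batching queue for reads from an in-memory 'drive'."""

    def __init__(self, drive):
        self.drive = drive          # dict: file name -> bytes
        self.pending = []
        self.submissions = 0        # times the CPU had to kick off work
        self.notifications = 0      # times the game was told work finished

    def enqueue(self, name, offset, size, dest, key):
        self.pending.append((name, offset, size, dest, key))

    def submit(self, on_complete=None):
        self.submissions += 1
        for name, offset, size, dest, key in self.pending:
            dest[key] = self.drive[name][offset:offset + size]
        self.pending.clear()
        self.notifications += 1     # one signal for the whole batch
        if on_complete:
            on_complete()


drive = {"textures.pak": bytes(range(64))}
chunks = {}

serial = ReadQueue(drive)
for i in range(8):                  # one request, one submit, one signal each
    serial.enqueue("textures.pak", i * 8, 8, chunks, i)
    serial.submit()

batched = ReadQueue(drive)
for i in range(8):                  # queue everything, then submit once
    batched.enqueue("textures.pak", i * 8, 8, chunks, i)
batched.submit(on_complete=lambda: print("all chunks ready"))

print(serial.submissions, batched.submissions)   # 8 1
```

Both queues deliver the same eight chunks, but the batched one only interrupts the game once, after everything it asked for has arrived.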

Another reason why this change is needed is the adoption of faster NVMe SSDs for local storage. These are blindingly fast, sporting far more data bandwidth than SATA drives. The latter take a comparatively long time to complete an I/O request, whereas the former can blast through them.

DirectStorage probably won’t provide any boost for older PCs and games, but for the latest machines and titles to come, the use of this API will help to give us quicker loading times, faster data streaming, and a bit more CPU breathing space. All thanks to a new software library!

Unfortunately, developers won’t be able to get their hands on DirectStorage for beta testing until next year, and there’s no indication as to when it will be publicly released.

Meanwhile, you may have heard that Nvidia is developing something they’re calling RTX IO. This system isn’t DirectStorage under a proprietary name: it’s about providing a means to bypass copying resources into system memory. Instead, a game could transfer data directly from the storage drive to the graphics card’s local memory.

However, it is being designed to be used in conjunction with Microsoft’s forthcoming API, to further reduce the number of I/O requests the CPU manages. And where one vendor has started something, others soon follow.

When 12 became Ultimate

Before we close off this look at DirectX 12’s next-gen enhancements, it’s worth commenting on an update to DirectX that Microsoft released in March 2020.

In previous DX iterations, minor releases followed the convention of version numbering. For example, DirectX 11 came out in the Fall of 2009, 11.1 in 2012, and 11.2 a year later. However, DirectX 12 remained as ‘12’ for nearly five years, until Microsoft announced DirectX 12 Ultimate.

This revision brought significant updates to Direct3D and DirectX Raytracing (which we’ve now covered), so let’s take a quick look at the former’s new features:

- Variable Rate Shading (VRS)

- Mesh Shaders

- Sampler Feedback

The first one essentially allows developers to control how frequently shaders get applied to a whole scene, part of it, or even just an individual primitive. Normally, shaders are applied to each pixel in a frame, but Variable Rate Shading allows you to select a block of pixels (e.g. 2 x 2) and run the shaders on that.

Although this results in a decrease in visual quality, the performance benefit is potentially huge, so if you don’t need something to look super accurate because it’s in the distance or moving past really quickly, decreasing the shader rate is a sensible thing to do.
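Coarse shading can be sketched in a few lines of Python: the shader runs once per block of pixels, and its result is copied to every pixel in that block. The function names are our own; in Direct3D 12, the rate is picked from a fixed set such as 1x1, 2x2, or 4x4, and can be set per draw, per primitive, or via a screen-space image.

```python
def shade_coarse(width, height, rate, shade):
    """Run `shade` once per (rx x ry) block and broadcast the result."""
    rx, ry = rate
    image = [[None] * width for _ in range(height)]
    invocations = 0
    for by in range(0, height, ry):
        for bx in range(0, width, rx):
            color = shade(bx, by)            # one shader invocation per block
            invocations += 1
            for y in range(by, min(by + ry, height)):
                for x in range(bx, min(bx + rx, width)):
                    image[y][x] = color
    return image, invocations


def gradient(x, y):
    return (x * 10, y * 10)                  # stand-in for an expensive shader


_, full_rate = shade_coarse(8, 8, (1, 1), gradient)
image, coarse = shade_coarse(8, 8, (2, 2), gradient)
print(full_rate, coarse)                      # 64 16
print(image[1][1])                            # (0, 0): copied from the block's first pixel
```

At a 2x2 rate the shader runs a quarter as often, at the cost of each block sharing a single color.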

Mesh shaders are a logical enhancement to geometry and vertex shaders, the tools used to move and scale the objects and environment in a scene.

Traditionally, this area of vertex processing has been quite rigid in terms of programming flexibility and control, but mesh shaders push it towards the realm of compute shaders.

The benefit for us will be in the form of having more complex models and scenery in our games, without suffering lower performance. This is something that we’ve heard before, over the years, especially with tessellation and geometry shaders, but this time round it’s a genuine improvement.
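Mesh shaders typically work on ‘meshlets’: small chunks of a model, each with a capped number of vertices and triangles, that a single group of GPU threads can process (and cull) independently. A greedy way of splitting a mesh into meshlets can be sketched in Python. The limits used here are illustrative (Direct3D 12 lets a mesh shader output at most 256 vertices and 256 primitives), and production tools also try to group triangles that sit close together.

```python
def build_meshlets(triangles, max_verts=64, max_tris=126):
    """Greedily pack triangles (tuples of vertex indices) into meshlets.

    Each meshlet is (vertex_list, local_triangles): vertex_list holds the
    mesh's vertex indices, and local_triangles index into vertex_list.
    """
    meshlets = []
    local = {}          # mesh vertex index -> index within this meshlet
    tris = []
    for tri in triangles:
        new = [v for v in dict.fromkeys(tri) if v not in local]
        if len(local) + len(new) > max_verts or len(tris) == max_tris:
            meshlets.append((list(local), tris))       # close current meshlet
            local, tris = {}, []
            new = list(dict.fromkeys(tri))
        for v in new:
            local[v] = len(local)
        tris.append(tuple(local[v] for v in tri))
    if tris:
        meshlets.append((list(local), tris))
    return meshlets


strip = [(0, 1, 2), (1, 3, 2), (2, 3, 4), (3, 5, 4)]
for verts, local_tris in build_meshlets(strip, max_verts=4):
    print(verts, local_tris)
# [0, 1, 2, 3] [(0, 1, 2), (1, 3, 2)]
# [2, 3, 4, 5] [(0, 1, 2), (1, 3, 2)]
```

Because each meshlet is self-contained, the GPU can discard whole meshlets that face away from the camera or sit off-screen before any triangles are rasterized.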

Sampler Feedback operates on a similar theme: giving the developer more information and control, but about what textures they’re using. The potential advantages are a reduction in the amount of texture data that needs to be streamed from memory into the GPU and, where a game has rendered objects as textures, the ability to use those textures more effectively and thus more quickly.
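For texture streaming, the idea can be sketched in Python as a feedback map that records, for each region (tile) of a texture, the most detailed mip level that was actually sampled. This is loosely modelled on Direct3D 12’s ‘min mip’ feedback maps, but the class and its methods are hypothetical and run on the CPU purely for illustration.

```python
class FeedbackMap:
    """Records the most detailed mip level sampled in each texture tile."""

    def __init__(self, tiles_x, tiles_y, mip_count):
        self.tiles_x, self.tiles_y = tiles_x, tiles_y
        self.mip_count = mip_count
        # mip_count means "never sampled"; mip 0 is the most detailed level.
        self.min_mip = [[mip_count] * tiles_x for _ in range(tiles_y)]

    def record(self, u, v, mip):
        # u, v are texture coordinates in [0, 1].
        tx = min(int(u * self.tiles_x), self.tiles_x - 1)
        ty = min(int(v * self.tiles_y), self.tiles_y - 1)
        self.min_mip[ty][tx] = min(self.min_mip[ty][tx], mip)

    def tiles_to_stream(self):
        # Only tiles that were touched need data, and only down to that mip.
        return {(tx, ty): mip
                for ty, row in enumerate(self.min_mip)
                for tx, mip in enumerate(row)
                if mip < self.mip_count}


feedback = FeedbackMap(tiles_x=4, tiles_y=4, mip_count=8)
feedback.record(0.1, 0.1, mip=5)    # distant object: a coarse mip is enough
feedback.record(0.1, 0.1, mip=2)    # same tile seen closer up
feedback.record(0.9, 0.6, mip=0)    # full detail needed here
print(feedback.tiles_to_stream())   # {(0, 0): 2, (3, 2): 0}: 2 of 16 tiles
```

In this example, only 2 of the 16 tiles need any data at all, and only one of them at full detail.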

The final feature of DirectX 12 Ultimate isn’t a cool new graphics trick or an API to speed up data handling. Microsoft have unified their APIs for Windows and the Xbox platform into one cohesive library — in theory, this should make it far easier to create a new title that will require minimal work to port it from one system to the other.

This was the idea behind the original Xbox, but the 360 veered away from it (as did the One, but to a lesser extent) by using a hardware and software design that wasn’t overly PC-like. With the Series X and S, the internals are about as close to a PC as you can get without actually being one, so it made sense for the software to follow suit.

What’s good for developers is good for us

Although everything we’ve covered here is really for developers to utilize and take advantage of, we’re the ones who ultimately benefit, even though it will take some time before all such features are commonplace in games.

While the focus is still on creating ever more realistic graphics, especially as far as ray tracing is concerned, Microsoft have kept a keen eye on performance. This is because it’s becoming increasingly difficult for hardware vendors to create new products that are significantly more powerful than their predecessors, at a price tag that appeals to the majority of consumers.

Not everyone can afford, or wants, to spend $1,000 on a graphics card just to run games at their highest settings and still get good frame rates. And publishers typically want their titles to reach as many consumers as possible, rather than just being for a select few.

All of which means that DirectX 12 Ultimate, and all its nifty enhancements, are going to be used to make better-looking games that all of us can enjoy.

