The big picture: A big challenge in analyzing a rapidly growing company like Nvidia is making sense of all the different businesses it participates in, the numerous products it announces, and the overall strategy it’s pursuing. Following the keynote speech by CEO Jensen Huang at the company’s annual GTC Conference this year, the task was particularly daunting. As usual, Huang covered an enormous range of topics over a lengthy presentation and, frankly, left more than a few people scratching their heads.

However, during an enlightening Q&A session with industry analysts a few days later, Huang shared several insights that suddenly made all the various product and partnership announcements he covered, as well as the thinking behind them, crystal clear.

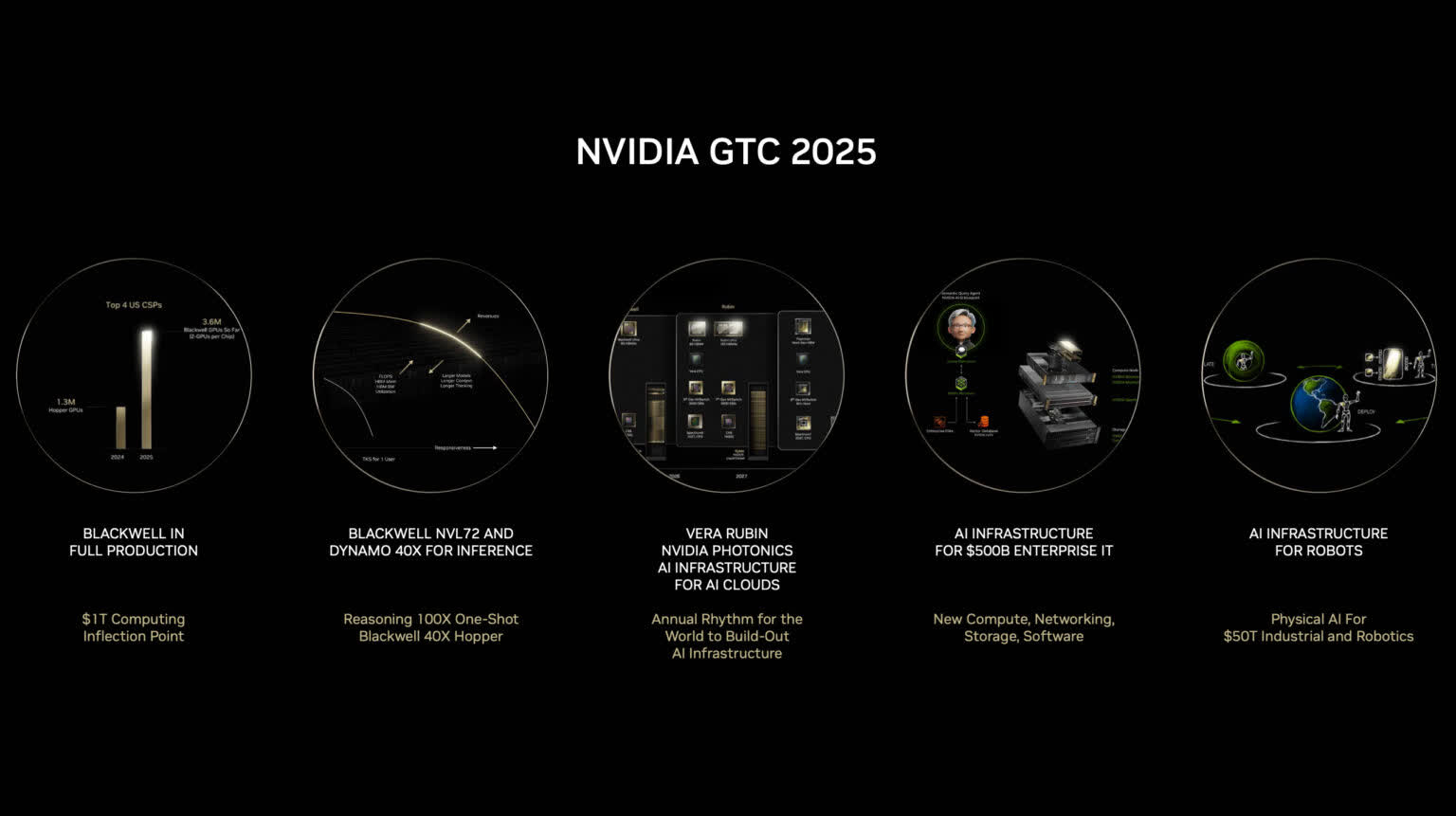

In essence, he said that Nvidia is now an AI infrastructure provider, building a platform of hardware and software that large cloud computing providers, tech vendors, and enterprise IT departments can use to develop AI-powered applications.

Needless to say, that’s an extraordinarily far cry from its role as a provider of graphics chips for PC gaming, or even from its efforts to help drive the creation of machine learning algorithms. Yet, it unifies several seemingly disparate announcements from recent events and provides a clear indication of where the company is heading.

Nvidia is moving beyond its origins and its reputation as a semiconductor design house into the critical role of an infrastructure enabler for the future world of AI-powered capabilities – or, as Huang described it, an “intelligence manufacturer.”

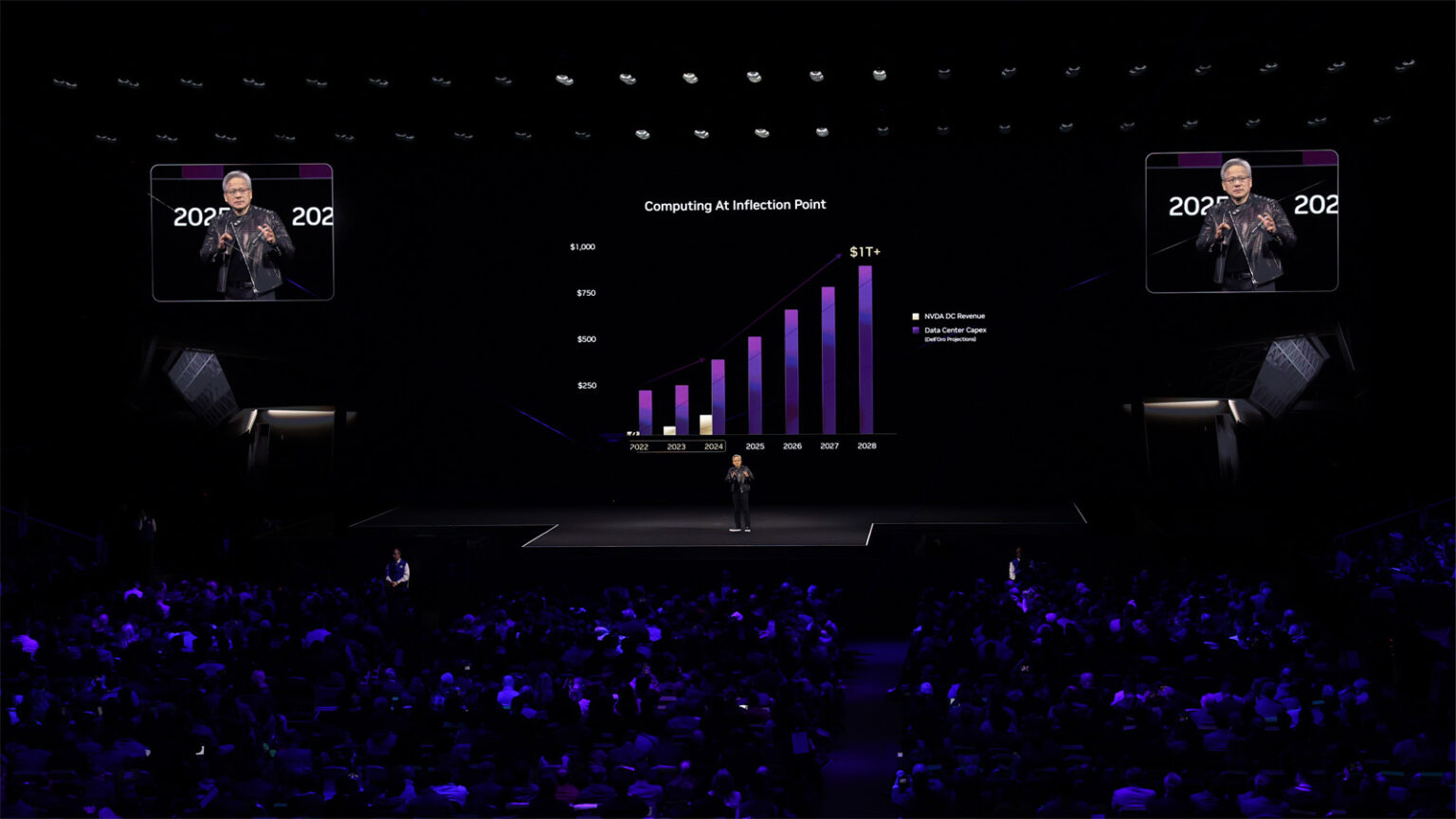

In his GTC keynote, Huang discussed Nvidia’s efforts to enable efficient generation of tokens for modern foundation models, linking these tokens to intelligence that organizations will leverage for future revenue generation. He described these initiatives as building an AI factory, relevant to an extensive range of industries.

Although ambitious, the signs of an emerging information-driven economy – and the efficiencies AI brings to traditional manufacturing – are becoming increasingly evident. From businesses built entirely around AI services (like ChatGPT) to robotic manufacturing and distribution of traditional goods, we are undoubtedly moving into a new economic era.

In this context, Huang extensively outlined how Nvidia’s latest offerings facilitate faster and more efficient token creation. He began with AI inference, commonly considered simpler than the AI training processes that first brought Nvidia into prominence. However, Huang argued that inference, particularly when used with new chain-of-thought reasoning models such as DeepSeek R1 and OpenAI’s o1, will require approximately 100 times more computing resources than current one-shot inference methods. Consequently, there’s little concern that more efficient language models will reduce the demand for computing infrastructure. Indeed, we remain in the early stages of AI factory infrastructure development.
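To see why longer reasoning traces translate into more compute, a rough back-of-the-envelope sketch helps. It uses the common rule of thumb that generating one token costs about 2 FLOPs per model parameter, and it ignores attention costs that grow with context length. The model size and token counts below are hypothetical values chosen for illustration, not figures from Huang's talk.

```python
def decode_flops(params: float, generated_tokens: int) -> float:
    """Approximate forward-pass FLOPs for generating tokens.

    Rule of thumb: ~2 FLOPs per parameter per generated token.
    Attention cost over long contexts is deliberately ignored.
    """
    return 2.0 * params * generated_tokens


# All three values are hypothetical, for illustration only.
PARAMS = 70e9              # assumed model size: 70 billion parameters
ONE_SHOT_TOKENS = 200      # assumed length of a direct answer
REASONING_TOKENS = 20_000  # assumed length of answer plus reasoning trace

one_shot = decode_flops(PARAMS, ONE_SHOT_TOKENS)
reasoning = decode_flops(PARAMS, REASONING_TOKENS)
ratio = reasoning / one_shot

print(f"one-shot:  {one_shot:.2e} FLOPs")
print(f"reasoning: {reasoning:.2e} FLOPs")
print(f"compute ratio: {ratio:.0f}x")
```

Under this simplified model, compute scales linearly with the number of generated tokens, so a response that emits 100 times more tokens needs roughly 100 times more compute on the same model.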

One of Huang’s most important yet least understood announcements was a new software tool called Nvidia Dynamo, designed to enhance the inference process for advanced models. Dynamo, an upgraded version of Nvidia’s Triton Inference Server software, dynamically allocates GPU resources for various inference stages, such as prefill and decode, each with distinct computing requirements. It also creates dynamic information caches, managing data efficiently across different memory types.

Operating similarly to Docker’s orchestration of containers in cloud computing, Dynamo intelligently manages resources and data necessary for token generation in AI factory environments. Nvidia has dubbed Dynamo the “OS of AI factories.” Practically speaking, Dynamo enables organizations to handle up to 30 times more inference requests with the same hardware resources.
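The idea of shifting GPUs between prefill and decode as load changes can be illustrated with a toy allocator. This is a minimal sketch of the general concept only, not Dynamo's actual API or scheduling algorithm: the `StageLoad` class, the `allocate_gpus` function, and all throughput numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StageLoad:
    """Pending work for one inference stage (hypothetical units)."""
    name: str
    pending_tokens: int      # tokens queued for this stage
    tokens_per_gpu_sec: int  # assumed throughput of one GPU on this stage


def allocate_gpus(total_gpus: int, stages: list[StageLoad]) -> dict[str, int]:
    """Split a GPU pool across stages in proportion to estimated GPU-seconds of work.

    Every stage keeps at least one GPU; the rest are shared out proportionally,
    with rounding leftovers going to the largest fractional remainders.
    """
    if total_gpus < len(stages):
        raise ValueError("need at least one GPU per stage")

    # Estimated GPU-seconds required to drain each stage's queue.
    demand = {s.name: s.pending_tokens / s.tokens_per_gpu_sec for s in stages}
    total_demand = sum(demand.values())

    alloc = {s.name: 1 for s in stages}
    spare = total_gpus - len(stages)
    if total_demand == 0:
        return alloc  # nothing queued: spare GPUs stay unassigned

    shares = {name: spare * d / total_demand for name, d in demand.items()}
    for name, share in shares.items():
        alloc[name] += int(share)

    # Hand out GPUs lost to rounding, largest fractional share first.
    leftover = total_gpus - sum(alloc.values())
    by_fraction = sorted(shares, key=lambda n: shares[n] - int(shares[n]), reverse=True)
    for name in by_fraction[:leftover]:
        alloc[name] += 1
    return alloc


# Decode-heavy moment: many long generations in flight.
busy_decode = allocate_gpus(8, [
    StageLoad("prefill", pending_tokens=120_000, tokens_per_gpu_sec=40_000),
    StageLoad("decode", pending_tokens=10_000, tokens_per_gpu_sec=2_000),
])

# Prefill-heavy moment: a burst of long prompts arrives.
busy_prefill = allocate_gpus(8, [
    StageLoad("prefill", pending_tokens=400_000, tokens_per_gpu_sec=40_000),
    StageLoad("decode", pending_tokens=4_000, tokens_per_gpu_sec=2_000),
])

print(busy_decode)
print(busy_prefill)
```

The two calls show the same eight-GPU pool being divided differently as the mix of work changes, which is the kind of rebalancing a static split between stages cannot do.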

Of course, it wouldn’t be GTC if Nvidia didn’t also have chip and hardware announcements, and there were plenty this time around. Huang presented a roadmap for future GPUs, including an update to the current Blackwell series called Blackwell Ultra (GB300 series), offering enhanced onboard HBM memory for improved performance.

He also unveiled the new Vera Rubin architecture, featuring a new Arm-based CPU called Vera and a next-generation GPU named Rubin, each incorporating significantly more cores and advanced capabilities. Huang even hinted at the generation beyond that – named after physicist Richard Feynman – projecting Nvidia’s roadmap into 2028 and beyond.

During the subsequent Q&A session, Huang explained that revealing future products well in advance is crucial for ecosystem partners, enabling them to prepare adequately for upcoming technological shifts.

Huang also emphasized several partnerships announced at this year’s GTC. The significant presence of other tech vendors demonstrated their eagerness to participate in this growing ecosystem. Huang explained that getting the most out of AI infrastructure requires advancements across the entire traditional computing stack, not only in compute but also in networking and storage.

To that end, Nvidia unveiled new silicon photonics technology for optical networking between GPU-accelerated server racks and discussed a partnership with Cisco. The Cisco partnership allows Cisco silicon to be used in routers and switches designed for integrating GPU-accelerated AI factories into enterprise environments, along with a shared software management layer.

For storage, Nvidia collaborated with leading hardware providers and data platform companies, ensuring their solutions could leverage GPU acceleration, thus expanding Nvidia’s market influence.

And finally, building on the diversification strategy, Huang introduced more work that the company is doing for autonomous vehicles (notably a deal with GM) and robotics, both of which he described as part of the next big stage in AI development: physical AI.

Nvidia has been providing components to automakers for many years now and, similarly, has had robotics platforms for several years as well. What’s different now, however, is that they’re being tied back to AI infrastructure that can be used to better train the models that will be deployed into those devices, as well as providing the real-time inferencing data that’s needed to operate them in the real world. While this tie back to infrastructure is arguably a relatively modest advance, in the bigger context of the company’s overall AI infrastructure strategy, it does make more sense and helps tie together many of the company’s initiatives into a cohesive whole.

Making sense of all the various elements that Huang and Nvidia unveiled at this year’s GTC isn’t simple, particularly because of the firehose-like nature of all the different announcements and the much broader reach of the company’s ambitions. Once the pieces do come together, however, Nvidia’s strategy becomes clear: the company is taking on a much larger role than ever before and is well-positioned to achieve its ambitious objectives.

At the end of the day, Nvidia knows that being an infrastructure and ecosystem provider means that it can benefit both directly and indirectly as the overall tide of AI computing rises, even as its direct competition is bound to increase. It’s a clever strategy and one that could lead to even greater growth in the future.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.
