[ad_1]

The big picture: The European Organization for Nuclear Research (CERN) hosts one of the most ambitious engineering and scientific endeavors ever undertaken by humans. The Large Hadron Collider (LHC) is the world’s largest and highest-energy particle accelerator, which is used by scientists to analyze evidence of the structure of the subatomic world – in that process, the LHC is capable of generating tens of petabytes of data every year.

CERN recently had to upgrade its backend IT systems in preparation for the new experimental phase of the Large Hadron Collider (LHC Run 3). This phase is expected to generate 1 petabyte of data daily until the end of 2025. The previous database systems were no longer sufficient to handle the “high cardinality” data produced by the collider’s primary experiments, such as CMS.

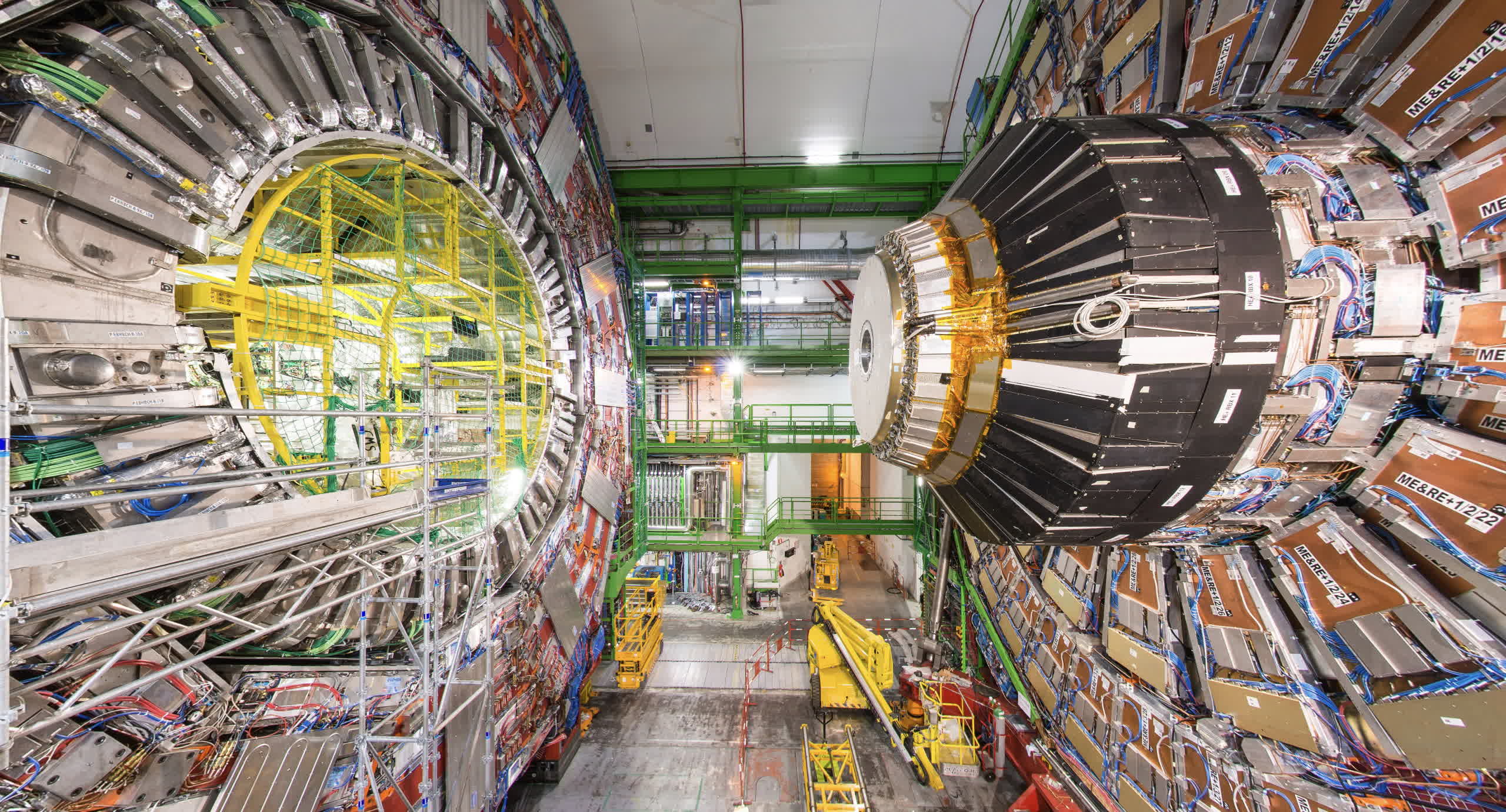

The Compact Muon Solenoid (CMS) is LHC’s general-purpose detector with a wide-ranging physics program. It encompasses the study of the Standard Model (including the Higgs boson) and the search for extra dimensions and particles that may constitute dark matter. CERN describes this experiment as one of the largest scientific collaborations in history, involving approximately 5,500 individuals from 241 institutes in 54 different countries.

CMS and other LHC experiments underwent a significant upgrade phase from 2018 to 2022 and are now prepared to resume colliding subatomic particles during the three-year “Run 3” data collection period. During the shutdown, CERN experts also performed substantial upgrades to the detector systems and computing infrastructures supporting CMS.

Brij Kishor Jashal, a scientist collaborating with CMS, mentioned that his team was aggregating 30 terabytes of data over a 30-day period to monitor infrastructure performance. He explained that Run 3 operations generate higher luminosity, resulting in a substantial increase in data volume. The previous backend monitoring system relied on the open-source time series database (TSDB) InfluxDB, which utilizes compression algorithms to efficiently handle this data, along with the monitoring database Prometheus.

However, InfluxDB and Prometheus encountered performance, scalability, and reliability issues, particularly when dealing with high cardinality data. High cardinality refers to the prevalence of repeated values and the ability to redeploy applications multiple times across new instances. To address these challenges, the CMS monitoring team opted to replace both InfluxDB and Prometheus with the VictoriaMetrics TSDB database.

VictoriaMetrics now serves as both the backend storage and the monitoring system for CMS, effectively addressing the cardinality issue that was encountered before. Jashal noted that the CMS team is currently satisfied with the performance of clusters and services. While there is still room for scalability, the services are operating in “high availability mode” within CMS’s dedicated Kubernetes clusters to provide enhanced reliability guarantees. CERN’s data center relies on an OpenStack service that runs on a cluster of robust x86 machines.

[ad_2]

Source link