Despite continuous improvements and incremental upgrades with each new generation, processors haven’t seen any industry-shifting advancements in a long time. The transition from vacuum tubes to transistors was revolutionary. The move from individual components to integrated circuits was another major leap. However, since then, there haven’t been any paradigm shifts of that magnitude. Yes, transistors have become smaller, chips have gotten faster, and performance has increased exponentially, but we are beginning to see diminishing returns.

This is the fourth and final installment in our CPU design series, providing an overview of computer processor design and manufacturing. Starting from the top down, we explored how computer code is compiled into assembly language and then converted into binary instructions that the CPU can interpret. We examined how processors are architected and how they process instructions. Then, we delved into the various structures that make up a CPU.

Going even deeper, we explored how these structures are built and how billions of transistors work together inside a processor. We also examined how processors are physically manufactured from raw silicon, learned the basics of semiconductors, and uncovered what the inside of a chip truly looks like.

That brings us to part four. Since companies don't publicly share the details of their research or current technology, it's difficult to determine exactly what's inside the CPU in your computer. However, we can examine ongoing research and industry trends to get a sense of where things are headed.

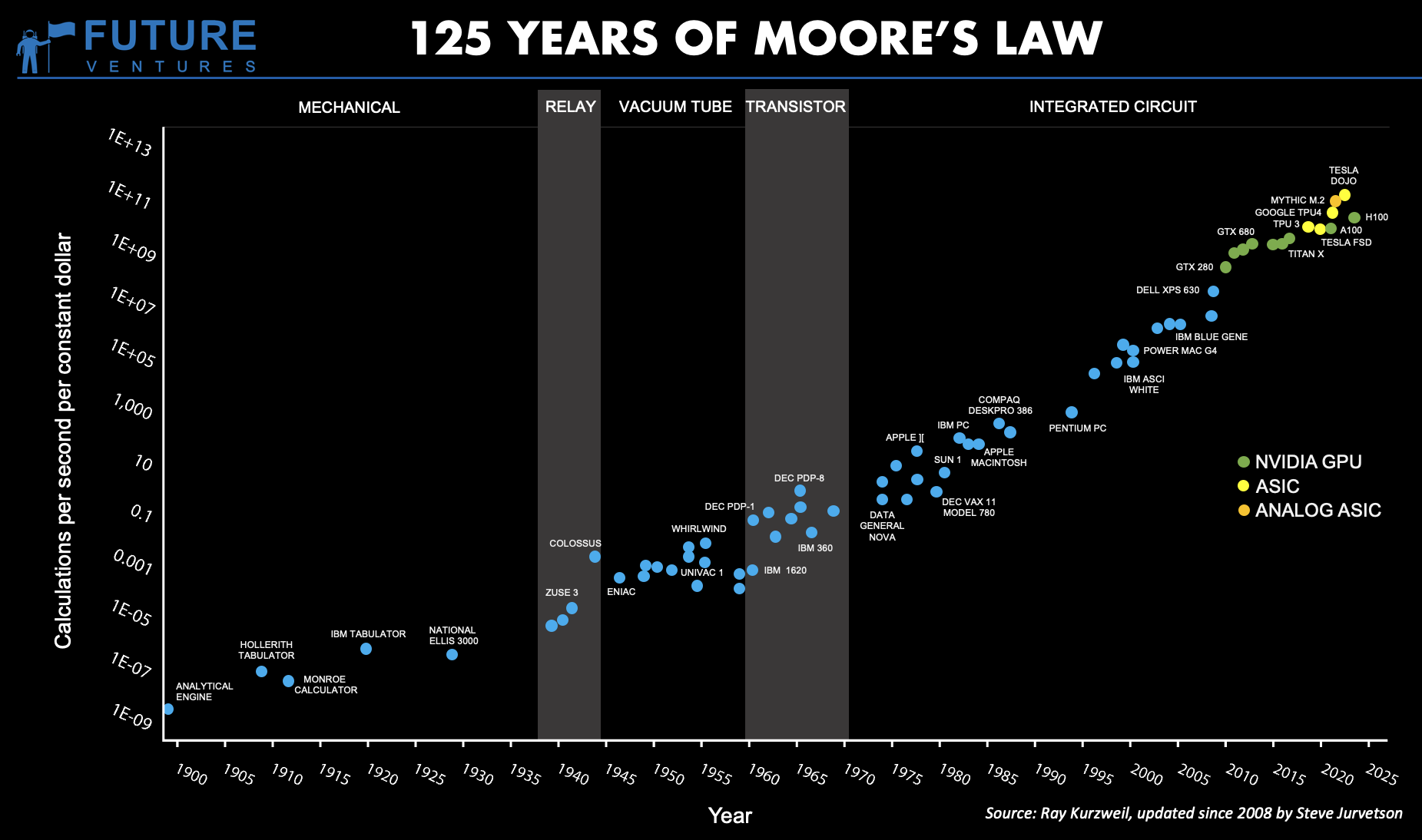

Moore’s Law Over 125 Years

One of the most well-known concepts in the processor industry is Moore's Law, the observation that the number of transistors on a chip doubles approximately every two years (a figure often quoted as 18 months). This held true for decades but has slowed significantly, arguably to the point of ending.
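
To see how quickly a fixed doubling period compounds, here is a minimal sketch of an idealized Moore's Law projection. It assumes a perfectly constant doubling period, which real chips never followed exactly, and starts from the Intel 4004 (1971) with roughly 2,300 transistors. It compares a two-year period with the 18-month figure.

```python
def projected_transistors(start_count: float, years: float, doubling_years: float) -> float:
    """Idealized Moore's Law projection: the count doubles every `doubling_years`."""
    return start_count * 2 ** (years / doubling_years)

# Starting point: the Intel 4004 (1971), with roughly 2,300 transistors.
for period in (2.0, 1.5):
    count = projected_transistors(2_300, 50, period)
    print(f"doubling every {period} years -> {count:,.0f} transistors after 50 years")
```

Over 50 years, the two-year curve lands at about 77 billion transistors, roughly in the range of large chips shipping today. The 18-month curve overshoots that by a factor of about 300, which shows how sensitive these projections are to the assumed period.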

Transistors have become so small that we are approaching the fundamental limits of physics. For traditional silicon-based CPUs, Moore’s Law is effectively over. The rate of transistor scaling has diminished considerably, leading chipmakers like Intel, AMD, and TSMC to shift their focus toward advanced packaging, chiplet architectures, and 3D stacking.

One direct result of this slowdown, combined with the power and heat limits of pushing clock speeds ever higher, is that companies have started to increase core count rather than frequency to improve performance. This is why octa-core processors have become mainstream rather than 10GHz dual-core chips. There simply isn't much room left for growth beyond adding more cores.
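
A first-order model of CMOS dynamic (switching) power helps explain this shift. Here $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the supply voltage, and $f$ the clock frequency. The assumption that voltage must rise roughly in step with frequency is a simplification that holds mainly near the top of a chip's operating range.

$$
P_{\text{dyn}} \approx \alpha C V^{2} f, \qquad V \propto f \;\Rightarrow\; P_{\text{dyn}} \propto f^{3}
$$

$$
\frac{P_{\text{dyn}}(2f)}{P_{\text{dyn}}(f)} \approx 2^{3} = 8,
\qquad
\frac{P_{\text{dyn}}(\text{2 cores at } f)}{P_{\text{dyn}}(\text{1 core at } f)} \approx 2
$$

Under this model, doubling the clock speed costs roughly eight times the power, while doubling the core count roughly doubles it. The extra cores only pay off for software that can actually run in parallel.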

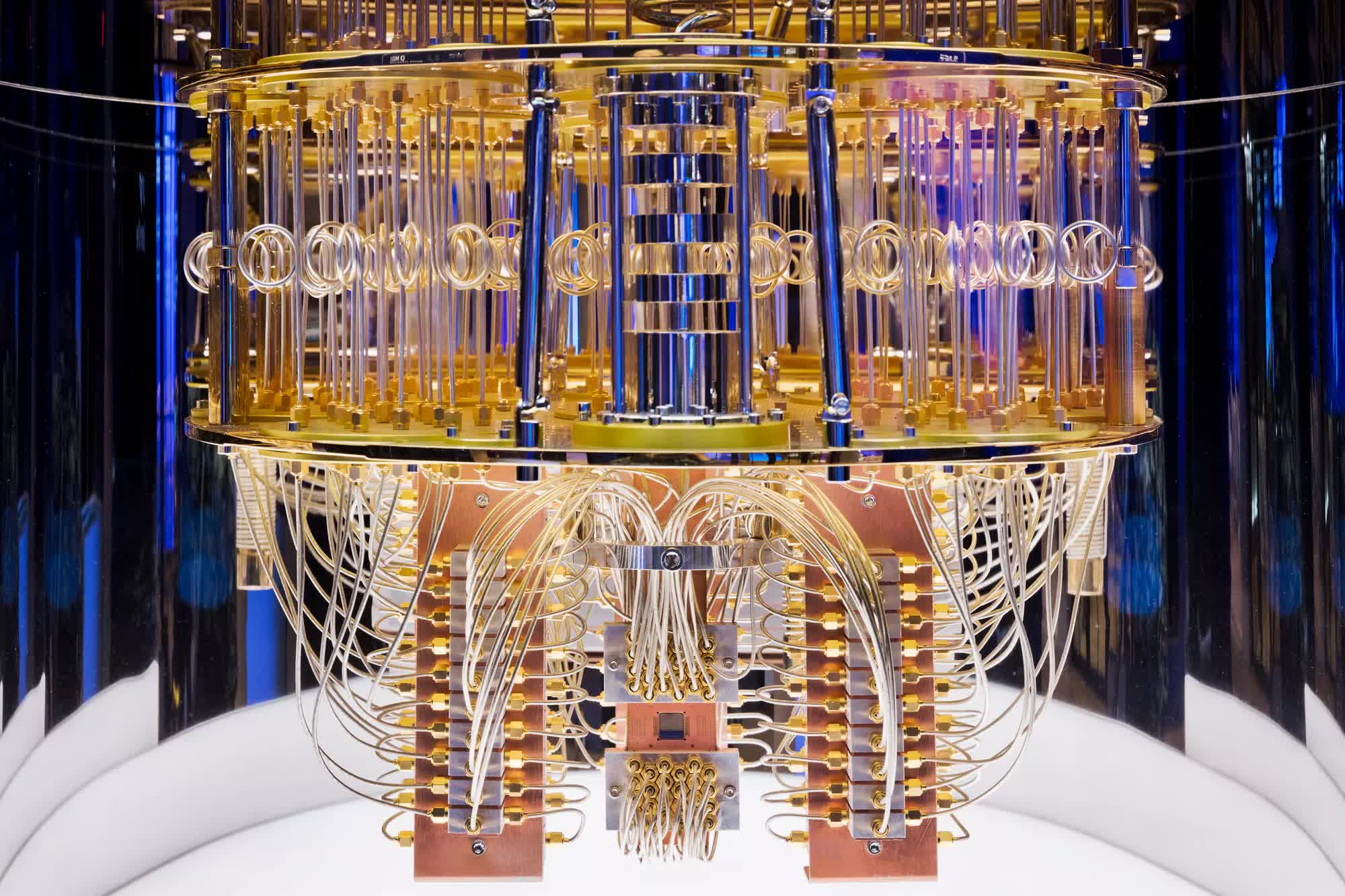

Quantum Computing

On a completely different note, quantum computing is an area that promises lots of room for growth in the future. We won't pretend to be experts on this, and since the technology is still being developed, there aren't many real "experts" anyway. To dispel one myth: quantum computing isn't something that will give you 1,000fps in a lifelike render or anything like that. For now, the main advantage of quantum computers is that they allow for new kinds of algorithms that were previously not feasible to run on traditional computers.

Inside an IBM Quantum System One

In a traditional computer, each bit is either a 0 or a 1, represented by transistors switched off or on. In a quantum computer, a quantum bit (qubit) can exist in a superposition of 0 and 1, loosely described as being both at the same time until it is measured. With this capability, computer scientists can develop new methods of computation and solve problems we don't currently have the compute capability for. It's not so much that quantum computers are faster; they are a new model of computation that will let us solve different types of problems.
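
To make superposition a little more concrete, here is a toy simulation of a single qubit on an ordinary computer. It is a minimal sketch that assumes the standard state-vector description of a qubit. The function names are our own, and simulating qubits this way provides none of the speedup of real quantum hardware.

```python
import math
import random

# A qubit's state: complex amplitudes (a, b) for |0> and |1>, with |a|^2 + |b|^2 = 1.
State = tuple[complex, complex]

ZERO: State = (1 + 0j, 0j)  # the qubit equivalent of a classical 0

def hadamard(state: State) -> State:
    """Hadamard gate: turns |0> into an equal superposition of |0> and |1>."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state: State) -> tuple[float, float]:
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

def measure(state: State, rng: random.Random) -> int:
    """Measuring collapses the superposition into a classical 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

rng = random.Random(42)
plus = hadamard(ZERO)
print(probabilities(plus))                      # approximately (0.5, 0.5)
print([measure(plus, rng) for _ in range(10)])  # ten random 0s and 1s
print(probabilities(hadamard(plus)))            # approximately (1.0, 0.0)
```

Applying the Hadamard gate once gives a 50/50 chance of measuring 0 or 1. Applying it twice returns the qubit to 0 every time, which a coin flip could never do: the amplitudes interfere, and quantum algorithms exploit that interference. Simulating n qubits this way takes 2^n amplitudes, which is why classical simulation quickly becomes infeasible.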

The technology for this is still a decade or two away from the mainstream (depending on who you ask), so what trends are we starting to see in real processors right now? There are dozens of active research areas, but I'll touch on a few that I consider the most impactful.

High-Performance Computing Trends and AI

One growing trend that already affects most of us is heterogeneous computing: combining multiple different computing elements in a single system. Most of us benefit from this in the form of a dedicated GPU.

A CPU is general-purpose and can perform a wide variety of computations at a reasonable speed. A GPU, on the other hand, is designed specifically for graphics-style calculations such as matrix multiplication. It is very good at them and can be orders of magnitude faster than a CPU on those types of workloads. By offloading these calculations from the CPU to the GPU, we can accelerate the workload. It's relatively easy for a programmer to optimize software by tweaking an algorithm, but optimizing hardware is much more difficult.
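
Matrix multiplication shows why GPUs excel at this kind of work: every element of the output is an independent dot product. The sketch below is plain Python running on a CPU and assumes small list-of-lists matrices. The GPU mapping is described only in the comments.

```python
Matrix = list[list[float]]

def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """Element (i, j) of A x B: the dot product of row i of A and column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul(a: Matrix, b: Matrix) -> Matrix:
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    # No (i, j) element depends on any other. A CPU core computes them a few at a
    # time; a GPU can assign each one to its own thread and run thousands at once.
    return [[output_element(a, b, i, j) for j in range(len(b[0]))]
            for i in range(len(a))]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
```

A 4096 x 4096 multiply has about 16.8 million of these independent output elements. That is plenty of parallel work to keep thousands of GPU cores busy.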

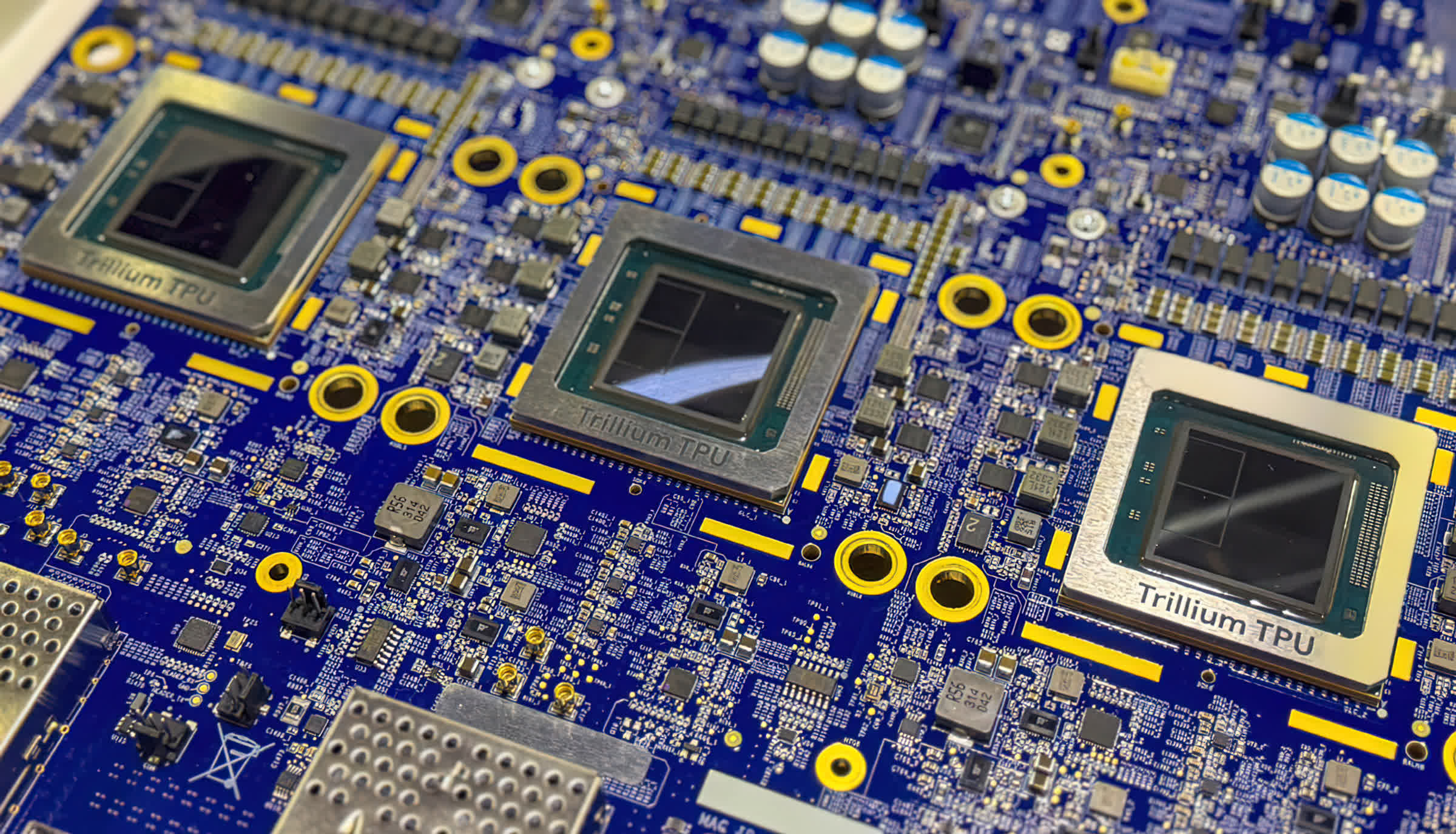

However, GPUs aren’t the only area where accelerators are becoming common. With the rise of AI and machine learning workloads, we are seeing a surge in custom AI processors. For example, Google’s Tensor Processing Units (TPUs) and Nvidia’s Tensor Cores are designed specifically for deep learning computations. Likewise, AMD’s Instinct MI300 and Intel’s Gaudi AI accelerators are shaping the AI landscape, offering more specialized performance for training and inference workloads.

Google Cloud TPU V6e Trillium 3

In addition to AI, specialized accelerators are now integral to mobile and cloud computing. Most smartphones have dozens of hardware accelerators designed to speed up very specific tasks. This computing style is known as a Sea of Accelerators, with examples including cryptography processors, image processors, machine learning accelerators, video encoders/decoders, biometric processors, and more.

As workloads get more and more specialized, hardware designers are incorporating even more accelerators into their chips. Cloud providers like AWS now offer FPGA instances for developers to accelerate workloads in the cloud. While traditional computing elements like CPUs and GPUs have a fixed internal architecture, an FPGA (Field-Programmable Gate Array) is flexible – it’s almost like programmable hardware that can be configured to fit specific computing needs.
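
At the heart of most FPGAs is the lookup table (LUT): a tiny memory whose stored bits define which logic function it performs. The sketch below models a single LUT in Python under simplified assumptions. Real FPGAs typically use LUTs with around four to six inputs and combine huge numbers of them with flip-flops and programmable routing, none of which is modeled here. The class and function names are hypothetical.

```python
from itertools import product
from typing import Callable

class LUT:
    """A k-input lookup table: 2**k stored bits, one output per input combination."""

    def __init__(self, k: int, config_bits: list[int]):
        if len(config_bits) != 2 ** k:
            raise ValueError(f"a {k}-input LUT needs exactly {2 ** k} configuration bits")
        self.k = k
        self.bits = config_bits

    def __call__(self, *inputs: int) -> int:
        if len(inputs) != self.k:
            raise ValueError(f"expected {self.k} inputs")
        # The input bits form a binary address that selects one stored bit.
        address = 0
        for bit in inputs:
            address = (address << 1) | bit
        return self.bits[address]

def configure(k: int, func: Callable[..., int]) -> list[int]:
    """'Program' a LUT by tabulating any k-input Boolean function."""
    return [func(*combo) for combo in product((0, 1), repeat=k)]

# The same kind of hardware element becomes different logic depending on its bits.
xor_gate = LUT(2, configure(2, lambda a, b: a ^ b))
majority = LUT(3, configure(3, lambda a, b, c: int(a + b + c >= 2)))
print([xor_gate(a, b) for a, b in product((0, 1), repeat=2)])  # [0, 1, 1, 0]
print(majority(1, 0, 1))  # 1
```

Reconfiguring an FPGA amounts, in large part, to rewriting bits like these along with the settings of the routing between elements.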

For example, if you want to accelerate image recognition, you can implement those algorithms in hardware. If you want to simulate a new hardware design, you can test it on an FPGA before actually building it. While FPGAs can offer better performance per watt than GPUs for some workloads, they are still outperformed by custom-built ASICs (application-specific integrated circuits), which are being developed by companies like Google, Tesla (Dojo), and Cerebras to optimize deep learning and AI processing.

Another emerging trend in high-performance computing and chip architecture is the shift toward chiplets (read our explainer), which we discussed in Part 3 of the series. Traditional monolithic chips are becoming increasingly difficult to scale, leading companies like AMD, Intel, and Apple to explore modular designs where smaller processing units (chiplets) are combined to function as a single processor. AMD’s Zen 4 and Zen 5 architectures, along with Intel’s Meteor Lake and Foveros 3D packaging, demonstrate how breaking up the CPU into separate chiplets can improve performance and efficiency.
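
One reason monolithic chips are hard to scale is manufacturing yield: the bigger the die, the more likely a single defect ruins it. A common first-order estimate is the Poisson yield model. The sketch below assumes that model, with a hypothetical defect density and die sizes chosen only for illustration. It ignores the extra packaging cost, interconnect area, and die-to-die latency that chiplets introduce.

```python
import math

def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies expected to have zero defects (simple Poisson model)."""
    return math.exp(-die_area_mm2 * defects_per_mm2)

DEFECT_DENSITY = 0.001  # hypothetical: 0.1 defects per cm^2 = 0.001 per mm^2

monolithic = poisson_yield(600, DEFECT_DENSITY)  # one hypothetical 600 mm^2 die
chiplet = poisson_yield(150, DEFECT_DENSITY)     # one of four 150 mm^2 chiplets

# A defect scraps only the chiplet it lands in, so the share of usable
# silicon tracks the per-chiplet yield rather than the big-die yield.
print(f"monolithic die yield: {monolithic:.1%}")  # 54.9%
print(f"per-chiplet yield:    {chiplet:.1%}")     # 86.1%
```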

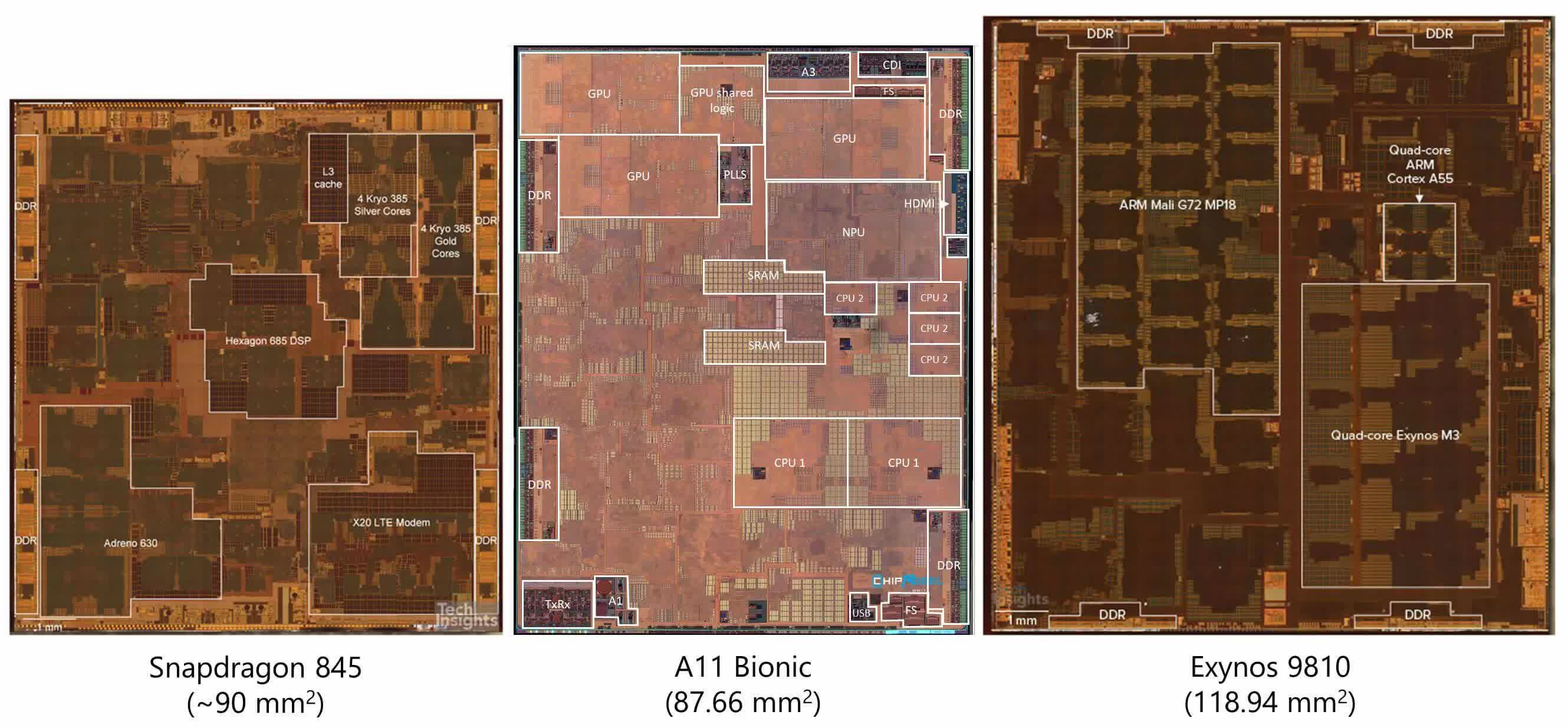

Looking at the die shots of some fairly recent processors, we can see that most of the area of the CPU is not actually the core itself. A growing amount is taken up by accelerators of all different types, including AI cores, NPUs, and DSPs. This shift has significantly sped up specialized workloads while also yielding major power savings, a crucial factor in data centers and mobile computing.

Die shots showing the makeup of several common mobile processors

Historically, if you wanted to add video processing to a system, you’d just add a separate chip to do it. That is hugely inefficient. Every time a signal has to go off-chip on a physical wire, there is a large amount of energy required per bit. While a tiny fraction of a Joule may not seem like much, it can be three to four orders of magnitude more efficient to communicate within the same chip rather than going off-chip. This has driven the growth of ultra-low-power chips, integrating accelerators directly into CPUs and SoCs to improve power efficiency.
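
Some back-of-the-envelope arithmetic shows why a tiny per-bit cost matters. The sketch assumes placeholder energy costs chosen only to reflect a roughly 1,000x gap (they are not measurements) and a hypothetical workload: moving each frame of uncompressed 4K video once at 60 frames per second.

```python
PJ = 1e-12  # one picojoule, in joules

# Hypothetical placeholder costs, chosen only to reflect a ~1,000x gap;
# real figures vary widely with process node and interface type.
ON_CHIP_PJ_PER_BIT = 0.01
OFF_CHIP_PJ_PER_BIT = 10.0

def transfer_energy(num_bytes: int, pj_per_bit: float) -> float:
    """Energy in joules to move num_bytes at the given cost per bit."""
    return num_bytes * 8 * pj_per_bit * PJ

frame_bytes = 3840 * 2160 * 3  # one uncompressed 4K frame, 3 bytes per pixel
fps = 60

off_chip_watts = transfer_energy(frame_bytes, OFF_CHIP_PJ_PER_BIT) * fps
on_chip_watts = transfer_energy(frame_bytes, ON_CHIP_PJ_PER_BIT) * fps
print(f"off-chip: {off_chip_watts:.3f} W, on-chip: {on_chip_watts * 1000:.3f} mW")
```

With these assumed numbers, moving the video off-chip costs roughly 0.12 W just for data transfer, versus roughly 0.12 mW on-chip. On a battery-powered device, that difference adds up across every accelerator.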

Accelerators aren’t perfect, though. As we add more of them, chips become less flexible, sacrificing overall general-purpose performance in favor of peak performance in certain workloads. At some point, the entire chip could become just a collection of accelerators, which would make it less useful as a general-purpose processor. The tradeoff between specialized and generalized performance is always being fine-tuned. This ongoing challenge is known as the specialization gap – the delicate balance between making hardware efficient for specific tasks while keeping it adaptable for different workloads.

Until a few years ago, some argued that we were reaching the peak of the GPU and machine learning accelerator boom, but reality has taken a completely different path. As AI models become larger and more complex and cloud computing continues to expand, we will likely see even more computations offloaded to specialized accelerators.

3D Integration and Memory Innovations

Another area where designers are looking for more performance is memory. Traditionally, reading and writing values has been one of the biggest bottlenecks for processors. While fast, large caches can help, accessing data from RAM can take hundreds of clock cycles, and accessing an SSD can take tens to hundreds of thousands. Because of this, engineers often view memory access as more expensive than computation itself.

If your processor wants to add two numbers, it first needs to calculate the memory addresses, determine where the data is located in the hierarchy, fetch it into registers, perform the computation, calculate the destination address, and then write the result back. For simple operations that may only take a cycle or two to complete, this is extremely inefficient.
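
To put rough numbers on that sequence, the sketch below tallies cycles for c = a + b on a simple load/store machine. The latencies are hypothetical, and the model assumes every access misses all caches and that the core waits for each one in turn.

```python
# Hypothetical latencies in clock cycles, for illustration only. This assumes
# every access misses all caches and the core waits for each one in turn,
# ignoring store buffers, prefetching, and overlapping memory requests.
LATENCY = {"address": 1, "load": 200, "add": 1, "store": 200}

# c = a + b, broken into the steps a simple load/store machine performs.
steps = [
    ("address", "compute address of a"),
    ("load", "fetch a into a register"),
    ("address", "compute address of b"),
    ("load", "fetch b into a register"),
    ("add", "add the two registers"),
    ("address", "compute address of c"),
    ("store", "write the result back to memory"),
]

total = sum(LATENCY[kind] for kind, _ in steps)
useful = LATENCY["add"]
print(f"{total} cycles in total, {useful} doing the addition ({useful / total:.1%})")
```

Under these assumptions, only one of 604 cycles is spent on the actual arithmetic. Caches exist precisely to shrink the load and store costs, but the imbalance is what motivates the ideas below.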

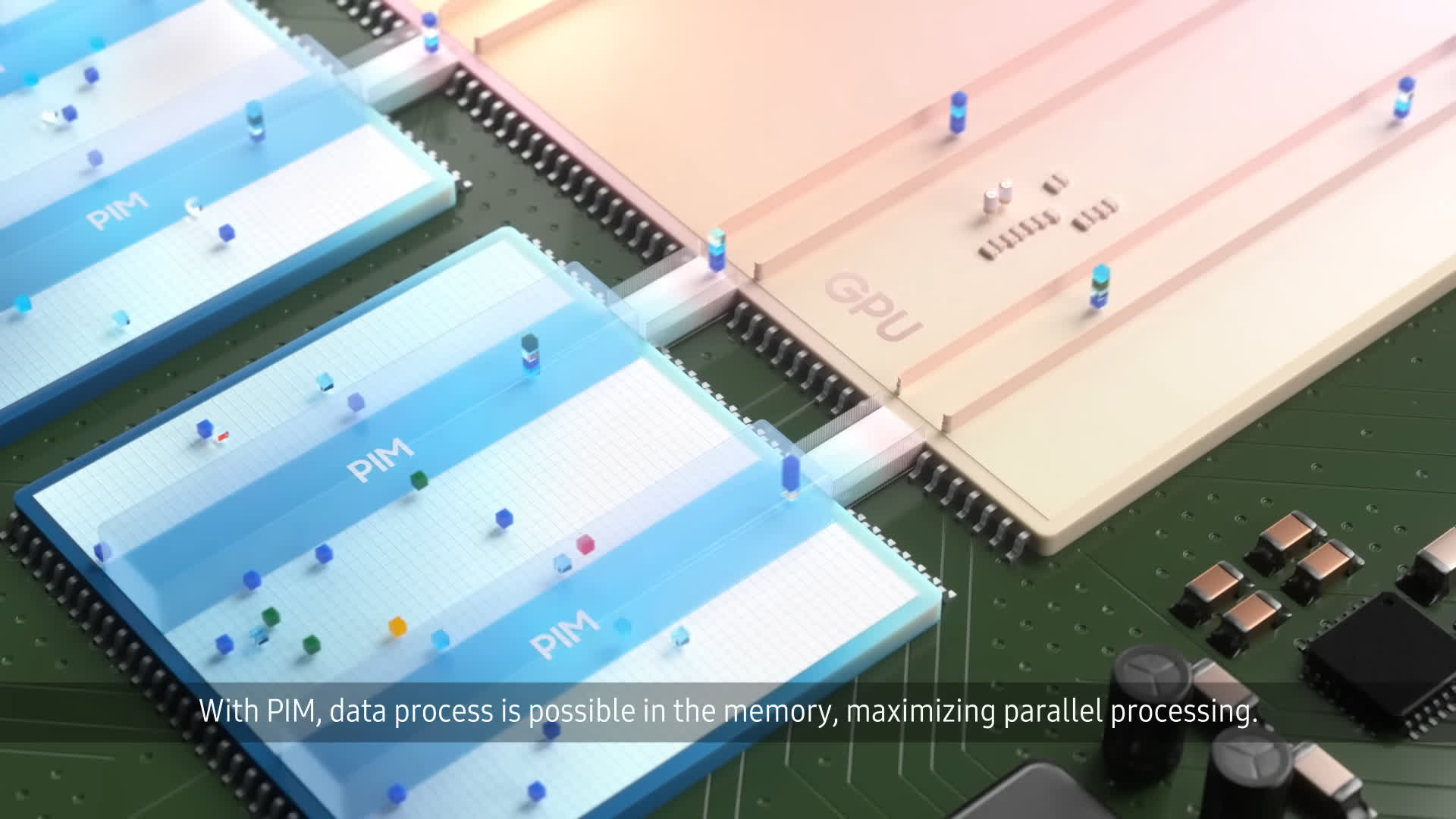

A novel idea that has seen a lot of research is a technique called Near Memory Computing (NMC). Instead of fetching small bits of data from memory to bring to a fast processor for computation, researchers are flipping this idea around: they are embedding compute capabilities directly inside memory controllers, RAM modules, or storage devices like SSDs. Processing-in-Memory (PIM), a subset of NMC, aims to perform operations directly where the data resides, eliminating much of the latency and energy costs of traditional memory access.
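
The difference in data movement is easy to see with a toy model. The sketch below assumes a hypothetical memory device that counts the bytes crossing the bus and can perform a sum internally. It is not the interface of any real HBM-PIM product.

```python
from dataclasses import dataclass

@dataclass
class MemoryDevice:
    """Hypothetical memory device that counts the bytes crossing the memory bus."""
    words: list[int]
    word_bytes: int = 8
    bytes_moved: int = 0

    def read_all(self) -> list[int]:
        # Conventional path: every word travels over the bus to the CPU.
        self.bytes_moved += len(self.words) * self.word_bytes
        return list(self.words)

    def sum_in_memory(self) -> int:
        # PIM-style path: simple logic next to the memory arrays does the
        # reduction, and only the single result crosses the bus.
        self.bytes_moved += self.word_bytes
        return sum(self.words)

data = list(range(1_000_000))

conventional = MemoryDevice(data)
cpu_total = sum(conventional.read_all())

pim = MemoryDevice(data)
pim_total = pim.sum_in_memory()

print(cpu_total == pim_total)                     # True
print(conventional.bytes_moved, pim.bytes_moved)  # 8000000 8
```

Both paths produce the same answer, but the PIM-style path moves a single word instead of a million.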

Major semiconductor companies such as Samsung, SK Hynix, and Micron are already developing HBM-PIM (High Bandwidth Memory Processing-In-Memory) solutions that integrate small compute units within memory stacks. For example, Samsung’s HBM-PIM prototype has demonstrated double-digit performance improvements in AI, cloud computing, and HPC workloads by reducing the amount of data movement required.

Another emerging memory innovation is Compute Express Link (CXL), a high-speed, cache-coherent interconnect technology that enables memory pooling and near-memory processing. Companies like Intel, AMD, and Nvidia are already integrating CXL-based memory expansion into datacenter and AI workloads, allowing multiple processors to share large memory pools efficiently. This technology helps reduce bottlenecks in traditional architectures, where each processor can only use the memory attached directly to it.

One of the hurdles to overcome with Near Memory Computing is manufacturing process limitations. As covered in Part 3, silicon fabrication is extremely complex with dozens of steps involved. These processes are typically specialized for either fast logic elements (for compute) or dense storage elements (for memory). If you tried to create a memory chip using a compute-optimized fabrication process, the density would suffer. Conversely, if you built a processor using a storage fabrication process, it would have poor performance and timing.

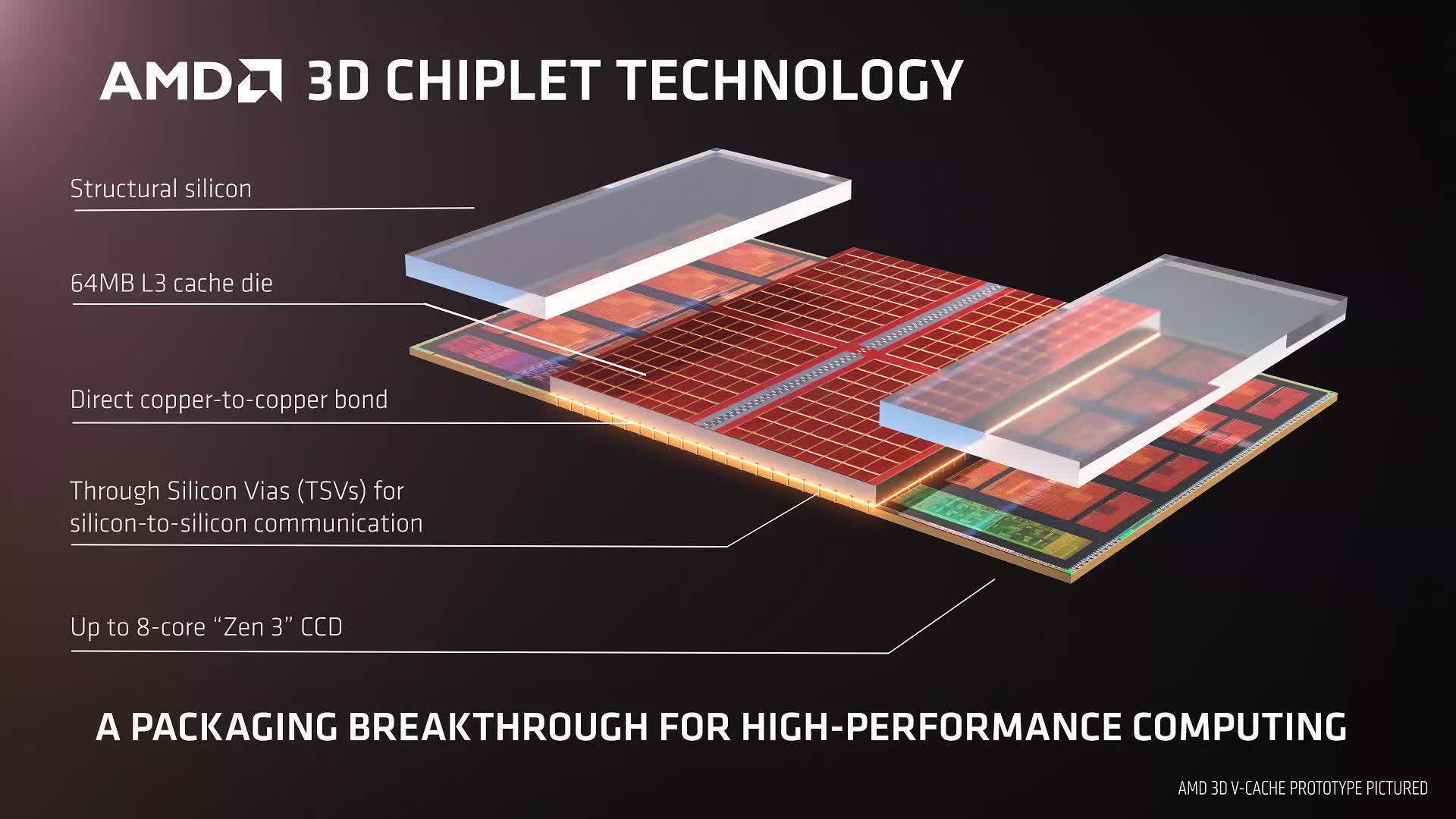

3D Integration: The Next Evolution in Chip Design

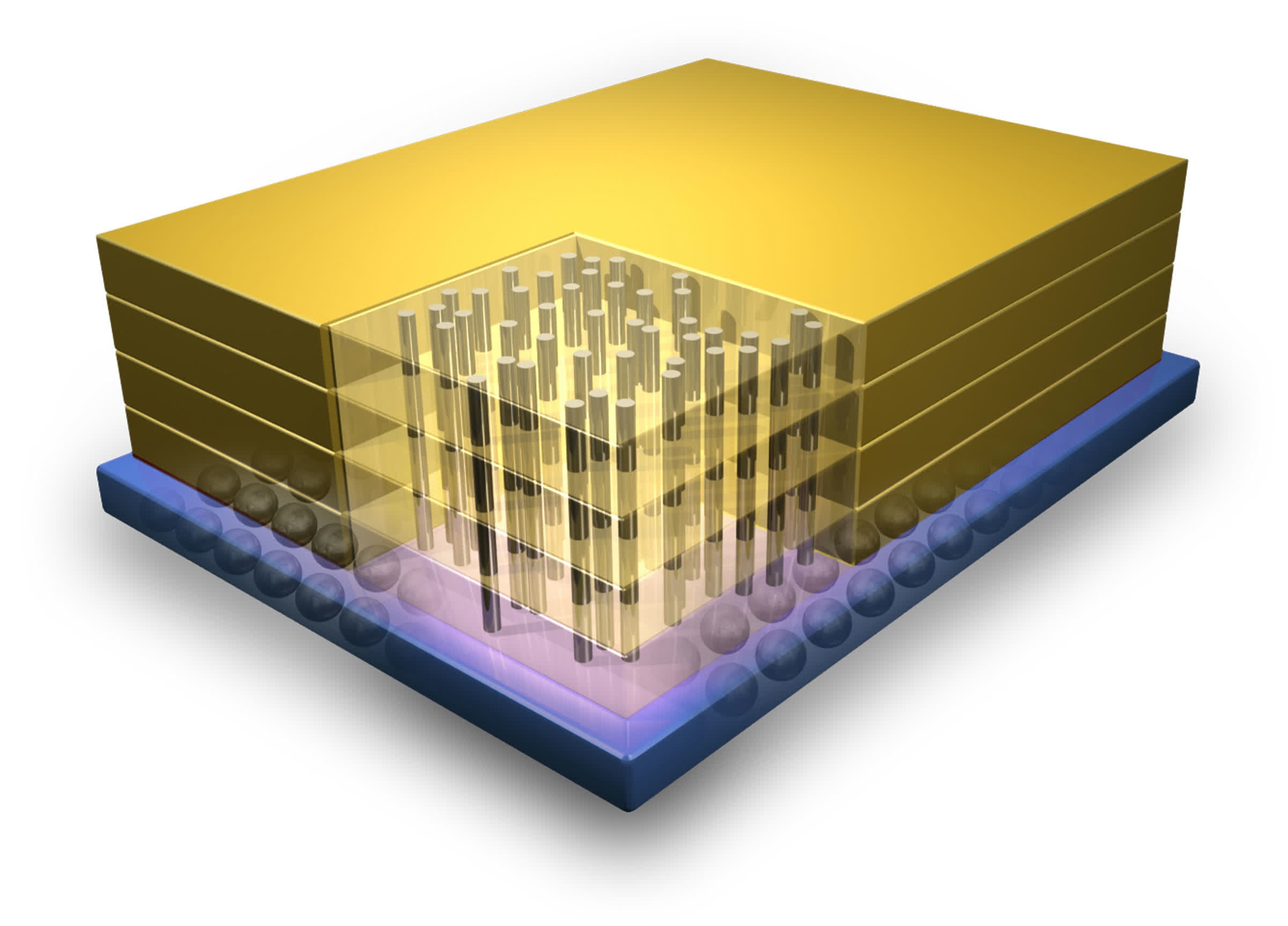

One potential solution to memory and performance bottlenecks is 3D Integration. Traditional processors have a single-layer transistor layout, but this approach has limitations. 3D stacking is the process of vertically layering multiple tiers of transistors to improve density, bandwidth, and latency. These stacked layers can be manufactured using different fabrication processes and connected using Through-Silicon Vias (TSVs) or hybrid bonding techniques.

An example of 3D integration showing the vertical connections between transistor layers.

3D NAND storage technology was an early commercial success of 3D stacking, and now high-performance processors are adopting similar concepts. AMD's 3D V-Cache technology, first introduced in the Ryzen 7 5800X3D, stacks an additional L3 cache die on top of a traditional CPU die, delivering significant performance gains in gaming and other latency-sensitive applications. Similarly, Intel's Foveros packaging enables stacked logic dies, allowing different chip components to be fabricated separately and later integrated into a single package.

High Bandwidth Memory (HBM) is another widely used form of 3D-stacked memory, where multiple DRAM dies are stacked on top of each other and connected via TSVs. This has become the standard for AI accelerators, GPUs, and HPC processors due to its higher bandwidth and lower power consumption compared to traditional DDR memory. Nvidia’s H100 Tensor Core GPU and AMD’s Instinct MI300 AI accelerator both leverage HBM technology to handle the massive data throughput required by AI workloads.
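
A quick calculation shows where HBM's bandwidth advantage comes from. This sketch uses the nominal figures for one HBM3 stack (1024-bit interface at up to 6.4 Gb/s per pin) and one DDR5-4800 module (64 data bits at 4.8 Gb/s per pin). These are theoretical peaks; sustained bandwidth in real systems is lower.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbit_per_s_per_pin / 8

hbm3_stack = peak_bandwidth_gb_s(1024, 6.4)  # one HBM3 stack: 1024-bit interface
ddr5_dimm = peak_bandwidth_gb_s(64, 4.8)     # one DDR5-4800 module: 64 data bits

print(f"HBM3 stack: {hbm3_stack:.1f} GB/s")  # 819.2 GB/s
print(f"DDR5-4800:  {ddr5_dimm:.1f} GB/s")   # 38.4 GB/s
```

The gap of more than 20x comes mostly from the 16x wider interface. That width is only practical because the stacked dies are connected with TSVs and sit right next to the processor in the same package.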

Future Outlook

In addition to physical and architectural changes, one trend shaping the semiconductor industry is a greater focus on security. Until recently, security in processors was somewhat of an afterthought, much like the internet, email, and many other systems we rely on, which were designed with almost no regard for security. Any security present on chips was usually bolted on after the fact to make us feel safer.

With processors, this eventually came back to bite companies. The infamous Spectre and Meltdown vulnerabilities were early examples of speculative execution flaws, and more recently, side-channel attacks like Zenbleed, Downfall, and Hertzbleed have shown that modern processor architectures still have major security gaps. As a result, processor manufacturers are now designing chips with built-in security features such as confidential computing, memory encryption, and secure enclaves.

In previous parts of this series, we touched on techniques such as High-Level Synthesis (HLS), which lets designers describe hardware in high-level languages and relies on optimization algorithms, increasingly AI-driven ones, to generate efficient circuit implementations. As chip development costs continue to skyrocket, the semiconductor industry is increasingly relying on software-aided hardware design and AI-assisted verification tools to optimize both design and manufacturing.

However, as traditional computing architectures approach their limits, researchers are exploring entirely new computing paradigms that could redefine the way we process information. Two of the most promising directions are neuromorphic computing and optical computing, which aim to overcome the fundamental bottlenecks of conventional semiconductor-based chips.

Neuromorphic computing is an emerging field that mimics the way the human brain processes information, using networks of artificial neurons and synapses rather than traditional logic gates. Meanwhile, optical computing replaces traditional electronic circuits with photonic processors that use light instead of electricity to transmit and process information. Because light avoids the resistive losses and heat of electrical wires and can carry many data streams in parallel on different wavelengths, optical computers have the potential to outperform even the most advanced semiconductor chips in certain tasks.
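
To give a flavor of what an artificial neuron means in this context, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a simplified model widely used in spiking neural network research. The parameter values are hypothetical. Neuromorphic hardware implements neurons in dedicated circuits that react to incoming events rather than stepping through a software loop like this one.

```python
def simulate_lif(input_current: list[float], threshold: float = 1.0,
                 leak: float = 0.9, reset: float = 0.0) -> list[int]:
    """Leaky integrate-and-fire neuron in discrete time steps.

    Each step, the membrane potential decays by `leak` and adds the input.
    When it reaches `threshold`, the neuron emits a spike (1) and resets.
    """
    v = reset
    spikes = []
    for i_t in input_current:
        v = leak * v + i_t  # integrate the input, with leakage
        if v >= threshold:
            spikes.append(1)
            v = reset       # fire, then start over
        else:
            spikes.append(0)
    return spikes

print(simulate_lif([0.3] * 10))   # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(sum(simulate_lif([0.05] * 100)))  # 0: too weak to overcome the leak
```

Unlike a logic gate, the neuron's output depends on the timing and history of its inputs, and it only produces activity (spikes) when enough input accumulates.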

While it’s impossible to predict the future, the innovative ideas and research fields we have talked about here should serve as a roadmap for what we can expect in future processor designs. What we do know for sure is that we are approaching the end of traditional manufacturing scaling. To continue increasing performance each generation, designers will need to come up with even more complex solutions.

We hope this four-part series has piqued your interest in the fields of processor design, manufacturing, verification, and more. There's a never-ending amount of material to cover, and each one of these articles could fill an upper-level university course if we tried to cover it all. Hopefully you've learned something new and have a better understanding of how complex computers are at every level.

Masthead credit: Processor with abstract lighting by Dan74
