
Why it matters: Groq is the hundredth startup to take a shot at making an AI accelerator card, the second to market, and the first to have a product reach the 1 quadrillion operations per second (1,000 TOPS) threshold. That's quadruple the INT8 performance of Nvidia's most powerful card, the Tesla V100.

The Groq Tensor Streaming Processor (TSP) demands 300W per core, so luckily, it’s only got one. Even luckier, Groq has turned that from a disadvantage into the TSP’s greatest strength.

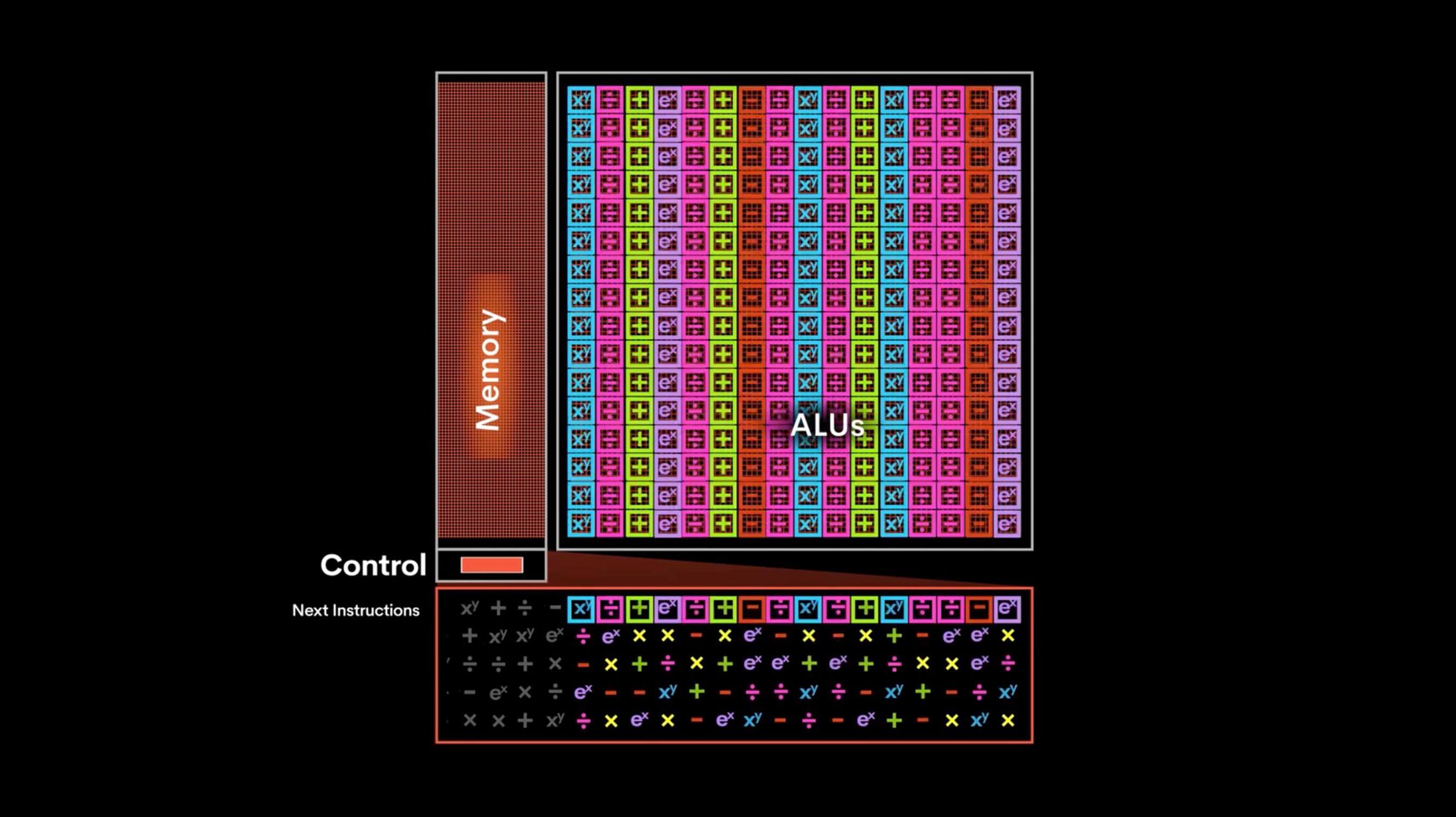

You should probably throw everything you know about GPUs or AI processing out the window, because the TSP is just plain weird. It's a giant piece of silicon containing almost nothing but vector and matrix processing units and cache, with no controllers or backend whatsoever. Instead, the compiler has direct control over the hardware.

The TSP is divided into 20 superlanes. From left to right, each superlane is built from a Matrix Unit (320 MACs), Switch Unit, Memory Unit (5.5 MB), Vector Unit (16 ALUs), Memory Unit (5.5 MB), Switch Unit, and Matrix Unit (320 MACs). The components are mirrored around the Vector Unit, which divides the superlane into two hemispheres that can act almost independently.
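To make the layout concrete, here is a minimal Python sketch that models one superlane as a list of units, using only the counts and sizes quoted above. The `Unit` class and `hemispheres` helper are hypothetical names for illustration, not part of any Groq toolchain. The sketch checks that the units mirror around the Vector Unit and that 20 superlanes with two 5.5 MB Memory Units each add up to 220 MB of on-chip memory.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Unit:
    kind: str
    macs: int = 0          # multiply-accumulate units (Matrix Unit only)
    sram_mb: float = 0.0   # on-chip memory in MB (Memory Unit only)
    alus: int = 0          # ALUs (Vector Unit only)

# Left-to-right order of one superlane, as described in the article.
SUPERLANE = [
    Unit("matrix", macs=320),
    Unit("switch"),
    Unit("memory", sram_mb=5.5),
    Unit("vector", alus=16),
    Unit("memory", sram_mb=5.5),
    Unit("switch"),
    Unit("matrix", macs=320),
]
NUM_SUPERLANES = 20

def hemispheres(lane):
    """Split a superlane into (left half, central unit, right half)."""
    mid = len(lane) // 2
    return lane[:mid], lane[mid], lane[mid + 1:]

left, center, right = hemispheres(SUPERLANE)
assert center.kind == "vector"
assert left == right[::-1]  # the two hemispheres mirror each other

total_sram = NUM_SUPERLANES * sum(u.sram_mb for u in SUPERLANE)
print(f"Total on-chip memory: {total_sram:.0f} MB")  # prints 220 MB
```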

The instruction stream (there is only one) is fed into every component of superlane 0, with 6 instructions for the Matrix Units, 14 for the Switch Units, 44 for the Memory Units, and 16 for the Vector Unit. Every clock cycle, the units perform their operations and move their data to wherever it's going next within the superlane. Each component can send 512 B to, and receive 512 B from, its immediate neighbors.

Once a superlane's operations are complete, it passes its instructions down to the next superlane and receives whatever the superlane above (or the instruction controller) has. Instructions always move vertically between superlanes, while data only moves horizontally within a superlane.
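The vertical flow of instructions can be sketched as a staggered schedule. This is a simplified model resting on an assumption the article doesn't state: each instruction takes exactly one clock cycle to move from one superlane to the next. The instruction names are hypothetical. Under that assumption, superlane k runs instruction i at cycle i + k, so different superlanes work on different instructions of the same program at the same moment.

```python
NUM_SUPERLANES = 20

def schedule(instructions, num_lanes=NUM_SUPERLANES):
    """Return {cycle: [(superlane, instruction), ...]} for a staggered stream.

    Assumes one instruction enters superlane 0 per cycle and every
    instruction moves down one superlane per cycle.
    """
    timeline = {}
    for issue_cycle, instr in enumerate(instructions):
        for lane in range(num_lanes):
            cycle = issue_cycle + lane  # one hop down per cycle
            timeline.setdefault(cycle, []).append((lane, instr))
    return timeline

program = ["read", "matmul", "add", "write"]  # hypothetical instruction names
timeline = schedule(program)

# At cycle 3, superlane 0 runs the 4th instruction while superlane 3 runs the 1st.
for lane, instr in sorted(timeline[3]):
    print(f"cycle 3: superlane {lane} -> {instr}")

last_cycle = max(timeline)
print(f"Last instruction finishes in superlane {NUM_SUPERLANES - 1} at cycle {last_cycle}")
```

The staggering means a program of n instructions occupies the chip for n + 19 cycles in this model, with all 20 superlanes busy once the pipeline fills.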

| | Groq TSP | Nvidia Tesla V100 | Nvidia Tesla T4 |
|---|---|---|---|
| Cores | 1 | 5120 | 2560 |
| Maximum Frequency | 1250 MHz | 1530 MHz | 1590 MHz |
| FP16 TFLOPS | 205 TFLOPS | 125 TFLOPS | 65 TFLOPS |
| INT8 TOPS | 1000 TOPS | 250 TOPS | 130 TOPS |
| Chip Cache (L1) | 220 MB | 10 MB | 2.6 MB |
| Board Memory | N/A | 32 GB HBM2 | 16 GB GDDR6 |
| Board Power (TDP) | 300W | 300W | 70W |
| Process | 14nm | 12nm | 12nm |
| Die Area | 725 mm² | 815 mm² | 545 mm² |
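The comparisons that follow lean on these spec-sheet numbers, so a short Python snippet can recompute the ratios straight from the table. These are peak vendor figures. The ratios describe headline specs, not measured performance. It also confirms that 1,000 TOPS is the 1 quadrillion operations per second mentioned at the top.

```python
# Peak figures copied from the table: (FP16 TFLOPS, INT8 TOPS, TDP in watts)
specs = {
    "Groq TSP":          (205, 1000, 300),
    "Nvidia Tesla V100": (125, 250, 300),
    "Nvidia Tesla T4":   (65, 130, 70),
}

tsp_fp16, tsp_int8, _ = specs["Groq TSP"]
for name, (fp16, int8, watts) in specs.items():
    print(f"{name:18} INT8 TOPS/W: {int8 / watts:5.2f}  "
          f"TSP advantage: INT8 {tsp_int8 / int8:.2f}x, FP16 {tsp_fp16 / fp16:.2f}x")

# 1,000 TOPS expressed in operations per second (1 TOPS = 1e12 ops/s)
print(f"TSP peak INT8: {tsp_int8 * 1e12:.0e} ops/s")
```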

All that makes for a processor that is extremely good at neural network training and inference, and incapable of anything else. To put numbers on it: in ResNet-50 it can perform 20,400 inferences per second (I/S) at any batch size, with an inference latency of 0.05 ms.

Nvidia's Tesla V100 can perform 7,907 I/S at a batch size of 128, or 1,156 I/S at a batch size of one (batch sizes are rarely this low, but the comparison demonstrates the TSP's versatility). Its latency is 16 ms at batch 128 and 0.87 ms at batch one. Clearly, the TSP outperforms Nvidia's closest equivalent card in this workload.
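The throughput and latency figures hang together under a simple assumption: if a device finishes one batch before starting the next, throughput is roughly batch size divided by latency. The sketch below applies that rule to the reported ResNet-50 numbers. Treating the TSP figure as batch one is an assumption, since the article only says its throughput holds at any batch size. Real systems can overlap batches, so this is a sanity check, not a model of either chip.

```python
def estimated_ips(batch_size, latency_ms):
    """Inferences/second if batches run back to back with no overlap (assumption)."""
    return batch_size / (latency_ms / 1000.0)

# (device, batch size, latency in ms, reported inferences/second) from the text
reported = [
    ("Groq TSP",   1,   0.05, 20_400),
    ("Tesla V100", 128, 16.0,  7_907),
    ("Tesla V100", 1,   0.87,  1_156),
]

for device, batch, latency, ips in reported:
    est = estimated_ips(batch, latency)
    print(f"{device:10} batch {batch:3}: estimated {est:8.0f} I/S vs reported {ips:6} I/S")
```

All three estimates land within a few percent of the reported throughput, which suggests the quoted latencies are per-batch figures.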

One of the TSP's strengths is its enormous L1 cache, but that cache is all it has: there is no board memory. If a neural network grows beyond that capacity, or has to handle very large inputs, performance will suffer seriously. Nvidia's cards have gigabytes of board memory that can handle that scenario.
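A back-of-the-envelope way to see the limit is to compare a model's weight footprint against the 220 MB of on-chip memory. This sketch counts weights only, ignoring activations and any compiler overhead, and assumes decimal megabytes. It uses roughly 25.6 million parameters for ResNet-50 alongside a hypothetical 300-million-parameter model.

```python
TSP_SRAM_BYTES = 220 * 10**6  # 220 MB, assuming decimal megabytes

BYTES_PER_PARAM = {"int8": 1, "fp16": 2}

def weights_fit(num_params, dtype):
    """Return (fits, megabytes needed) for the weights alone.

    Activations and buffers are ignored, so a real model needs more headroom.
    """
    needed = num_params * BYTES_PER_PARAM[dtype]
    return needed <= TSP_SRAM_BYTES, needed / 10**6

models = [("ResNet-50", 25_600_000), ("hypothetical 300M-param model", 300_000_000)]
for name, params in models:
    for dtype in ("int8", "fp16"):
        fits, mb = weights_fit(params, dtype)
        print(f"{name}, {dtype}: {mb:.1f} MB -> {'fits' if fits else 'does not fit'}")
```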

This sums up the TSP really well. In specific workloads it's more than twice as powerful as the Tesla V100, but if your workload varies, or if, heaven forbid, you want to do something with more than half precision, you're out of luck. The TSP definitely has a future in areas like self-driving cars, where the volume of input is predictable and the neural network can be guaranteed to fit. There, its spectacular latency (320x lower than the V100's at batch 128, and still about 17x lower at batch one) means the car can respond faster.
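The sketch below recomputes the throughput and latency ratios for each V100 batch size from the ResNet-50 figures quoted earlier, which shows where the "more than twice" and 320x numbers come from and how much they depend on the V100's batch size.

```python
# ResNet-50 figures from the article
tsp_ips, tsp_latency_ms = 20_400, 0.05
v100 = {128: (7_907, 16.0), 1: (1_156, 0.87)}  # batch size: (I/S, latency in ms)

for batch, (ips, latency_ms) in v100.items():
    print(f"V100 batch {batch:3}: TSP throughput {tsp_ips / ips:5.1f}x higher, "
          f"latency {latency_ms / tsp_latency_ms:5.1f}x lower")
```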

The TSP is presently available to select customers as an accelerator within the Nimbix Cloud.
