You’ve just bought a new CPU or graphics card, and fired it up in your PC. It seems to run pretty cool, so you try a bit of overclocking. The GHz climbs higher and higher, and it looks like you’ve got yourself something special. It’s surely not supposed to be like this?

So you rush to the internet to share your excitement at hitting the silicon jackpot, and within a few posts, somebody proclaims that you’ve got yourself a binned chip.

Now, if you’re picturing an engineer rummaging about in a trash can and proudly pulling out a golden ticket, then you really need to read this explainer! Welcome to the magical world of processor fabrication and chip binning.

Wafers to die for

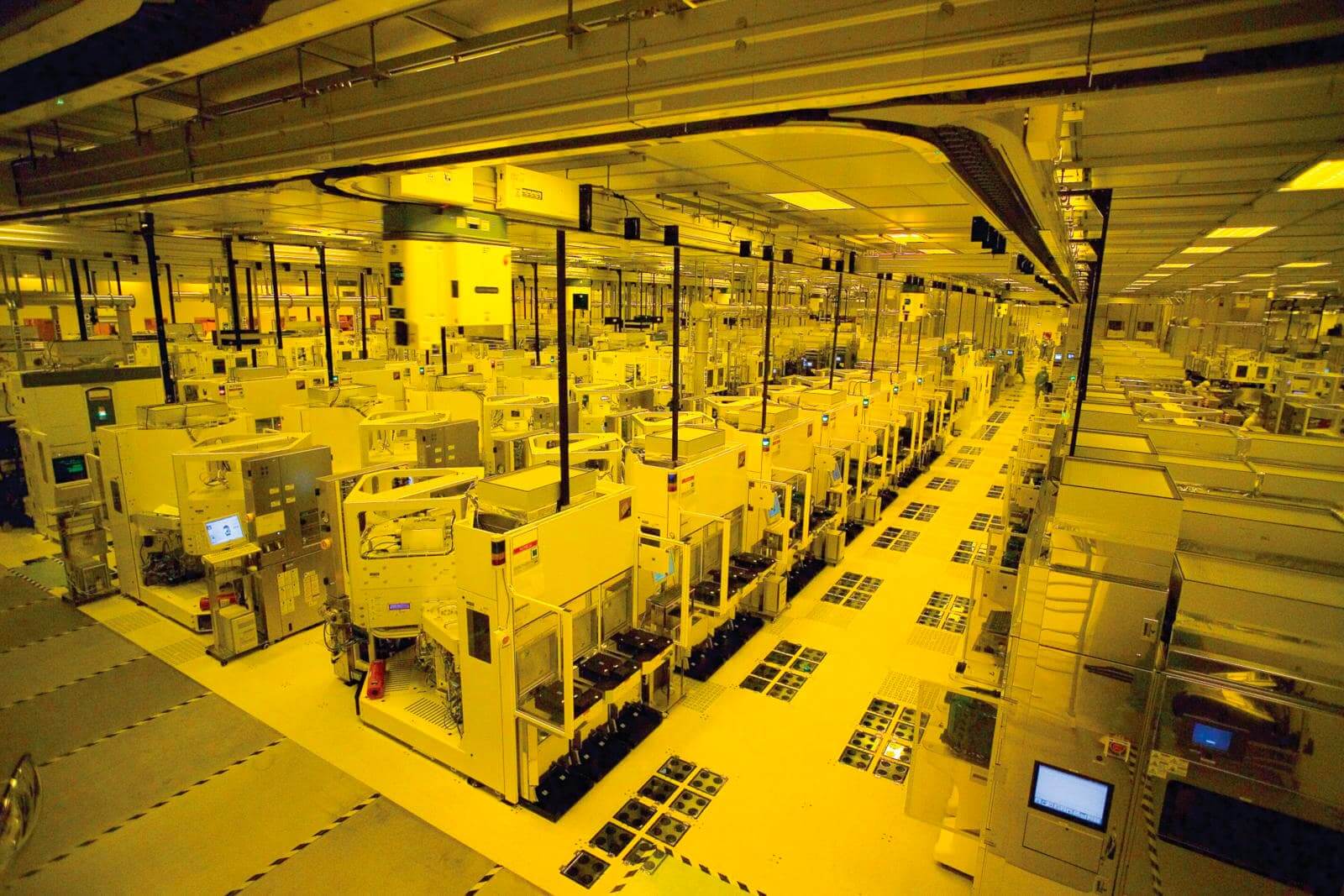

All chips are made from discs of ultra-pure silicon, layered with metals, insulators, and semiconducting materials, whether the end product is a standard CPU, a specialized graphics processor, or DRAM destined to become system memory. The whole process is hugely complex, and the manufacturing plants required to build the latest chips in huge volumes cost billions of dollars.

These discs are known as wafers and the likes of Intel, GlobalFoundries, and TSMC churn out millions of them every year. The highest quality tools are needed to ensure that the final product matches the ultra-accurate plans from the engineers who designed the chips.

To keep everything as near to perfection as it can be, factories’ production areas are slightly pressurized to keep airborne bacteria and dust particles out of the rooms. Workers wear protective gear so that as few of their skin cells and hairs as possible can enter the machinery.

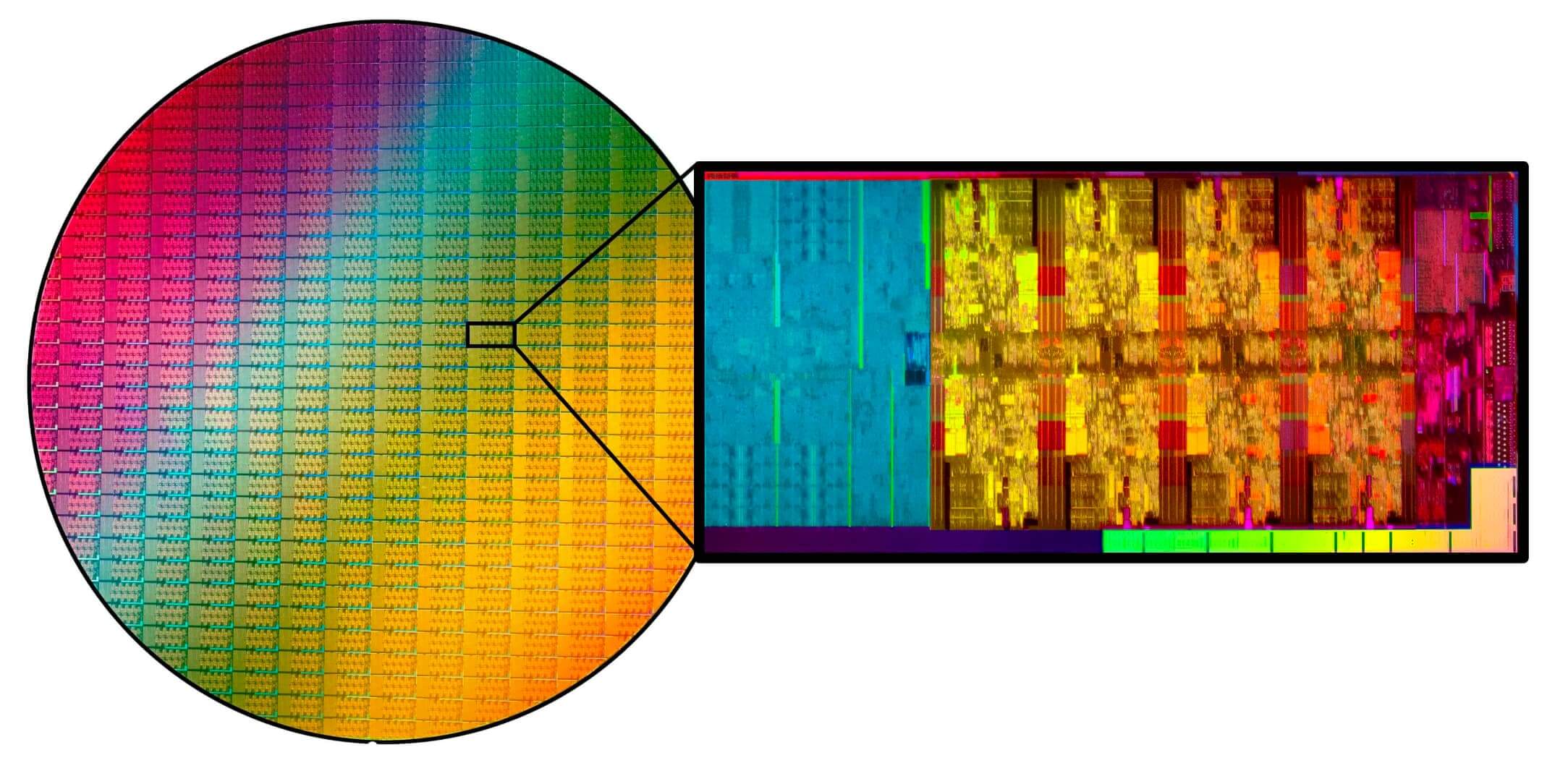

A finished wafer is a thing of beauty, and incredibly valuable, too. Each one costs thousands of dollars to manufacture, and the whole fabrication process, from silicon ingot to finished product, takes months. Every chip (also known as a die) that can be cut from the disc and sold is vital to recouping the money spent making it.

To get them out, the wafer is sliced up using a diamond saw, but a reasonable percentage of it is totally scrap, as chips along the edge just aren’t complete. Anywhere from 5 to 25% of the wafer (the amount depends a lot on the size of the chip) will get thrown away.
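To get a feel for why die size matters so much, here’s a rough geometric sketch in Python. It lays a plain grid of rectangular dies over a circular wafer and counts only those that fit entirely inside the edge. This is a toy model built on our own assumptions: the function names are made up for illustration, and it ignores the gaps left for the saw, the unusable rim of a real wafer, and any shifting of the grid a real fab might do to squeeze in more dies.

```python
import math


def dies_per_wafer(wafer_diameter_mm, die_w_mm, die_h_mm):
    """Count whole dies on a simple grid centred on the wafer (toy model)."""
    r = wafer_diameter_mm / 2
    nx = math.ceil(r / die_w_mm)  # grid columns on each side of the centre
    ny = math.ceil(r / die_h_mm)  # grid rows on each side of the centre
    count = 0
    for i in range(-nx, nx):
        for j in range(-ny, ny):
            # The die spans [i*w, (i+1)*w] x [j*h, (j+1)*h]; it is complete
            # only if its corner farthest from the centre is on the wafer.
            x = max(abs(i * die_w_mm), abs((i + 1) * die_w_mm))
            y = max(abs(j * die_h_mm), abs((j + 1) * die_h_mm))
            if x * x + y * y <= r * r:
                count += 1
    return count


def wasted_fraction(wafer_diameter_mm, die_w_mm, die_h_mm):
    """Fraction of the wafer's area not covered by complete dies."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    used_area = dies_per_wafer(wafer_diameter_mm, die_w_mm, die_h_mm) * die_w_mm * die_h_mm
    return 1 - used_area / wafer_area
```

Comparing `wasted_fraction(300, 10, 10)` with `wasted_fraction(300, 30, 30)` shows the trend: the larger the die, the more of the disc is lost around the rim.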

The remainder then gets mounted on a circuit board package and possibly covered with a heat spreader, to ultimately become the CPU we’re all familiar with.

Core (in)equality

Let’s take a look at the latest processors in Intel’s Core family, the most powerful of which is the Core i9-10900K, with 10 cores and an integrated GPU.

The photo below shows how we usually know and see such PC components, but if we could prise off the heat spreader and use a battery of tools to delve into the guts of the chip, it would look very different.

The actual CPU is a cityscape of logic blocks, SRAM storage, interfaces, and communication buses — in one chip alone, there are billions of individual electronic components, all working in synchronized harmony.

This labelled image highlights some of the key areas. On the far left is the I/O system, containing the DDR4-SDRAM memory, PCI Express, and display controllers. Also packed in there is the system that manages the communication ring for all of the cores. Just above the I/O section is the interface for the system memory and, on the other side of the die, we can see the integrated graphics chip, the GPU. No matter which Intel Core processor you get, these three parts will all be present.

Stuffed between all of these are the CPU cores. Each one is a carbon copy of the other, full of units to crunch numbers, move data around, and predict future instructions. Lying either side of a core are two strips of Level 3 cache (the lower levels are deep inside the core), with each offering 1 MB of high speed storage.

You might think that Intel makes a new wafer for every CPU they sell, but a single ‘i9-10900’ disc will produce chips that can potentially end up in any one of the following models:

| Model | # Cores | # Threads | Base Clock (GHz) | All Core Turbo (GHz) | Turbo Boost (GHz) | Total L3 Cache (MB) | PL1 TDP (W) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| i9-10900K | 10 | 20 | 3.7 | 4.8 | 5.1 | 20 | 125 |
| i9-10900KF | 10 | 20 | 3.7 | 4.8 | 5.1 | 20 | 125 |
| i9-10900 | 10 | 20 | 2.8 | 4.5 | 5.0 | 20 | 65 |
| i9-10900F | 10 | 20 | 2.8 | 4.5 | 5.0 | 20 | 65 |
| i9-10900T | 10 | 20 | 1.9 | 3.7 | 4.5 | 20 | 35 |
| i7-10700K | 8 | 16 | 3.8 | 4.7 | 5.0 | 16 | 125 |
| i7-10700KF | 8 | 16 | 3.8 | 4.7 | 5.0 | 16 | 125 |
| i7-10700 | 8 | 16 | 2.9 | 4.6 | 4.7 | 16 | 65 |
| i7-10700F | 8 | 16 | 2.9 | 4.6 | 4.7 | 16 | 65 |
| i7-10700T | 8 | 16 | 2.0 | 3.7 | 4.4 | 16 | 35 |
| i5-10600K | 6 | 12 | 4.1 | 4.5 | 4.8 | 12 | 125 |
| i5-10600KF | 6 | 12 | 4.1 | 4.5 | 4.8 | 12 | 125 |
| i5-10600 | 6 | 12 | 3.3 | 4.4 | 4.8 | 12 | 65 |
| i5-10600T | 6 | 12 | 2.4 | 3.7 | 4.0 | 12 | 35 |
| i5-10500 | 6 | 12 | 3.1 | 4.2 | 4.5 | 12 | 65 |
| i5-10500T | 6 | 12 | 2.3 | 3.5 | 3.8 | 12 | 35 |
| i5-10400 | 6 | 12 | 2.9 | 4.0 | 4.3 | 12 | 65 |
| i5-10400F | 6 | 12 | 2.9 | 4.0 | 4.3 | 12 | 65 |
| i5-10400T | 6 | 12 | 2.0 | 3.2 | 3.6 | 12 | 35 |

The ‘Base Clock’, measured in GHz, is the lowest guaranteed frequency the chip will run at, no matter what load it is under. The ‘All Core Turbo’ is the maximum frequency that all of the cores can run at together, but not necessarily stay at for very long. The same goes for ‘Turbo Boost’, except that this figure applies to just 2 cores.

PL1 TDP stands for Power Level 1 – Thermal Design Power. It’s how much heat, in watts, the CPU will create while running at its Base Clock under any load. The chip can produce a lot more heat than this, but doing so limits the speeds it can sustain. Motherboard designers may also cap how much power the chip can draw, to keep that heat in check.

Models with codes ending with an F have a disabled GPU; K indicates it has an unlocked clock system (so you can easily overclock it), and T denotes low power. These are just the desktop CPUs — some will end up as Xeons, processors aimed at the professional market, in the form of workstations or small servers.
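The suffix rules are simple enough to write down as a tiny Python lookup. This is purely an illustrative sketch: `decode_suffix` and the `SUFFIX_MEANINGS` table are names we’ve made up here, and it only knows the three letters described above.

```python
# Meanings of the desktop suffix letters described in the text above.
SUFFIX_MEANINGS = {
    "K": "unlocked clock system",
    "F": "GPU disabled",
    "T": "low power",
}


def decode_suffix(model):
    """Return the meanings of the trailing letters of a model code."""
    letters = ""
    # Walk backwards from the end, collecting letters until a digit is hit.
    for ch in reversed(model):
        if not ch.isalpha():
            break
        letters = ch + letters
    return [SUFFIX_MEANINGS[c] for c in letters if c in SUFFIX_MEANINGS]
```

For instance, `decode_suffix("i9-10900KF")` reports both the unlocked clock system and the disabled GPU, while a plain `"i9-10900"` returns an empty list.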

So that’s 19 models from just one design — how and why does a single chip end up becoming so many different types?

It’s an imperfect world

As incredible as chip fabrication plants are, neither they nor the technology and materials used are 100% perfect. There will always be some nano-scale motes of detritus, either within the plant or deep inside the raw silicon and metals used. No matter how hard they try, manufacturers can’t make them totally clean and pure.

And when you’re trying to build components so small that only high-powered electron microscopes let you see them, nothing behaves quite as it should. Down in the nanometer world, quantum behaviour becomes far more noticeable, and randomness, noise, and other glitches do their best to upset the delicate game of chip-Jenga. All of these issues conspire against processor makers, and the end results are classed as defects.

Not all defects are serious: some just cause a specific section of the chip to run hotter than it should. But if a defect is really bad, an entire section might be completely junk. The first thing manufacturers do is scan the wafers to find the defects.

Machines dedicated to hunting out these problems are used after a wafer has been fabricated, but before it’s sliced up into the individual chips. The dies or entire wafers that show up as having problems are flagged, so they can be set aside for further examination.

But even these steps aren’t going to catch every minor blemish and glitch, so after the silicon pieces are cut from the wafer and mounted onto their packages, every one of them goes off for even more testing.

Not all bins store rubbish

When Intel and others sit down to check the quality of their processors, they set the chips up to run with a set voltage and at a certain clock speed. While the die goes through a range of benchmarks designed to stress all of its various sections, the amount of electrical power consumed and heat produced are carefully measured.

What they will find is that some chips run exactly as required, whereas others are better or worse.

Some chips may need a higher voltage to be fully stable, other chips’ insides may produce too much heat, and likely some simply won’t reach the required standards full stop.

Similar tests are run on the processors identified as having defects, but before this is carried out, extra checks are performed to see which sections of the chip still work and which bits are scrap.

The end result of this is that the useful output of a wafer, called its yield, is a range of dies that can be categorized on the basis of their functioning parts, stable clock frequencies, required voltage, and heat output. The name for this sorting procedure? Chip binning.

No dies are actually thrown into large plastic bins — the phrase comes from statistics, where a distribution of numbers can be organized into groups called bins. For example, population surveys about age distribution might use the bins 0 to 5 years old, 6 to 10, 11 to 16, and so on.

The same is done for wafers, and in the case of our i9-10900K example, some of the bins would be for number of working cores, clock frequency range in which the CPU is stable, and heat output at a certain clock.
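The statistical sense of ‘bin’ is easy to show in a few lines of Python, using the age groups mentioned above. Treat it as a minimal sketch: the catch-all ‘17+’ group and the sample ages are our own inventions for illustration, and `age_bin` is a made-up helper name.

```python
from bisect import bisect_left
from collections import Counter

# Upper edge (inclusive) of each age bin from the example in the text.
UPPER_EDGES = [5, 10, 16]
# "17+" is an assumed catch-all; the text just says "and so on".
LABELS = ["0-5", "6-10", "11-16", "17+"]


def age_bin(age):
    """Return the label of the bin that an age falls into."""
    return LABELS[bisect_left(UPPER_EDGES, age)]


# Made-up sample data, purely to show the grouping.
sample_ages = [3, 5, 6, 10, 12, 16, 40]
histogram = Counter(age_bin(a) for a in sample_ages)
```

Swap the ages for measured clock speeds or heat output, and the edges for the limits of each product tier, and you have the basic idea behind sorting chips.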

Let’s imagine that a Core i9-10900 chip is thoroughly tested and found to have a couple of serious defects, as indicated above. Two of the cores and the GPU are damaged to a level where they just can’t function properly.

Intel would then disable the kaput sections and flag it up as being a chip for the Core i7-10700 range, specifically an F model. But then it needs to be tested for clock speeds, power, and stability. If the chip hit the required targets it would stay as an i7, but if it couldn’t quite reach those targets, another 2 cores could be disabled and the die used for a Core i5 model instead.
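That decision process can be sketched as a short Python function. To be clear, this is a hypothetical illustration of the logic described above, not Intel’s actual procedure: the `DieTestResult` fields, the tier list, and the ‘rejected’ fallback are all assumptions of ours, and real test criteria are far more detailed.

```python
from dataclasses import dataclass, field


@dataclass
class DieTestResult:
    """Hypothetical summary of one die's test results."""
    working_cores: int
    gpu_works: bool
    # Tiers whose clock, power, and stability targets this die met.
    tiers_passed: set = field(default_factory=set)


# Product tiers from the 10-core design, best first, with cores required.
TIERS = [("Core i9", 10), ("Core i7", 8), ("Core i5", 6)]


def bin_die(result):
    """Place a die in the best tier it qualifies for (illustrative only)."""
    for tier, cores_needed in TIERS:
        has_cores = result.working_cores >= cores_needed
        if has_cores and tier in result.tiers_passed:
            # A dead GPU doesn't scrap the die; it becomes an F model.
            gpu_note = "" if result.gpu_works else ", F model (GPU disabled)"
            return f"{tier}, {cores_needed} cores enabled{gpu_note}"
    return "rejected"  # assumed fallback; not described in the text
```

A die with 8 good cores and a dead GPU that meets the i7 targets comes out as an i7 F model. If it only meets the i5 targets, two more cores are switched off and it lands in the i5 tier instead.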

In the case of the 10th-gen range of Core processors, Intel does have a separate wafer design for the Core i5, i3, and Pentium/Celeron ranges. These start as 6 core chips and then get binned right down into 2 core offerings.

Product demand can often outstrip production capability, hence why the 10 core wafers are used to help fill in orders. Sometimes, perfectly functional dies have sections switched off, just to ensure there is sufficient output from the factories. That does mean it’s a game of silicon lottery as to what die you’re actually getting, when buying a particular model.

All things considered, chip binning massively improves the yield of a wafer because it means that more dies can be utilized and sold. Without it, Intel’s actual rubbish bins would be overflowing with scrap silicon.

Aren’t binned CPUs special?

Like so many terms in computing, chip binning has become synonymous with something other than its original meaning. Online stores sometimes sell hand-picked, special CPUs (those that overclock to an insane level or run cooler than the surface of Pluto) as ‘binned CPUs’. The reality is all chips are binned, simply because they have to be.

Of course, there’s nothing to stop a retailer from binning the chips they buy: binned-binned CPUs, anyone?

AMD and Intel processors have to be purchased in bulk (trays that contain dozens, if not hundreds, of chips), and you could sit down with a test computer and check out each one: overclock or undervolt them, record their temperatures, and so on. The best of the batch could then be sold on as being special, and the retailer could rightly class them as ‘binned CPUs.’ Naturally, all of this extra testing costs time and effort, so the retail price of the product is increased to reflect this.

Are these so-called binned chips extra special in some way? Yes, and no. Every single chip used in your PC, phone, car, and so on has been through some kind of selection process. It’s just another stage in the manufacturing of all microprocessors and DRAM chips. That means your beloved CPU or GPU that runs surprisingly cool or overclocks like mad is just another die, from one of the hundreds of thousands of wafers, churned out by factories around the world.
