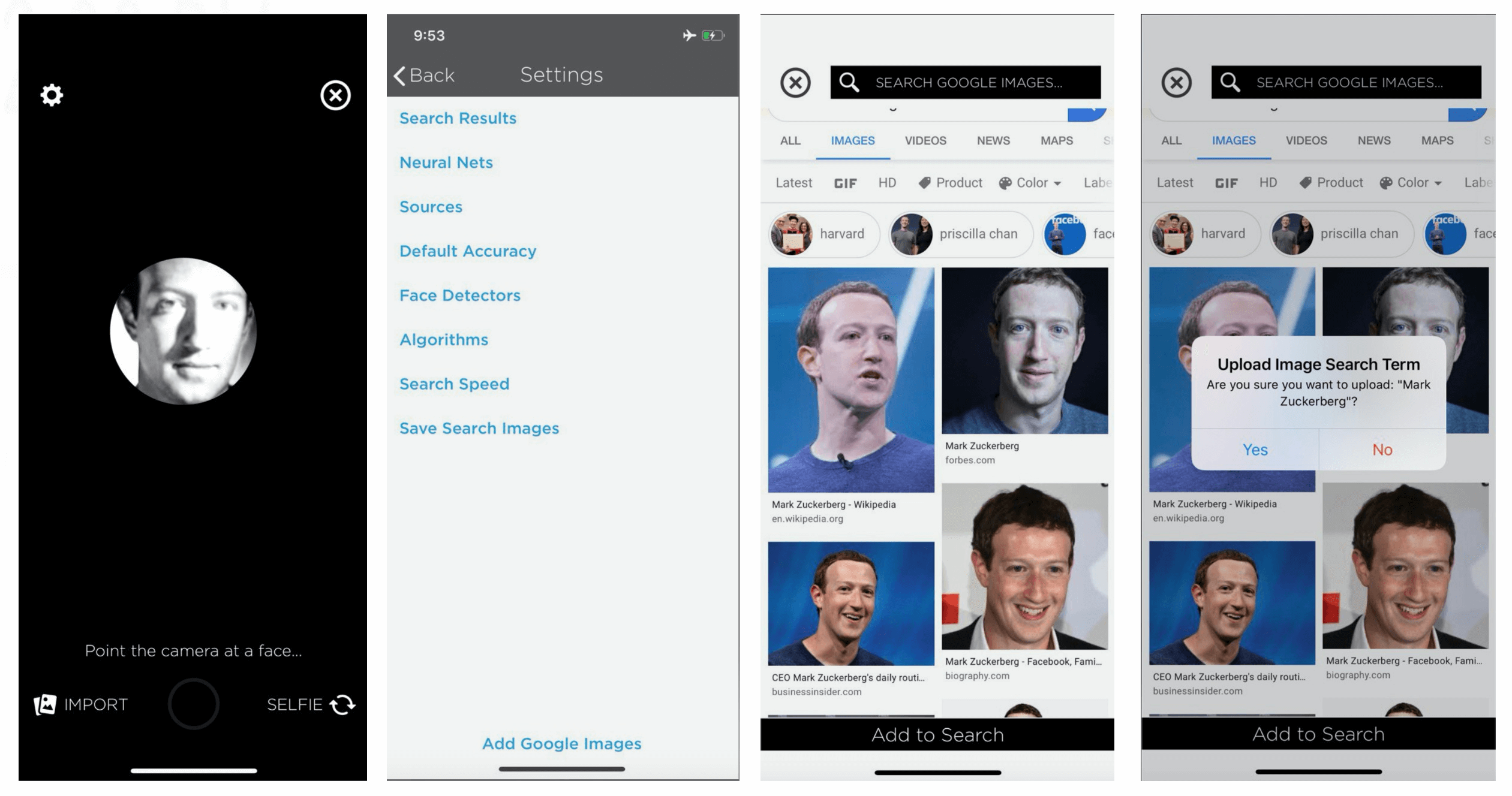

Facepalm: Facial recognition company Clearview AI continues to stir up controversy. This time, a lapse in basic security hygiene has exposed the source code of the company's app, along with secret keys and cloud storage credentials.

Dubai-based cybersecurity firm SpiderSilk recently uncovered a misconfigured Clearview server. Although the repository was password protected, it was set up to allow anyone to create a new account and log into the system, which is precisely what SpiderSilk's security researchers did.

In addition to source code, private keys, and cloud storage credentials, SpiderSilk's Chief Security Officer Mossab Hussein told TechCrunch that the server contained working versions of Clearview's Windows, macOS, iOS, and Android apps, free for the taking. What's more, once downloaded, the apps worked without any security checks, meaning anyone could use them out of the box to search the facial recognition database.

“[We have] experienced a constant stream of cyber intrusion attempts, and have been investing heavily in augmenting our security,” said Clearview CEO Hoan Ton-That in a statement. “We have set up a bug bounty program with HackerOne whereby computer security researchers can be rewarded for finding flaws in Clearview AI’s systems. SpiderSilk, a firm that was not a part of our bug bounty program, found a flaw in Clearview AI and reached out to us. This flaw did not expose any personally identifiable information, search history, or biometric identifiers.”

Ton-That also told TechCrunch that SpiderSilk was attempting to extort his company. However, the security firm shared its email correspondence, which appears to show that it reported the problem to Clearview and turned down a bug bounty reward. Hussein said he refused the bounty because accepting it would have bound him to a non-disclosure agreement (NDA), which he did not believe would be in the public's best interest.

Perhaps even more concerning than the exposed company assets was the discovery of a cache of more than 70,000 videos recorded by a security camera in a Manhattan residential building. The footage shows people entering and exiting the lobby. Ton-That claims the videos came from tests of Clearview AI's Insight Camera prototype, a program that has since been abandoned.

“As part of prototyping a security camera product, we collected some raw video strictly for debugging purposes, with the permission of the building management,” explained the CEO.

The real estate company representing the building did not return calls for comment.

The security lapse is just the latest in a string of controversies involving Clearview AI. In January, New York Times journalists discovered that the company had trained its facial recognition software on images scraped from numerous websites. Several social media platforms then demanded that the company stop scraping their users' profiles. The following month, an "intruder" stole Clearview's entire client list from an unsecured database. Ton-That claimed there was "no compromise of servers, systems, or networks" during that attack. Then in March, Vermont's Attorney General filed a lawsuit against the startup for violating the state's Data Broker Law and Biometric Information Privacy Act.

Image credit: SpiderSilk via TechCrunch