
Amazon Web Services unveiled a comprehensive three-layer GenAI strategy and offerings at re:Invent 2023 that happened to feature the intriguing new Q digital assistant at the top of the stack. And while Q got most of the attention, there were lots of interconnected elements underneath it.

At each of the three layers – Infrastructure, Platform/Tools and Applications – AWS debuted a combination of new offerings and enhancements to existing products that tie together to form a complete solution in the red-hot field of GenAI. Or, at least, that’s what they were supposed to do. However, the volume of announcements in a field that is not widely understood led to considerable confusion about what exactly the company had assembled. A quick skimming of the news from re:Invent reveals divergent coverage, indicating that AWS still needs to clarify its offerings.

Given the luxury of a day or two to think about it, as well as the opportunity to ask a lot of questions, it’s apparent to me now that Amazon’s new approach to GenAI is a comprehensive and compelling strategy – even in its admittedly early days. It is also evident that over the past few years AWS has been introducing a range of products and services that at first glance might not have seemed related, but that were in fact the building blocks for a larger strategy that is now beginning to emerge.

The company’s latest efforts start at its core infrastructure layer. At this year’s re:Invent, AWS debuted the second-generation Trainium AI accelerator chip, which offers a 4x improvement in AI model training workloads over its predecessor. The company also discussed its Inferentia 2 chip, which is optimized for AI inferencing. Together, these two chips – along with the fourth-gen Graviton CPU – give Amazon a complete line of unique processors that it can use to build differentiated compute offerings.

AWS CEO Adam Selipsky also had Nvidia CEO Jensen Huang join him onstage to announce further partnerships between the companies. They discussed the debut of Nvidia’s latest GH200 GPU in several new EC2 compute instances from AWS, and the first third-party deployment of Nvidia’s DGX Cloud systems. In fact, the two even discussed a new version of Nvidia’s NVLink chip interconnect technology that allows up to 32 of these systems to function together as a giant AI computing factory (codenamed Project Ceiba) that AWS will host for Nvidia’s own AI development purposes.

Moving on to Platform and Tools, AWS announced critical enhancements to its Bedrock platform. Bedrock consists of a set of services that let you do everything from picking the foundation model of your choice and deciding how to train or fine-tune it, to determining the levels of access that different people in an organization have, choosing what types of information are allowed and what is blocked (Bedrock Guardrails), and creating actions based on what the model generates.

In the area of model tuning, AWS announced support for fine-tuning, continued pre-training and, most critically, RAG (Retrieval Augmented Generation). All three of these have burst onto the scene relatively recently and are being actively explored by organizations as ways to integrate their own custom data into GenAI applications. These new approaches are important because many companies have started to realize they aren’t interested in (or, frankly, capable of) building their own foundation models from scratch.

On the foundation model side of things, the range of new options supported within Bedrock includes Meta’s Llama 2, Stable Diffusion, and more versions of Amazon’s own family of Titan models. Given AWS’ recent investment in Anthropic AI, it wasn’t a surprise to see a particular focus on Anthropic’s new Claude 2.1 model as well.

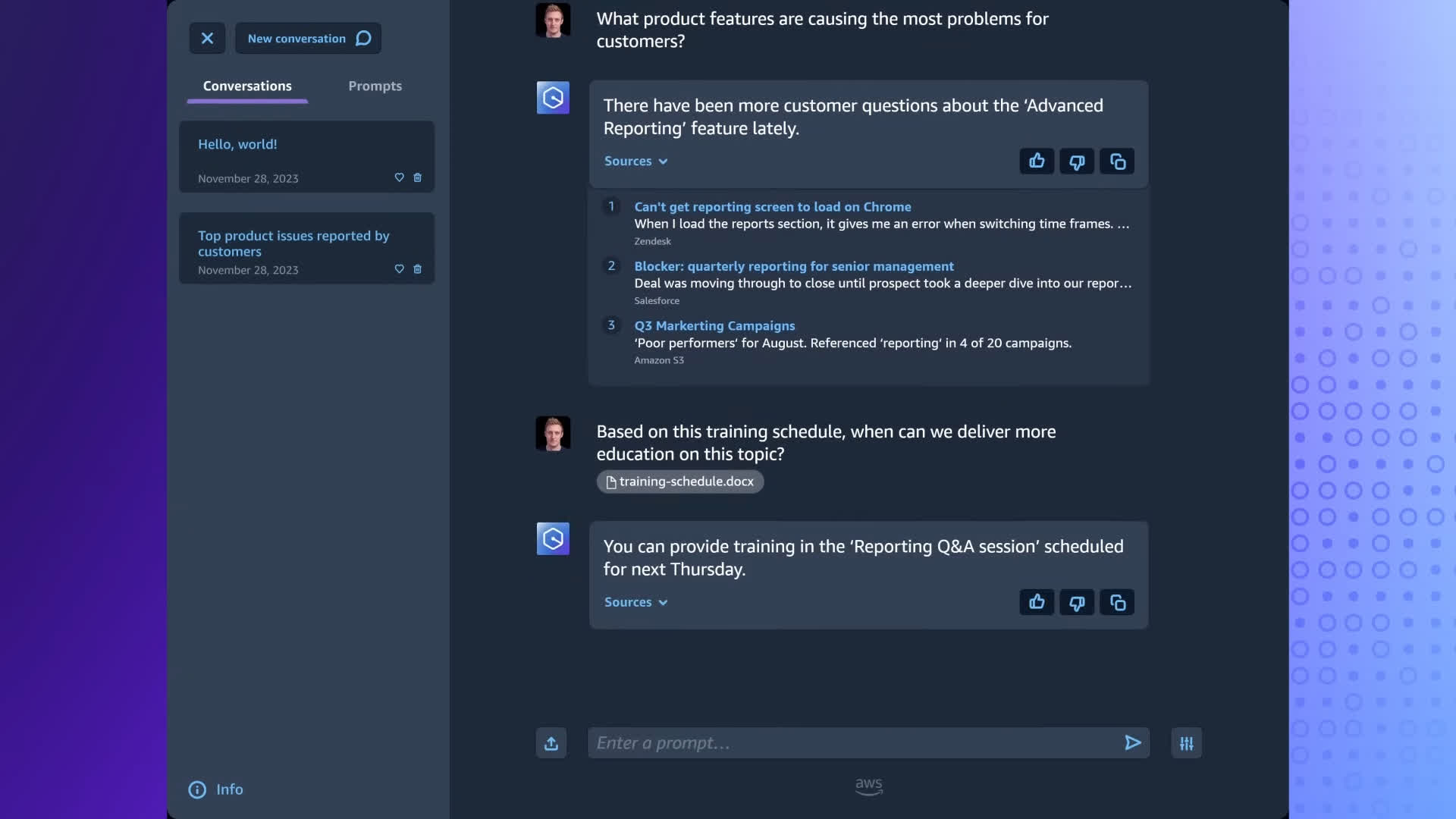

The final layer of the AWS GenAI story is the Q digital assistant. Unlike most of AWS’ offerings, Q can be used as a high-level finished GenAI application that companies can start to deploy. Developers can customize Q for specific applications via APIs and other tools in the Bedrock layer.

What’s interesting about Q is that it can take many forms. The most obvious version is a chatbot-style experience similar to what other companies currently offer. Not surprisingly, most of the early news stories focused on this chatbot UI.

But even in this early iteration, Q can offer a variety of functionalities. For example, AWS showed how Q could enhance the code-generating experience in Amazon’s CodeWhisperer, act as a call transcriber and summarizer for the Amazon Connect customer service platform, simplify the creation of data dashboards in Amazon QuickSight analytics, and serve as a content generator and knowledge management guide for business users. Q can also utilize different underlying foundation models for different applications. That makes it a more extensive and capable type of digital assistant than those offered by some competitors, but it also makes it a lot harder for people to get their heads around.

Digging deeper into how Q works and its connections to the other parts of AWS, it turns out that Q was built via a set of Bedrock Agents. This means that companies looking for more of an “easy button” solution for getting GenAI applications deployed can use Q as is.

Companies that are interested in building more customized solutions, on the other hand, can create some of their own Bedrock Agents. This concept of pre-built versus customizable capabilities also applies to Bedrock and Amazon’s SageMaker tool for building custom AI models. Bedrock is for those who want to leverage a range of already built foundation models, while SageMaker is for those who want to build models of their own.

Taking a step back, you can begin to appreciate the comprehensive framework and vision AWS has assembled. However, it’s also clear that this strategy is not the most intuitive to grasp. Looking ahead, it’s crucial that Amazon refine its messaging to make its GenAI narrative more accessible and understandable to a broader audience. This would enable more companies to leverage the full range of capabilities that are currently obscured within the framework.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech.
