Today we’re revisiting AMD’s budget-oriented Ryzen 3 3300X. This Ryzen 3 CPU thoroughly impressed us when it launched about two months ago, but since that day-one review we’ve barely looked back to see where it stands. To put that in perspective, a lot has happened in the past few months, both in and outside of tech. Component supply has been tight, and you may recall that at the time AMD had decided to drop support for its upcoming Zen 3 processors on 400-series motherboards.

If you followed that drama you’ll know we spent quite a bit of time and energy fighting AMD on that one and eventually helped get them to walk back that decision.

By the time that was resolved, we had moved on to Intel 10th-gen Core testing, then Z490 motherboards, which morphed into B550 testing, though that’s largely on hold until stock arrives.

The plan was always to go back for some detailed Ryzen 3 testing, and we began laying the groundwork for this GPU scaling content many weeks ago. The timing hasn’t been great though: with stock virtually non-existent, we’ve been sitting on the data. Ultimately we decided not to delay the blue bar graphs any longer, so when things eventually return to normal you’ll be armed with even more information.

For this test we’re comparing the 3300X against the Ryzen 5 3600 and Ryzen 5 2600. In previous GPU scaling benchmarks we also tested the 3900X and 9900K, but this time we’ve left those out to declutter the graphs, though we’ll call on some of that data towards the end of the article.

Before we get to it, some basic test notes… we paired the CPUs with G.Skill’s FlareX DDR4-3200 CL14 memory and a Corsair H115i Pro cooler. Auto-overclocking features such as MCE and PBO have been disabled, and the memory hasn’t been tuned beyond loading XMP, so this is out-of-the-box performance with a quality AIO and low-latency memory.

Do note the test setup for this article is a bit different to what we’ve used for our recent CPU reviews and that will influence the results. We’re using just two memory modules for single rank operation, whereas we’ve used four modules in the most recent CPU reviews for dual rank memory. Some of the areas used for testing the games have also been updated in those articles.

Benchmarks

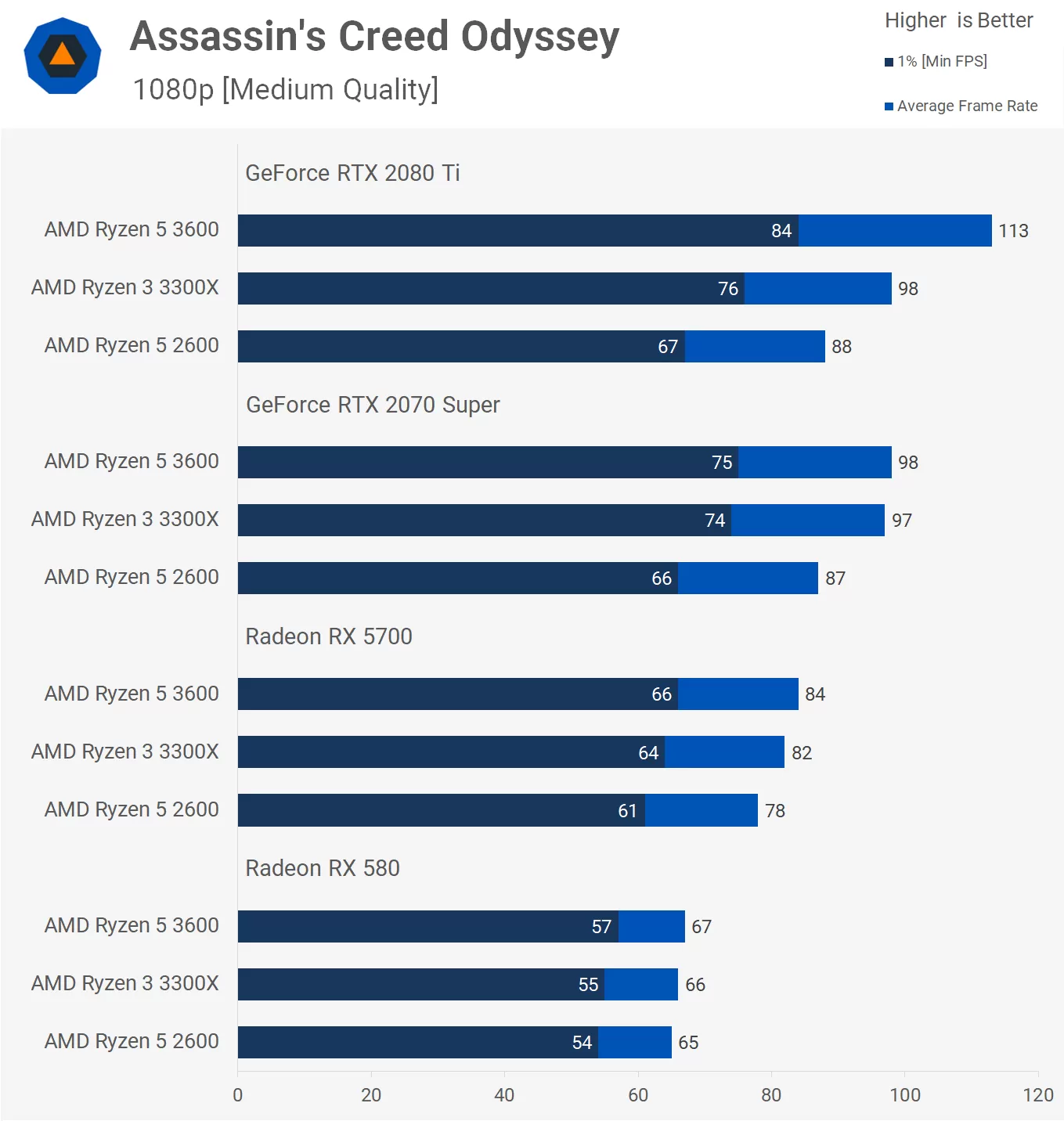

Starting with Assassin’s Creed Odyssey using the medium quality preset at 1080p, we find that the 3300X is positioned between the 2600 and 3600 when using the RTX 2080 Ti, with the R5 3600 around 15% faster than the 3300X. However, dropping down to the RTX 2070 Super eliminates that margin almost entirely: the 3600 is now just a single frame faster. The 3300X was still 11% faster than the 2600, though, a decent little lead over the 2nd-gen part.

By the time we hit the Radeon RX 5700 though, we’re looking at very similar performance for all three processors. The 3300X is only 5% faster than the 2600 and ~2% slower than the 3600 as we become more GPU limited.

Once we drop down to the Radeon RX 580, we’re entirely bound by the GPU and the results we see here are largely within the margin of error. Needless to say, you won’t spot any differences between the 3600 and 3300X when gaming under these conditions.

If we increase the quality preset a few notches to the max setting, unsurprisingly the results become far more GPU bound and homogeneous. Remember we’re still only testing at 1080p. It won’t matter what kind of graphics card you have, RX 580, 5700, 2070 Super or even the 2080 Ti, you’ll be looking at virtually identical frame rates with any of these CPUs.

Sticking with the same game, here are the results at 1440p using the medium preset. This data is significantly more GPU limited with the GeForce GPUs. The 3300X and 3600 delivered identical, or virtually identical, performance using all four GPUs, making them a little faster than the 2600.

Finally, we have the Assassin’s Creed Odyssey results using the ultra high preset at 1440p. No surprises here: we’re almost entirely GPU bound, and within this group of CPUs there’s no difference in gameplay performance. If you plan on playing this and other similar games with maximum quality settings, you’re mostly going to find the system is bound by GPU performance.

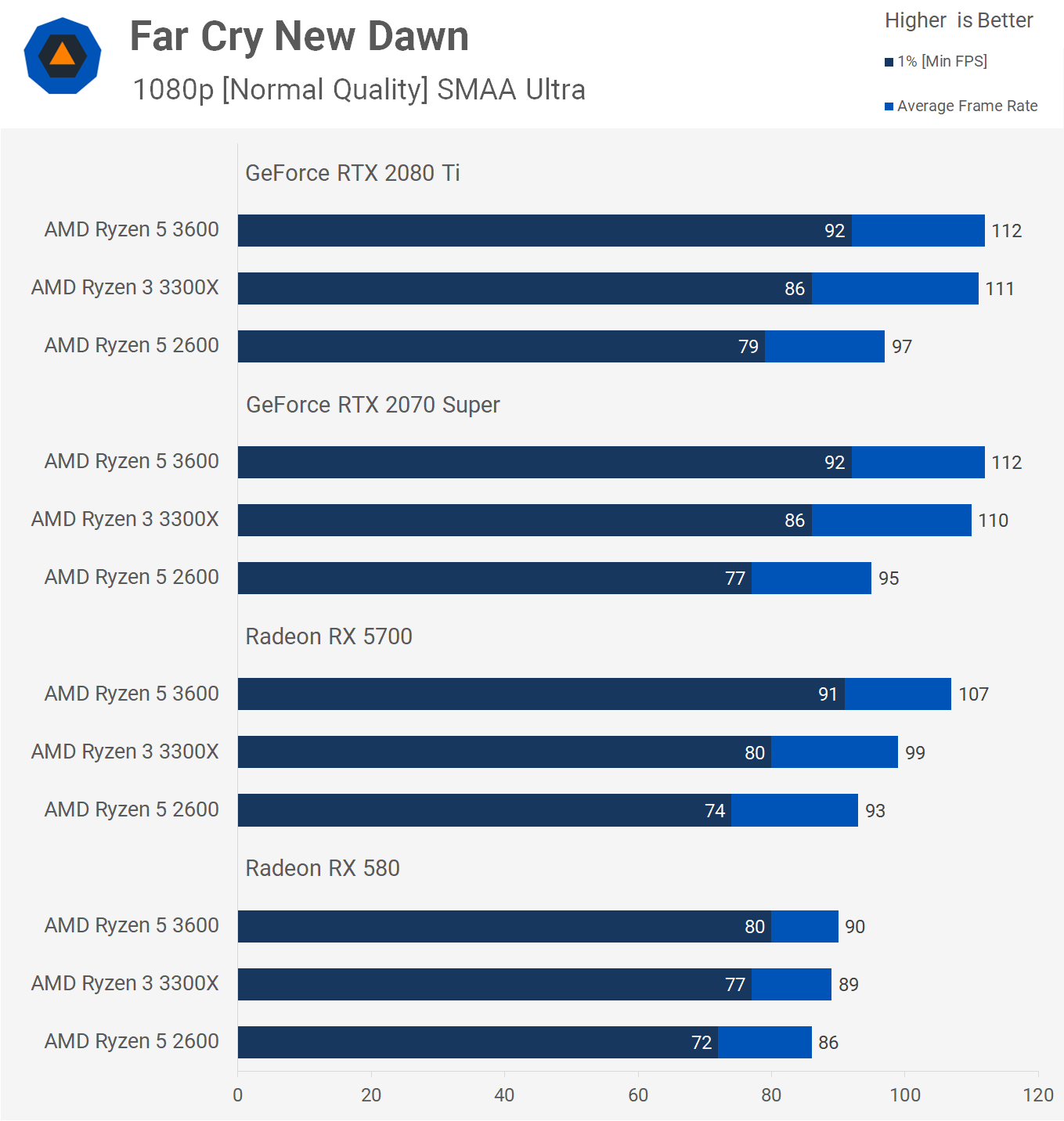

Moving on to Far Cry New Dawn, here we find similar average frame rate performance between the 3300X and 3600, but the 6-core processor does deliver better 1% low results, suggesting we’re getting slightly more consistent performance with the higher-end CPU. It’s also interesting to note that although slower for both the average and 1% low performance, the Ryzen 5 2600 sees just a 23% variation between the two metrics whereas the 3300X sees up to a 29% difference, suggesting once more that the experience won’t be as smooth.

Interestingly, with the Radeon RX 5700 the 3300X’s average frame rate falls away from the 3600’s. We’ve found in the past that AMD’s drivers produce more overhead, so perhaps that’s what we’re seeing here. By the time we drop down to the RX 580 things start to come together, but even here we still see that the increased latency of the Zen+ architecture is a bit of a problem for the 2600.

We find more interesting Far Cry New Dawn results at 1080p when increasing the preset quality to ‘Ultra’. This appears to increase CPU load and the 3300X is now up to 10% slower than the 3600 when comparing average frame rates.

We also see a substantial drop off for the 2600 when comparing 1% low data. The performance trends for the RX 5700, 2070 Super and 2080 Ti look similar and it’s not until we drop down to the more modest RX 580 that we see similar frame rates.

Now for the 1440p results using the normal quality settings. Compared to what we saw at 1080p, there’s a smaller gap between the 3300X and 3600 when using the RX 5700, and we also see a significant difference in performance between the RX 5700 and RX 580. Of course, this is because the game is more GPU limited at the higher resolution.

The 1440p ultra quality data shows very little difference between the 3300X and 3600 using even the RTX 2080 Ti. At 1080p the 3600 was up to 10% faster, here that margin has been halved to 5%.

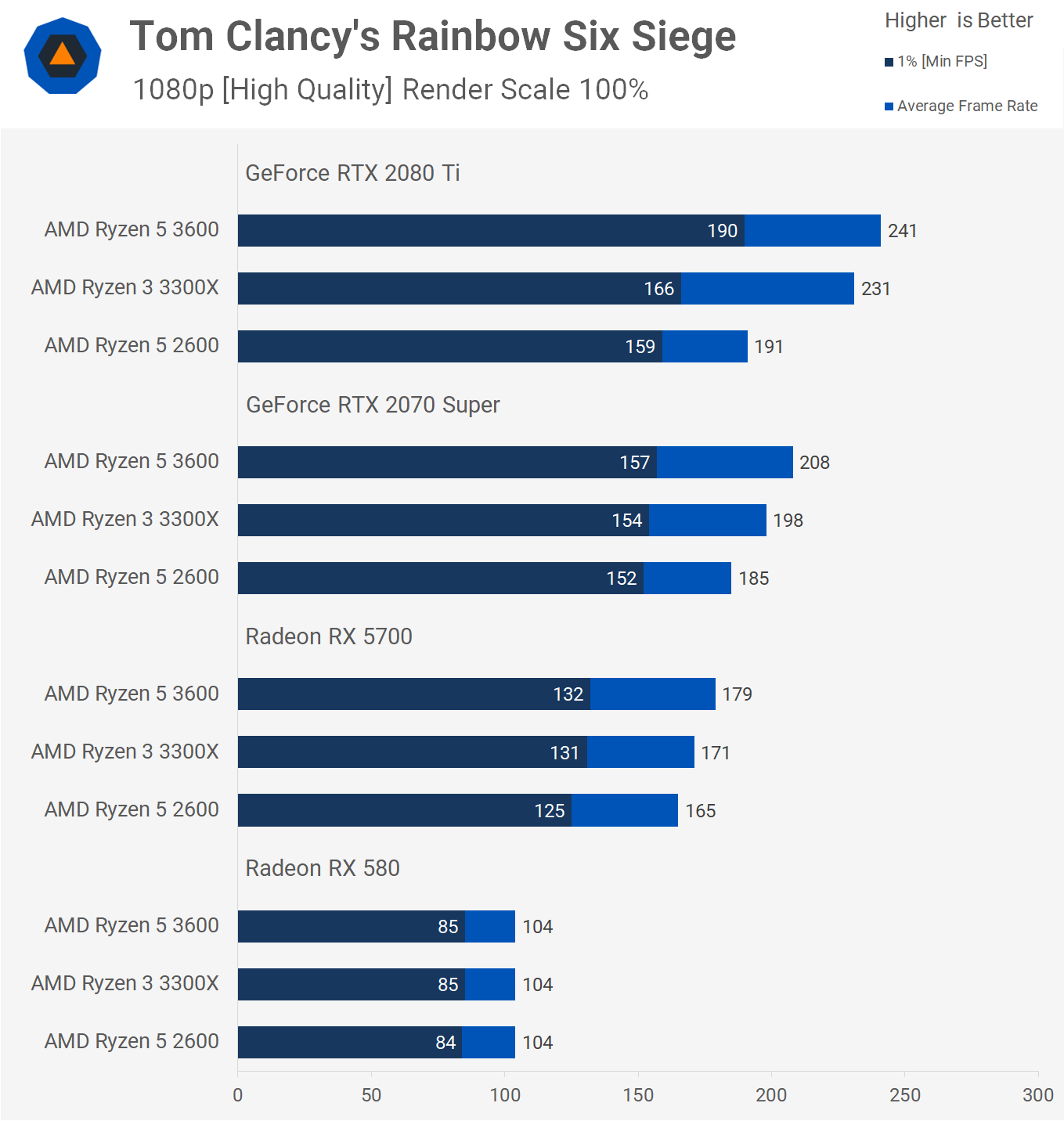

Tom Clancy’s Rainbow Six Siege at 1080p with medium quality settings (labeled as ‘high’ in this game) gives us some more interesting data. With the RTX 2080 Ti the 3600 is just 4% faster than the 3300X when comparing averages, but 14% faster when comparing 1% lows. As a result, the 3600 sees a 27% disparity between its average and 1% low figures, while the 3300X sees almost a 40% disparity, suggesting that the 6-core processor is delivering a smoother, more consistent gaming experience.
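The article doesn’t spell out how the average-to-1% low disparity is calculated. As a rough sketch, assume that the disparity is the percentage by which the 1% low falls short of the average. Also assume the common definition of the 1% low: the frame rate over the slowest 1% of frames. Under those assumptions, both metrics could be derived from a frame-time log as shown below. The function names are hypothetical, and this is not necessarily the method or tool used for these benchmarks.

```python
from statistics import mean


def average_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate: total frames divided by total elapsed seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Assumed definition: frame rate over the slowest 1% of frames."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # always use at least one frame
    return 1000.0 / mean(slowest_first[:count])


def disparity_pct(avg_fps: float, low_fps: float) -> float:
    """Assumed metric: how far the 1% low sits below the average, in percent."""
    return (avg_fps - low_fps) / avg_fps * 100.0
```

A larger disparity means the slowest frames stray further from the typical frame, which is why the text reads a bigger gap as a less smooth experience. Real capture tools may use percentiles of frame time instead, so the exact figures can differ.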

That difference is somewhat neutralized with the slower RTX 2070 Super and in fact here the 3300X produces a tighter grouping of frames due to a stronger GPU bottleneck. We see this effect continue as we lower the GPU horsepower.

If we increase the graphics preset to ultra while still maintaining a 1080p resolution, we find with GPUs such as the RTX 2070 Super, Radeon RX 5700 and RX 580 that the 3300X and 3600 deliver the same level of performance as both are heavily GPU limited.

We see a big hit to performance simply by increasing the resolution: at 1440p using the ‘High’ quality setting, the RTX 2080 Ti drops to under 200 fps with the Ryzen 5 3600. As a result we see very little performance difference between the tested processors. The 3600 is a few percent faster with the RTX 2080 Ti, and performance is identical with the 2070 Super.

For those of you gaming at 1440p with ultra quality settings, here’s more evidence that the CPU matters far less than the graphics card powering your game. It’s impossible to distinguish between any of the CPUs used here, and the 3300X was capable of maintaining well over 100 fps at all times with the RTX 2080 Ti.

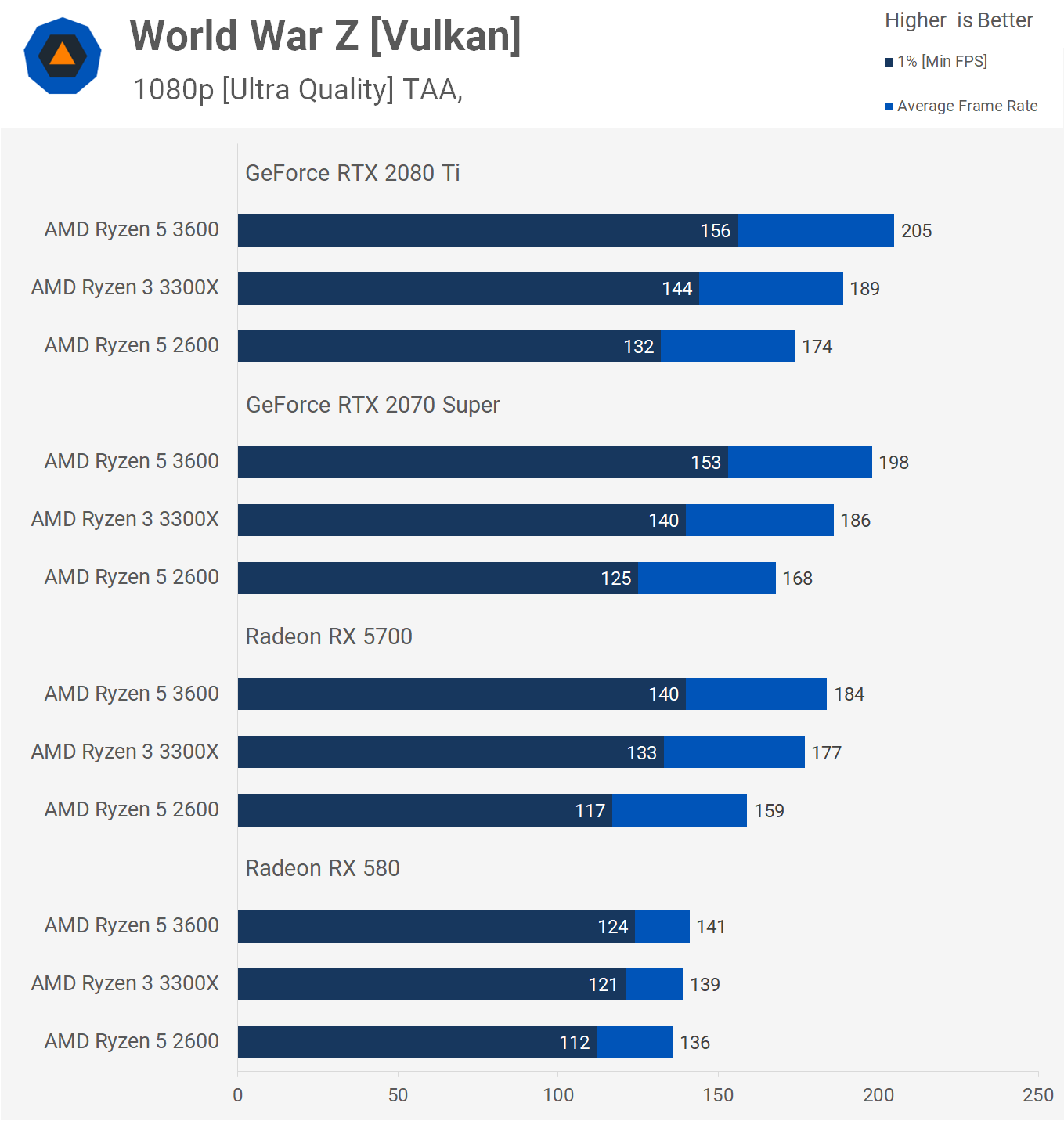

The last game we’re checking out is World War Z. Testing at 1080p with the medium quality preset sees virtually no performance difference between the 2080 Ti, 2070 Super and even the RX 5700, while the RX 580 isn’t far behind. Here we find a scenario that is almost entirely CPU limited.

In all this data the 3300X was up to 11% slower than the 3600 with similar margins between the average and 1% low data. So it’s important to note that while the 3600 is clearly faster, the gaming experience wasn’t any better, at least not to the degree that you’d be able to notice.

Even when stepping up to the ultra quality settings we see virtually the same results.

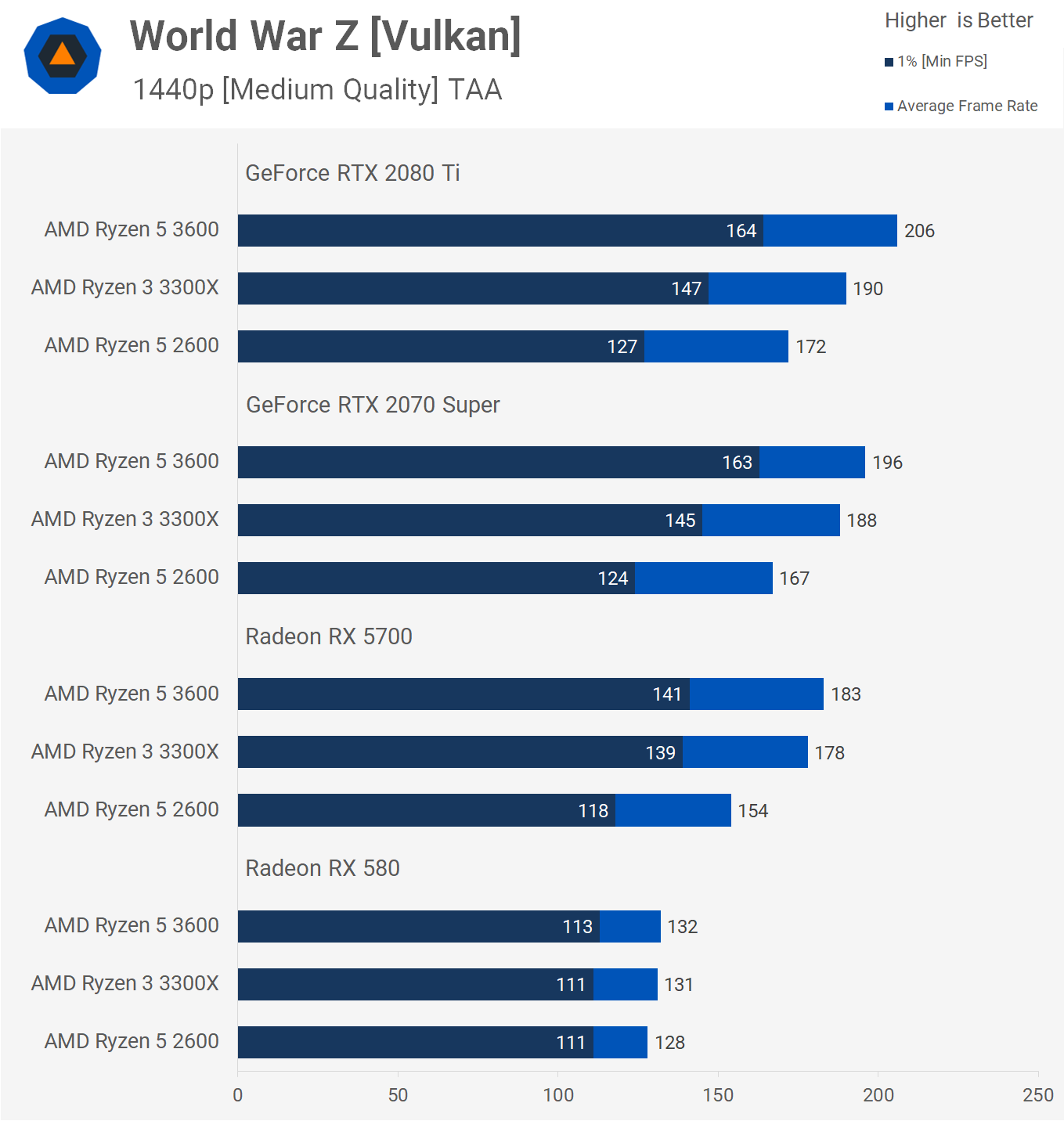

The 1440p medium quality results aren’t that different either, though we start to see the 3300X and 3600 come together with the Radeon RX 5700.

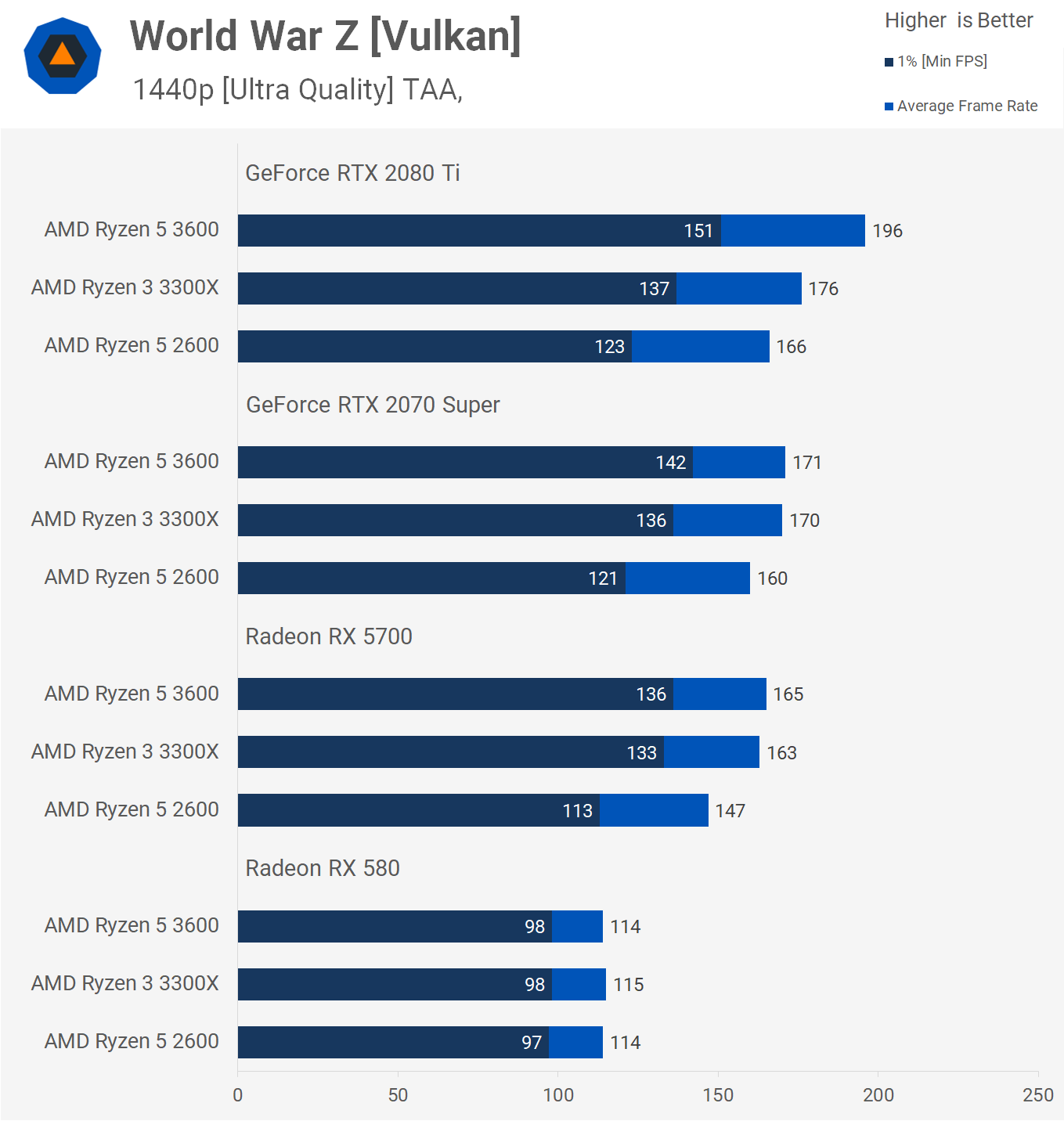

It’s not until we test at 1440p with ultra graphics that the game starts to become a little more GPU bound, so we’re now seeing similar performance from the 3300X and 3600 with the RTX 2070 Super.

Performance Summary

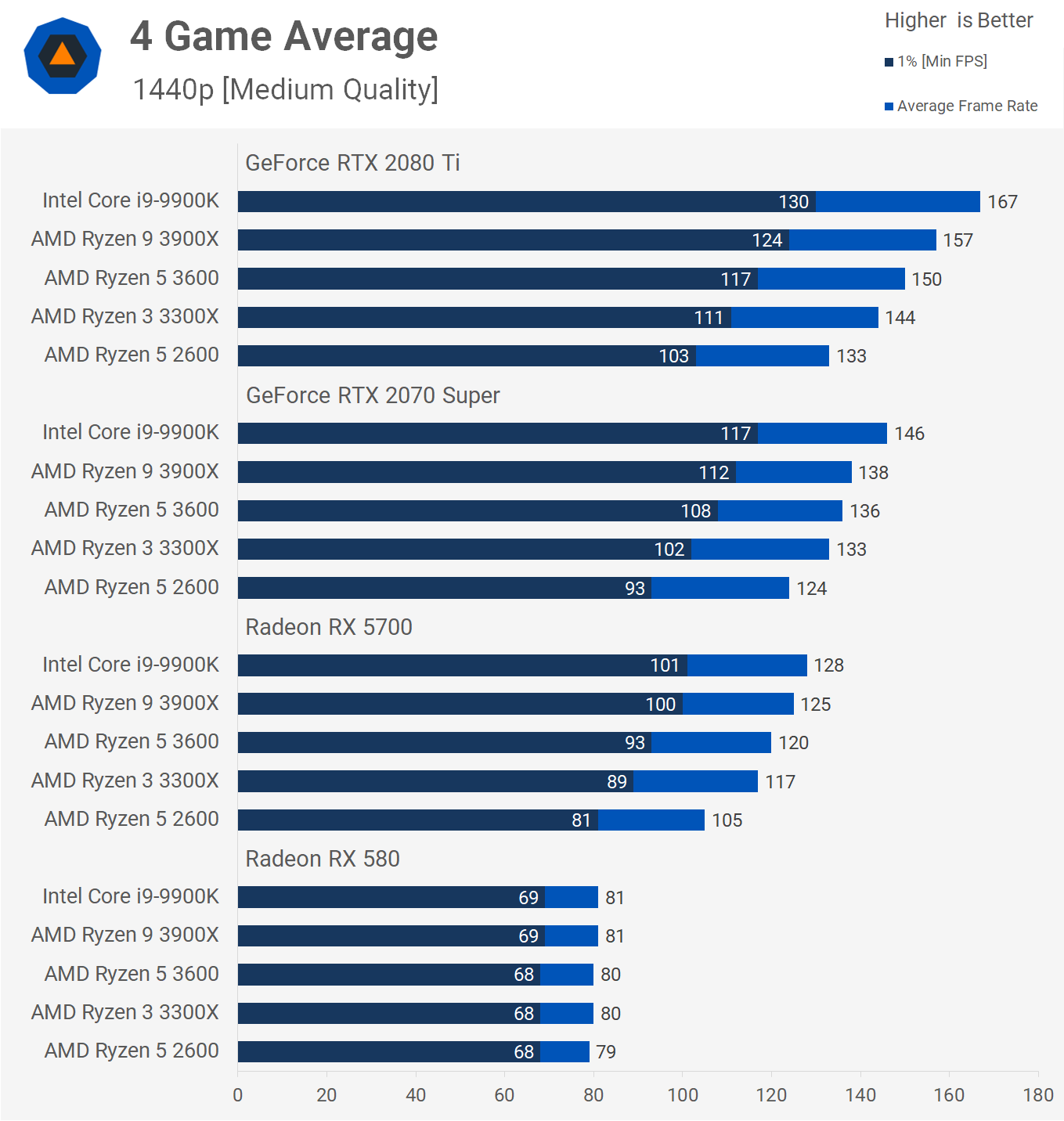

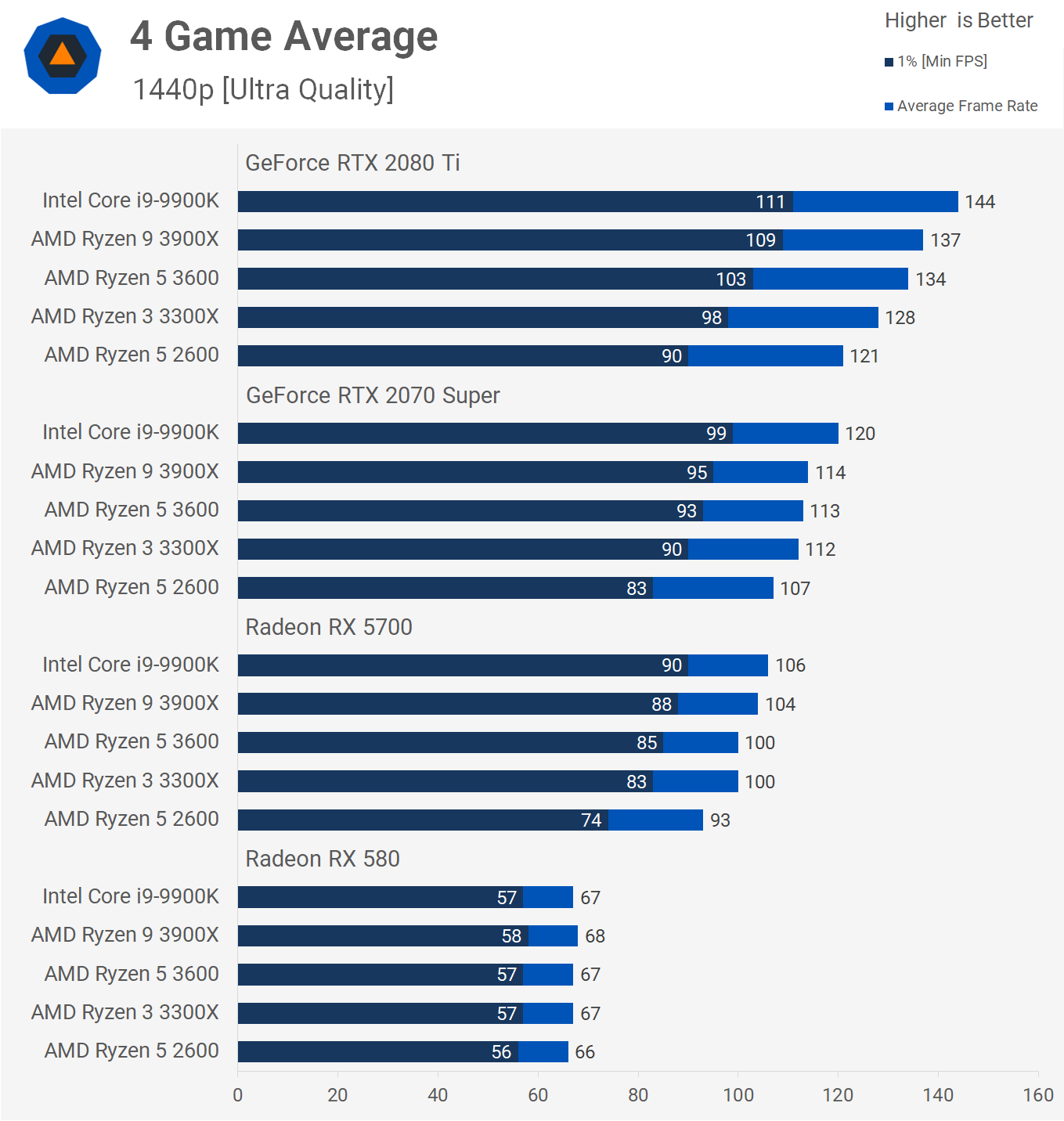

We know four games isn’t a lot, but it took almost 300 benchmarks just to add the Ryzen 3 3300X to this comparison, and the games used should cover most performance scenarios. With that in mind, let’s see what the average performance looks like. For additional reference, we’ll be adding the Ryzen 9 3900X and Core i9-9900K to the following graphs.

At 1080p with medium graphics settings, when using the fastest available RTX GPU today, the Ryzen 5 3600 is ~11% faster than the 3300X when comparing 1% low data. That’s a reasonable performance uplift but when you consider we’re talking about a 50% increase in core count, it’s really not that significant. Moreover, the 3300X was averaging 120 fps at all times in this testing.

Now if you’re not using an RTX 2080 Ti and instead have a $500 graphics card like the RTX 2070 Super, then that 11% margin shrinks to just 4%, or 6% with a Radeon RX 5700. With a more modest GPU like a sub-$200 RX 580, there’s no margin to speak of.

If we increase the games’ resolution to 1440p while still playing on medium settings, we see that the 3600 is now just 5% faster than the 3300X with the RTX 2080 Ti. Similar margins are seen with the RTX 2070 Super and RX 5700.

Looking at the ultra quality preset, we see pretty typical scaling as we go down the GPU stack. With the RTX 2080 Ti the 3600 is 8% faster than the 3300X when looking at the 1% low data, then 6% faster with the RTX 2070 Super, and just 5% faster with the Radeon RX 5700.

Finally, here’s a look at the 1440p ultra data, probably the most realistic scenario for someone rocking a high-end graphics card like the RTX 2070 Super or RTX 2080 Ti. With the RTX 2080 Ti we’re looking at just a 5% difference between the 3600 and 3300X. Across the games tested, even the Ryzen 9 3900X’s 1% low performance was just 11% better than the 3300X’s.

Those margins shrink considerably with the RTX 2070 Super, where the 3600 was a mere 3% faster than the 3300X, while virtually no difference can be seen with the Radeon RX 5700.

What We Learned

Two months ago we called the Ryzen 3 3100 and 3300X the new budget champions. Since then Intel has released newer 10th-gen Core chips, including the Core i3 range, but those new parts have failed to dethrone Ryzen 3. The only problem at the moment is that you can buy the Core i3-10100, but you’re unlikely to find a 3300X anywhere.

The R3 3100 and 3300X have been out of stock for weeks, which is disappointing, and it’s unclear when stock will return. Needless to say, we’re seeing stock shortages for a whole host of PC parts, so it’s not just the 3300X that’s been affected by the global pandemic, it’s everything.

Unless stock levels were high going into 2020, there’s a good chance many products are either overpriced or simply unavailable. The Ryzen 5 3600, for example, went on sale in mid-2019. With high demand for that part, you can bet AMD was spitting them out as fast as it could, building up a sizable inventory that it’s now able to burn through.

As a result the Ryzen 5 3600 is not only available today, but it’s available at a discount, typically selling for around $175, down from the $200 MSRP. That makes the 3600 the cheapest Zen 2-based processor you can buy right now.

But what if the Ryzen 3 3300X was available? That may be the case when this article goes live, or two weeks after… but if it were available at $130, should you buy it for gaming?

It’s a quad-core after all, and those are useless for gaming, aren’t they? Well, as we explained last year in a feature titled “Are Quad-cores Finally Dead in 2019?”, it depends. We found that 4-core/4-thread processors struggle in a number of modern games, often suffering from poor frame time performance that leads to a noticeably poor gaming experience. However, 4-core/8-thread processors like the 3300X, which feature SMT (simultaneous multithreading), are much better in that regard and generally avoid stuttery performance in modern titles. This is why processors like the Core i7-4790K, 6700K and 7700K still work quite well even today, while their Core i5 equivalents do not.

Another big consideration is price. With the Ryzen 5 3600 dropping to just $175, opting for the 3300X saves only $45 (about 26%). You’re taking a 33% core cut, but as we just saw, in a lot of instances the impact on gaming performance is smaller than either of those figures. Basically, if you’re upgrading an old system and want to save as much money as possible, saving $45 on the CPU might be a pretty big deal, especially if you won’t notice a performance difference.
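The price and core-count percentages in this discussion can be checked quickly. The sketch below uses the article’s own figures: $175 for the Ryzen 5 3600 and the hypothetical $130 price for the 3300X. It also covers the earlier point that going from four to six cores is a 50% increase, while going from six down to four is a 33% cut. The helper names are illustrative only.

```python
def pct_saving(higher_price: float, lower_price: float) -> float:
    """Saving as a percentage of the more expensive part's price."""
    return (higher_price - lower_price) / higher_price * 100.0


def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means a cut)."""
    return (new - old) / old * 100.0


price_3600, price_3300x = 175.0, 130.0  # article's street price and hypothetical price
cores_3600, cores_3300x = 6, 4

saving = price_3600 - price_3300x                    # dollars saved
saving_pct = pct_saving(price_3600, price_3300x)     # about 25.7%
core_cut_pct = -pct_change(cores_3600, cores_3300x)  # about 33.3% fewer cores
core_gain_pct = pct_change(cores_3300x, cores_3600)  # 50% more cores

print(f"${saving:.0f} saved ({saving_pct:.1f}%), "
      f"{core_cut_pct:.1f}% core cut, {core_gain_pct:.0f}% core gain")
```

The asymmetry between 33% and 50% comes from the choice of baseline. The same two-core difference is measured against six cores in one direction and four cores in the other.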

Even compared to a class-leading gaming CPU like the Core i9-9900K (now the Core i7-10700K), the Ryzen 3 3300X isn’t that much slower in a lot of games. With an RTX 2080 Ti at 1080p using medium settings, the 3300X was ~20% slower than the 9900K. Under more realistic conditions, that margin shrank to just 13% when playing at ultra, or 12% at 1440p, where the 3300X delivered over 100 fps on average.

All that said, if you can afford to step up to the Ryzen 5 3600, then we suggest you do. The 3300X is a solid CPU and a good value, but it’s right on the edge where 4-core/8-thread processors could become problematic down the track. It seems far more likely to us that the R5 3600 will become noticeably better than the 3300X within the next few years, whereas we doubt we’ll see the same happening with the 3700X vs. 3600, for example. In our opinion, it will be a while before 6-core/12-thread processors stop cutting it for gamers, regardless of how many cores the new consoles have.

