
A hot potato: Machine learning algorithms have taken the world by storm, and the world will likely suffer from the increasingly popular generative services available through online subscriptions. For the first time, scientists have calculated how much energy these services need; it’s a lot.

Generative AI services are truly spectacular energy-wasting machines, and AI-based image “creation” is the worst of the activities studied when it comes to carbon emissions. A recently published study from AI startup Hugging Face and Carnegie Mellon University tries to understand the impact of AI systems on the planet, analyzing different activities and generative models.

The paper examined the average quantity of carbon emissions produced by AI models per 1,000 queries, finding that generating text is significantly less energy-intensive than generating images. A chatbot answering 1,000 queries consumes about 16% of the energy needed for a full smartphone charge, while generating a single image with a “powerful” AI model can take as much energy as a full recharge.

Study lead Alexandra Sasha Luccioni said that people think about AI as an “abstract technological entity” that lives on a “cloud,” with no environmental impact whatsoever. The new analysis demonstrates that every time we query an AI model, the computing infrastructure sustaining that model has a substantial cost for the planet.

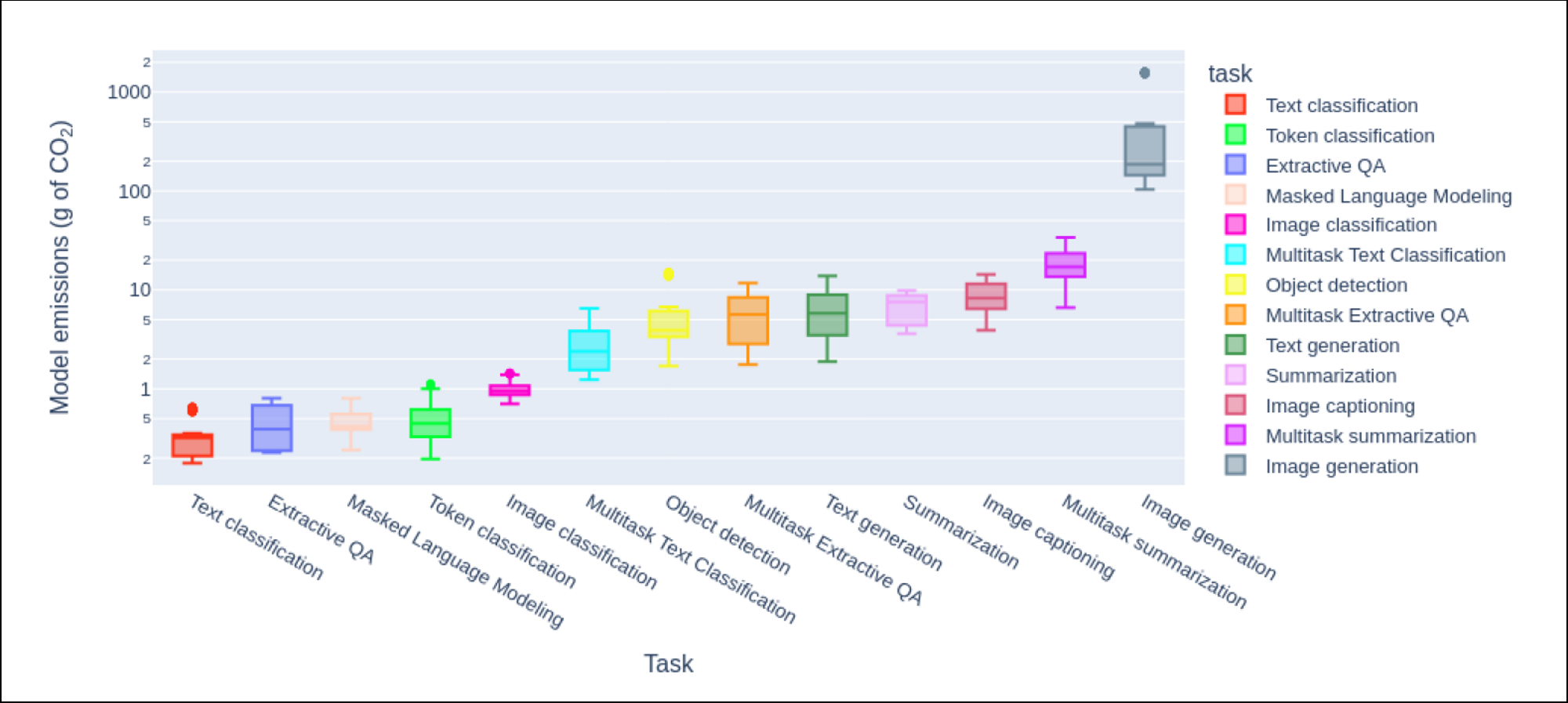

Luccioni’s team calculated the carbon emissions associated with 10 popular AI tasks on the Hugging Face platform, including question answering, text generation, and image classification. To measure the energy used by those tasks, the scientists developed a tool called Code Carbon, which calculates the energy consumed by a computer while it runs the AI model.

Using a powerful model like Stable Diffusion XL to generate 1,000 images, the study found, produces as much carbon dioxide as driving an “average” gasoline-powered car for 4.1 miles. The least carbon-intensive text generation model was responsible for as much CO2 as driving 0.0006 miles in a similar vehicle.
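To put those two driving-distance figures side by side, here is a minimal Python sketch of the arithmetic. It assumes both numbers refer to the same workload of 1,000 queries, which the article implies but does not state outright; the constant and function names are illustrative and do not come from the study.

```python
# Car-mile equivalents quoted in the article.
# Assumption: both figures cover the same workload (1,000 queries).
IMAGE_MODEL_MILES = 4.1     # Stable Diffusion XL, 1,000 images
TEXT_MODEL_MILES = 0.0006   # least carbon-intensive text generation model


def emissions_ratio(high_miles: float, low_miles: float) -> float:
    """Return how many times larger the first car-mile equivalent is."""
    if low_miles <= 0:
        raise ValueError("low_miles must be positive")
    return high_miles / low_miles


ratio = emissions_ratio(IMAGE_MODEL_MILES, TEXT_MODEL_MILES)
print(f"Image generation emits roughly {ratio:,.0f} times more CO2")
```

Under that assumption, the gap between the two extremes spans more than three orders of magnitude.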

Using large, complex generative models is a much more energy-intensive affair than employing smaller AI models trained on specific tasks, the study further explains. Because complex models have been trained to do many things at once, they can consume up to 30 times more energy than a simpler, fine-tuned model built for a single task.

The researchers also calculated that the day-to-day emissions from AI services are significantly higher than the emissions associated with training the models. Popular generative models like ChatGPT are used millions of times per day, so they would need just a couple of weeks to exceed the CO2 emissions associated with their training. Vijay Gadepally, a research scientist at MIT Lincoln Laboratory, said that the companies profiting from AI models must be held responsible for their greenhouse gas emissions.
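The break-even reasoning behind that claim can be sketched in a few lines of Python. Every input value below is a hypothetical placeholder chosen only to show the calculation; none of them comes from the study, and the function name is an assumption made for illustration.

```python
def days_to_exceed_training(training_kg: float,
                            per_query_kg: float,
                            queries_per_day: float) -> float:
    """Days of usage after which inference CO2 matches training CO2."""
    if per_query_kg <= 0 or queries_per_day <= 0:
        raise ValueError("per_query_kg and queries_per_day must be positive")
    daily_kg = per_query_kg * queries_per_day
    return training_kg / daily_kg


# Hypothetical placeholder inputs, NOT figures from the study.
days = days_to_exceed_training(
    training_kg=50_000.0,        # assumed one-off training emissions
    per_query_kg=0.0005,         # assumed emissions per query
    queries_per_day=10_000_000,  # assumed daily usage
)
print(f"Usage emissions match training after about {days:.1f} days")
```

The point of the sketch is the shape of the relationship: training is a one-off cost, while usage emissions scale with daily traffic, so heavy use shortens the break-even time.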
