A lot has been said about 8GB graphics cards over the past year, and much of that discussion was sparked by our 8GB vs. 16GB VRAM comparison, which looked at the Radeon RX 6800 and GeForce RTX 3070. If you’re interested, we suggest checking it out. In summary, we found numerous instances where the 16GB RX 6800 outperformed the RTX 3070 in modern games, a difference directly tied to the larger 16GB VRAM buffer.

Since then, we have found additional cases where games perform better with 16GB of VRAM compared to just 8GB, either by delivering smoother frame rates or providing higher image quality through rendering all textures at full resolution.

The purpose of that feature was to raise awareness among gamers of the importance of VRAM. And armed with that knowledge, gamers could begin to push AMD and Nvidia to include more VRAM in future GPU generations. Historically, we’ve seen models like the RTX 3070 and 3070 Ti launch with inadequate VRAM capacities, despite their high prices.

Sadly, this generation has seen a product priced at $400 offering just 8GB of VRAM in the RTX 4060 Ti, though a 16GB version is now available for $450.

The reality is that, soon, 8GB of VRAM will be inadequate for many new games, a scenario we’ve already begun to witness. Thus, if you’re considering an RTX 4060 Ti, we strongly advise purchasing the 16GB version, as the small 13% premium helps you avoid potential performance or visual issues down the track.

More recently, we’ve also seen the arrival of the 16GB Radeon RX 7600 XT, essentially a 16GB variant of the RX 7600, which only came with 8GB of VRAM. In this case, gamers are looking at a 22% premium for the 16GB model, making it a harder buy, though still encouraged for the same reasons.
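To put those premiums in perspective, here’s a quick sketch of the arithmetic in Python. The RTX 4060 Ti prices are the ones quoted above. The $269 and $329 figures for the RX 7600 and RX 7600 XT are launch MSRPs that we’re assuming here, as they aren’t stated in this article, and `premium_percent` is just an illustrative helper name.

```python
def premium_percent(base_price, upgraded_price):
    """Extra cost of the upgraded model, as a percentage of the base price."""
    return (upgraded_price / base_price - 1) * 100


# Prices quoted in the article for the RTX 4060 Ti.
rtx_4060_ti = premium_percent(400, 450)

# Assumed launch MSRPs (not stated in the article) for the RX 7600 / RX 7600 XT.
rx_7600_xt = premium_percent(269, 329)

print(f"RTX 4060 Ti 16GB premium: {rtx_4060_ti:.1f}%")  # 12.5%
print(f"RX 7600 XT 16GB premium:  {rx_7600_xt:.1f}%")   # 22.3%
```

The 12.5% result is what we round up to 13% in the text.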

Interestingly, when the 16GB RTX 4060 Ti was released, we saw a lot of people claiming that the 16GB buffer was unnecessary for such a product. The argument was that the card was too slow, and that the 128-bit wide memory bus resulted in bandwidth of just 288 GB/s, which would prevent effective use of the larger buffer. However, we showed in our review of the RTX 4060 Ti 16GB that the extra memory was indeed useful, with examples where performance and/or image quality was substantially better.

Similar skepticism was directed at the Radeon RX 7600 XT, given its slower speed compared to the RTX 4060 Ti. Here, we’re also looking at a 128-bit memory bus with a bandwidth of 288 GB/s. If such claims were valid, it would follow that GPUs like the Radeon RX 6500 XT can’t effectively utilize an 8GB buffer, given its narrower 64-bit memory bus and half the bandwidth of the 7600 XT.
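For those wondering where these bandwidth figures come from, peak memory bandwidth is the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below assumes 18 Gbps GDDR6, which isn’t stated above but is the rate implied by 288 GB/s on a 128-bit bus. The function name is our own.

```python
def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: bits per transfer * Gbps per pin / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumption: 18 Gbps GDDR6 (implied by 288 GB/s on a 128-bit bus).
DATA_RATE_GBPS = 18.0

wide_bus = memory_bandwidth_gbs(128, DATA_RATE_GBPS)   # RTX 4060 Ti, RX 7600 XT
narrow_bus = memory_bandwidth_gbs(64, DATA_RATE_GBPS)  # RX 6500 XT

print(f"128-bit bus: {wide_bus:.0f} GB/s")   # 288 GB/s
print(f"64-bit bus:  {narrow_bus:.0f} GB/s")  # 144 GB/s
```

Doubling the VRAM capacity via clamshell mode changes neither number; only the bus width and memory speed affect bandwidth.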

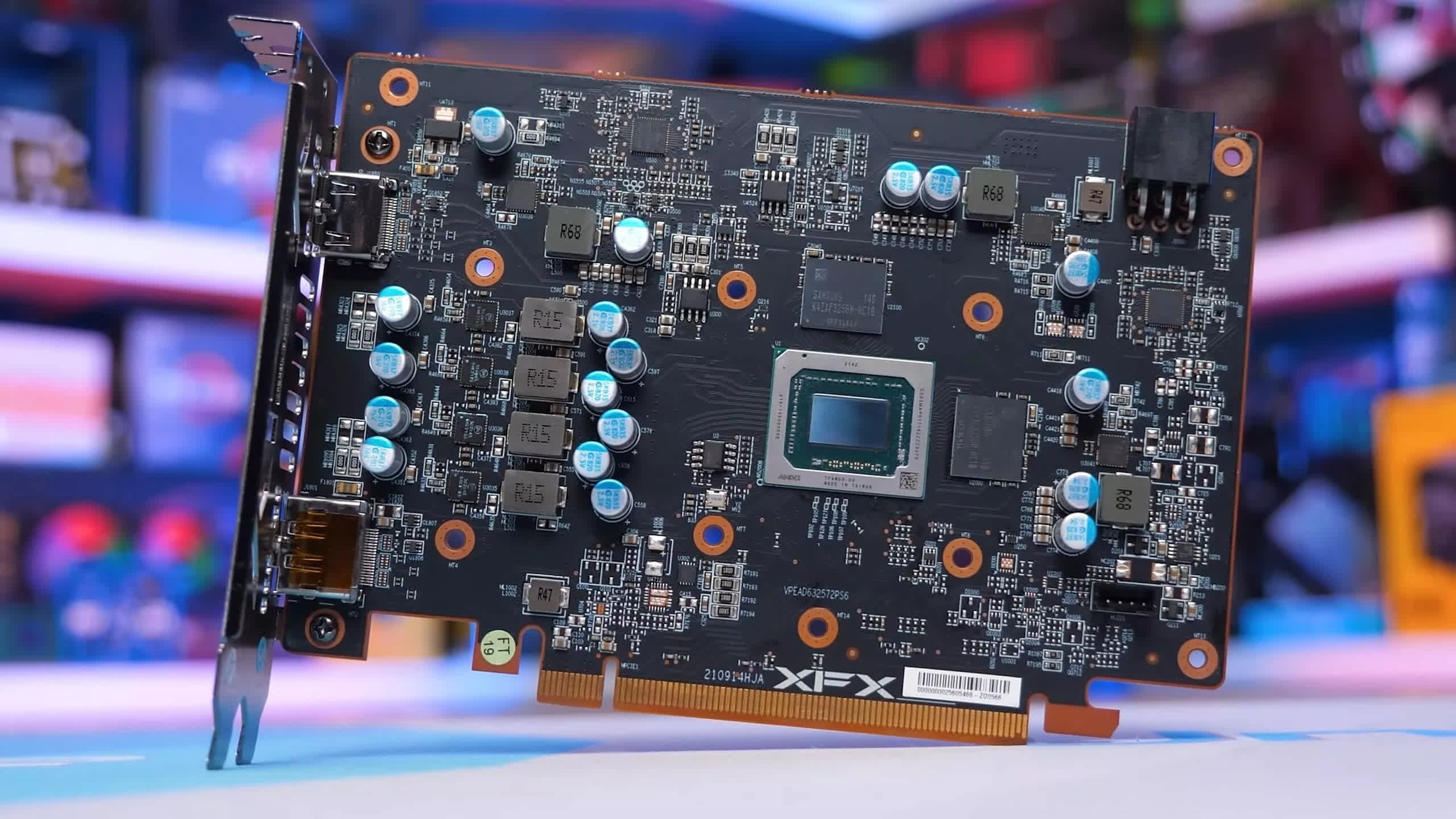

As it so happens, we have both the 4GB and 8GB versions of the Radeon 6500 XT, and aside from memory capacity, both versions are identical. Like the Radeon 7600 XT and 16GB RTX 4060 Ti, the 8GB 6500 XT doubles the number of GDDR6 chips used, attaching additional chips to the backside of the PCB using the clamshell method.

This makes the 6500 XT the most recent and most potent GPU available in both 4GB and 8GB configurations. Despite the Radeon 6500 XT being a weaker GPU, this is advantageous for our testing, providing insight into how less powerful GPUs utilize larger memory buffers. The comparison between 4GB and 8GB can also give us a glimpse into the future dynamics between 8GB and 16GB configurations.

For our testing, we’ll examine over a dozen games, all tested at 1080p using a variety of quality settings. The test system is built around the Ryzen 7 7800X3D, with DDR5-6000 CL30 memory, and we’ll use PCIe 4.0 with the 6500 XT to ensure maximum performance. Let’s take a look at the results…

Gaming Benchmarks

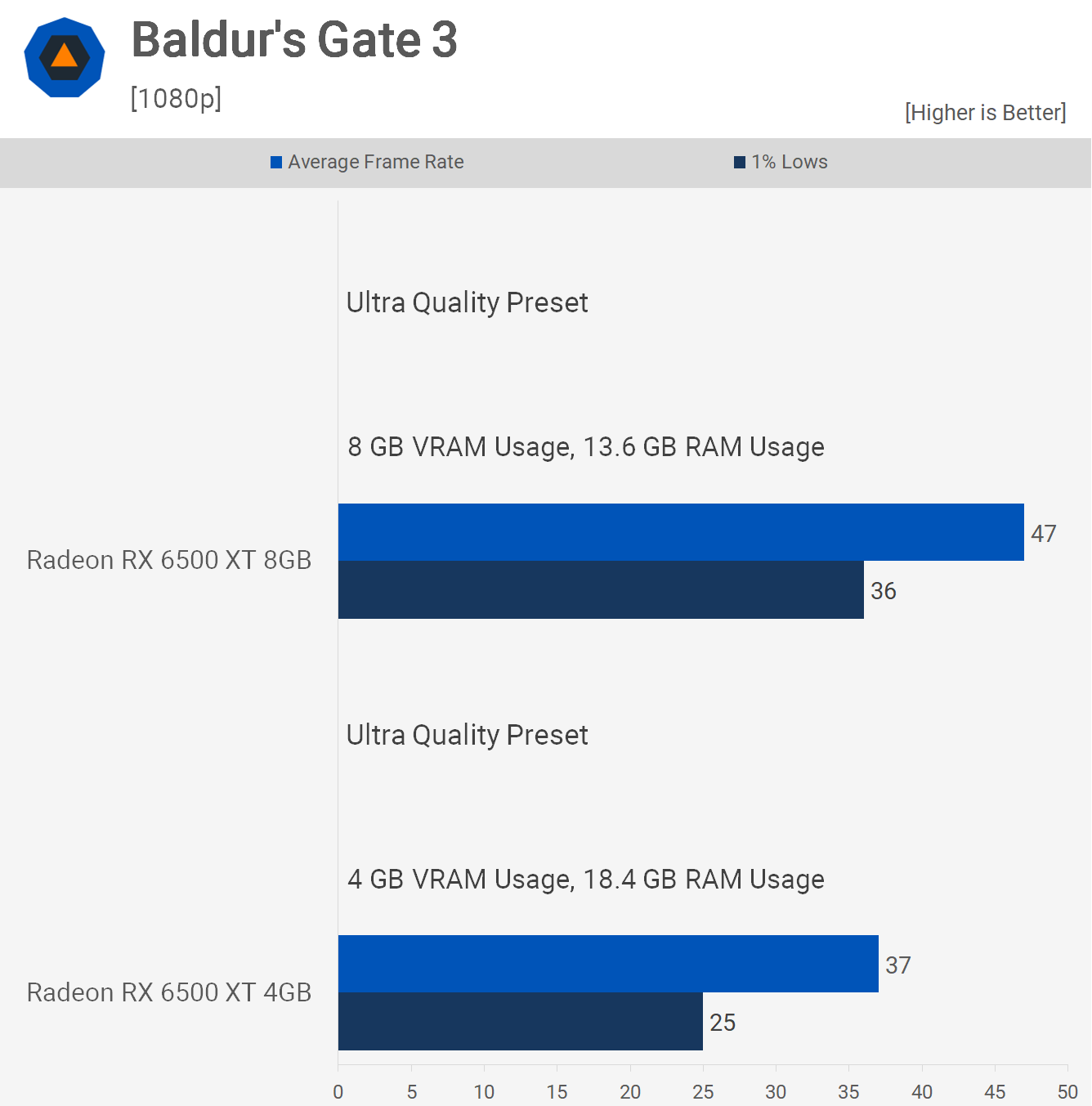

First up, we have Baldur’s Gate 3, which might not be the first game you think of when considering heavy VRAM usage. By today’s standards, it’s not particularly demanding, but it can still fully utilize an 8GB buffer, posing a challenge for 4GB graphics cards. Utilizing the ultra-quality preset, we observed a 27% increase in the average frame rate and a significant 44% improvement in the 1% lows with the 8GB 6500 XT.
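For readers unfamiliar with the metric, 1% lows describe the slowest frames in a benchmark run rather than the average. The sketch below shows one common way to derive them from captured frame times. It is an assumption for illustration, not a description of the exact method used for the results in this article, and the frame-time data is made up.

```python
def average_fps(frame_times_ms):
    """Average frame rate over the whole capture."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low(frame_times_ms):
    """Average frame rate over the slowest 1% of frames (one common convention)."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 stutters at 40 ms.
capture = [10.0] * 990 + [40.0] * 10

print(f"Average: {average_fps(capture):.1f} fps")      # 97.1 fps
print(f"1% low:  {one_percent_low(capture):.1f} fps")  # 25.0 fps
```

A handful of long frames barely moves the average but drags the 1% low down sharply, which is why VRAM shortages tend to show up in the 1% lows first.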

Additionally, because data designated for local video memory didn’t overflow into system memory, RAM usage decreased by 26% with the 8GB 6500 XT configuration.

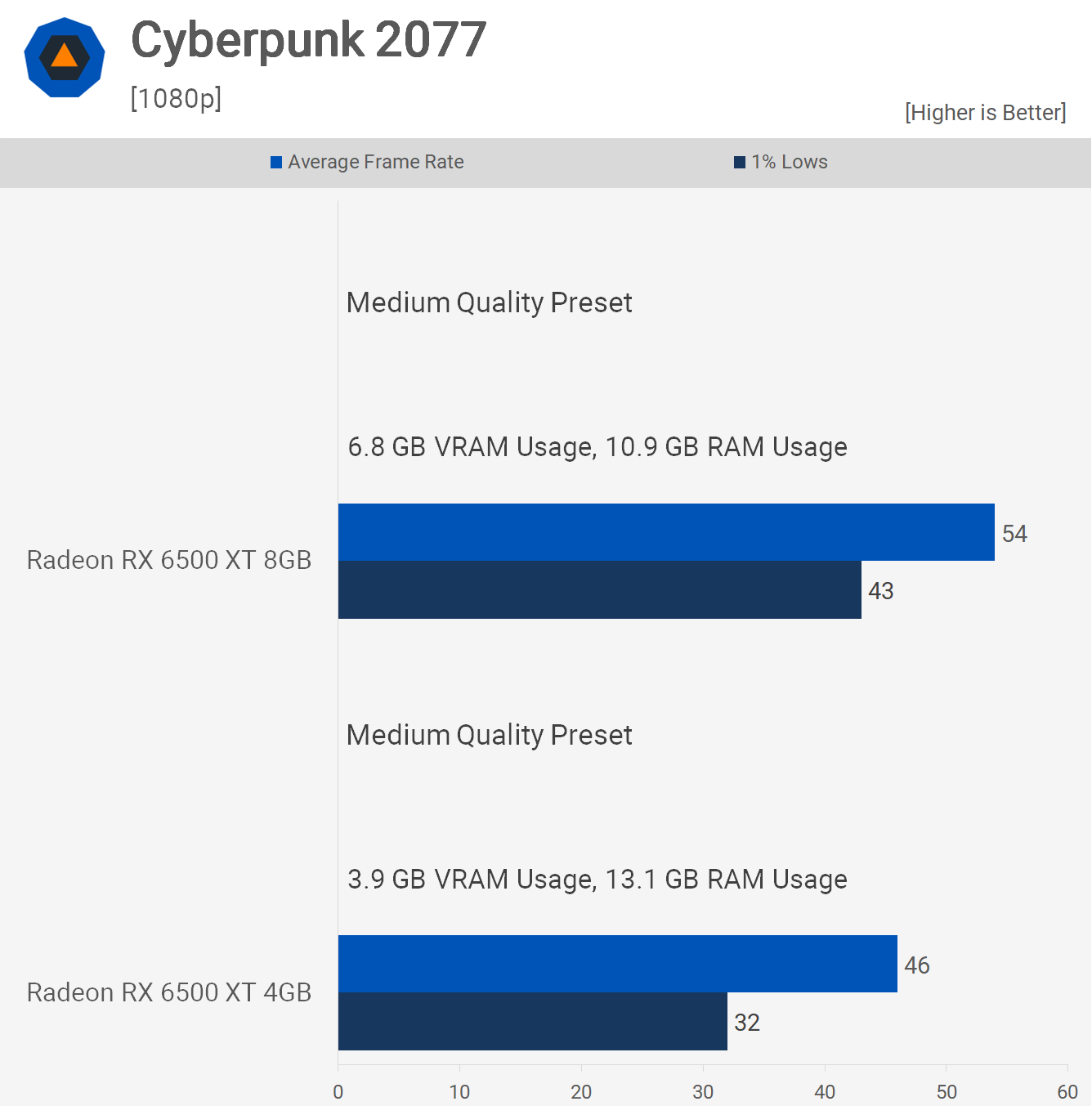

Next, we examined Cyberpunk 2077, using the medium quality preset at the native 1080p resolution. It’s important to note that all testing for this article avoided any kind of upscaling.

With a 4GB VRAM buffer on the 6500 XT, we recorded 46 fps on average. The 8GB model was 17% faster. However, the most notable increase was in the 1% lows, which improved by 34% in this case. VRAM usage rose to 6.8GB on the 8GB model, and RAM usage decreased by 17% to 10.9GB, underscoring the importance of sufficient VRAM for performance.

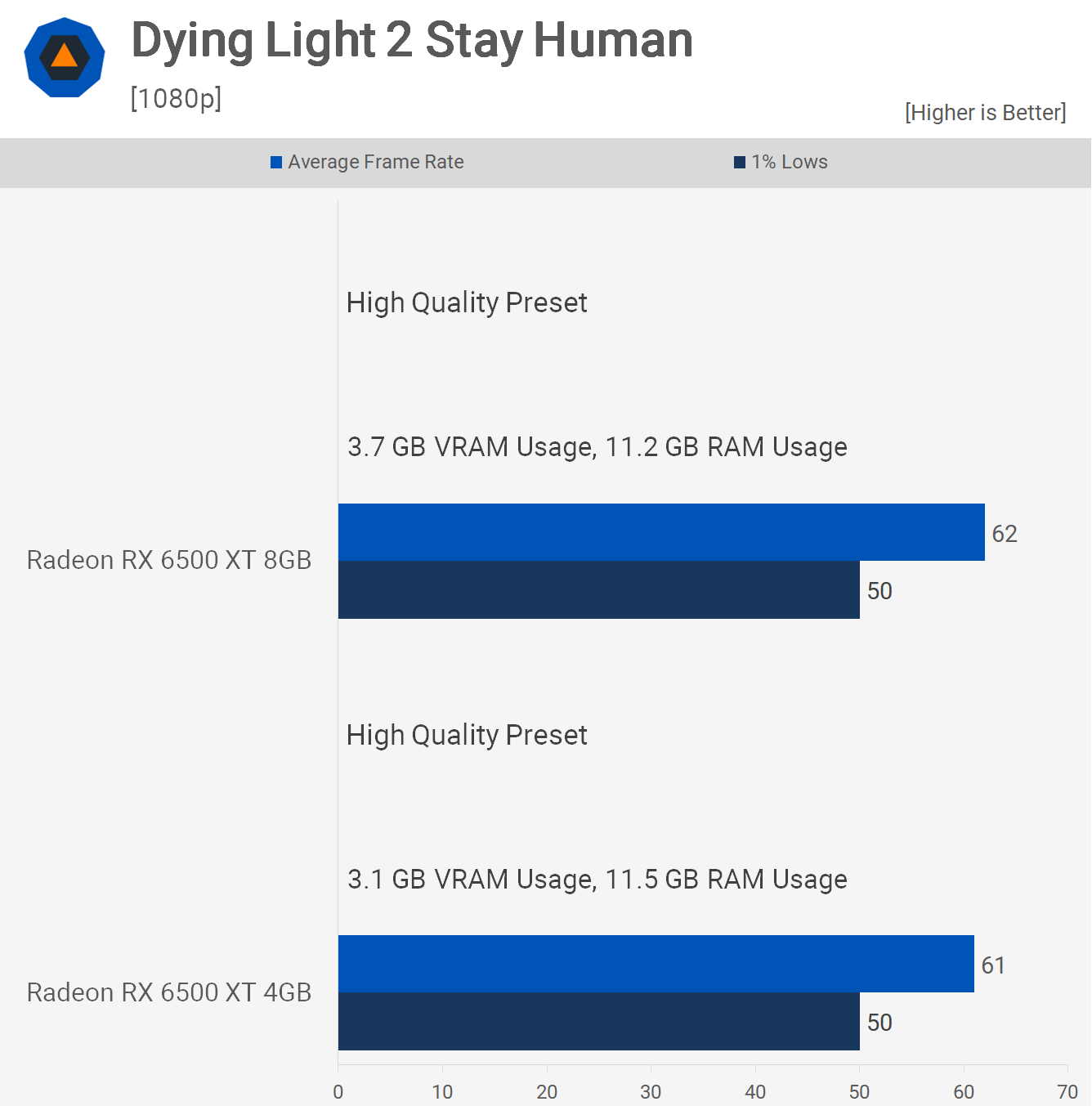

Dying Light 2 was tested using the ‘high preset,’ enabling 60 fps gameplay. These quality settings do not exceed a 4GB VRAM buffer, so both the 4GB and 8GB versions of the 6500 XT delivered identical performance levels. When 8GB graphics cards were initially introduced, most results resembled this scenario, leading gamers to believe purchasing a 4GB version of a product like the RX 480 was sensible.

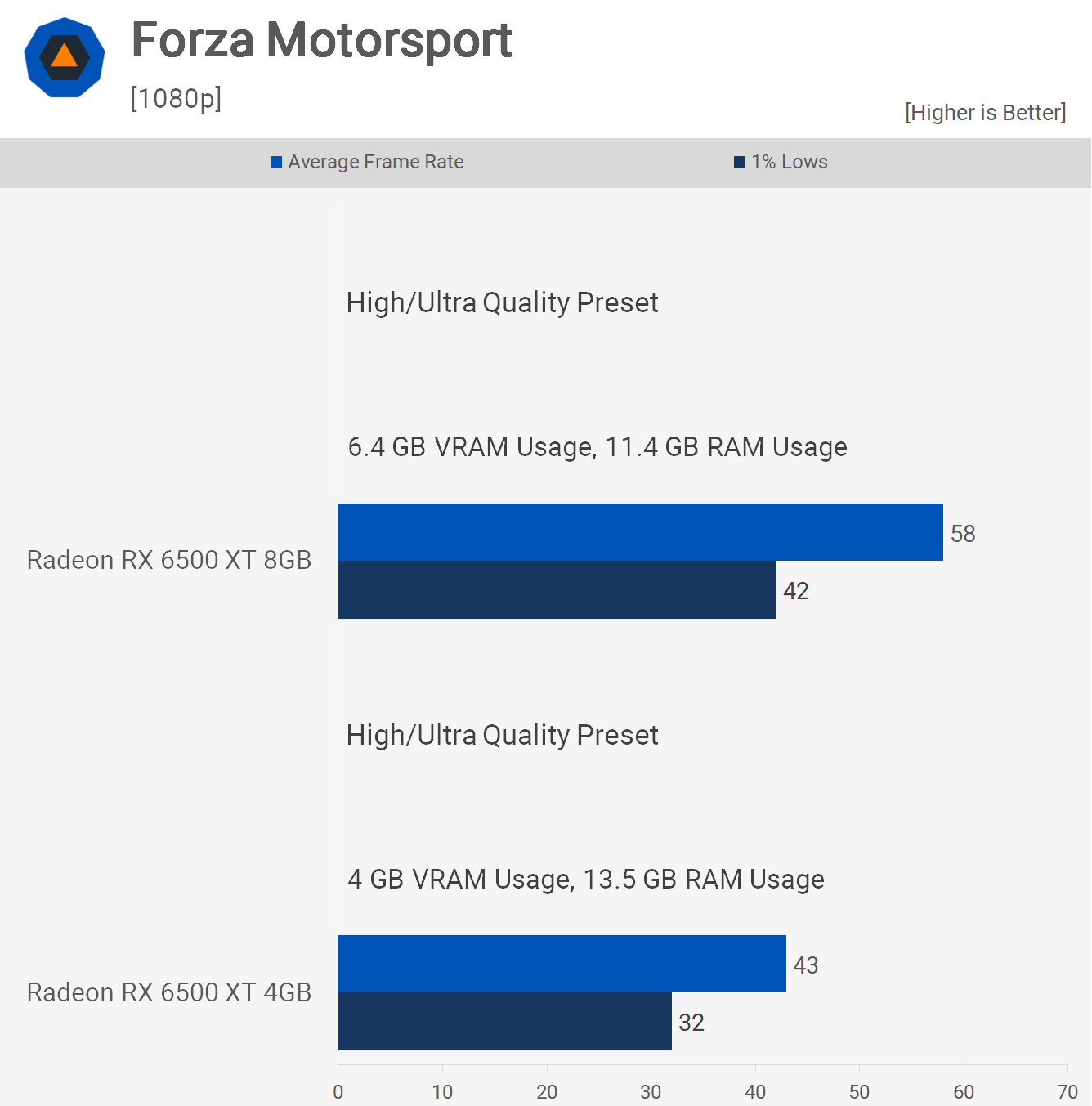

In Forza Motorsport, using a mix of high and ultra settings, we found that the game could utilize up to 6.4GB of VRAM at 1080p. This led to a 35% increase in average performance for the 8GB model.

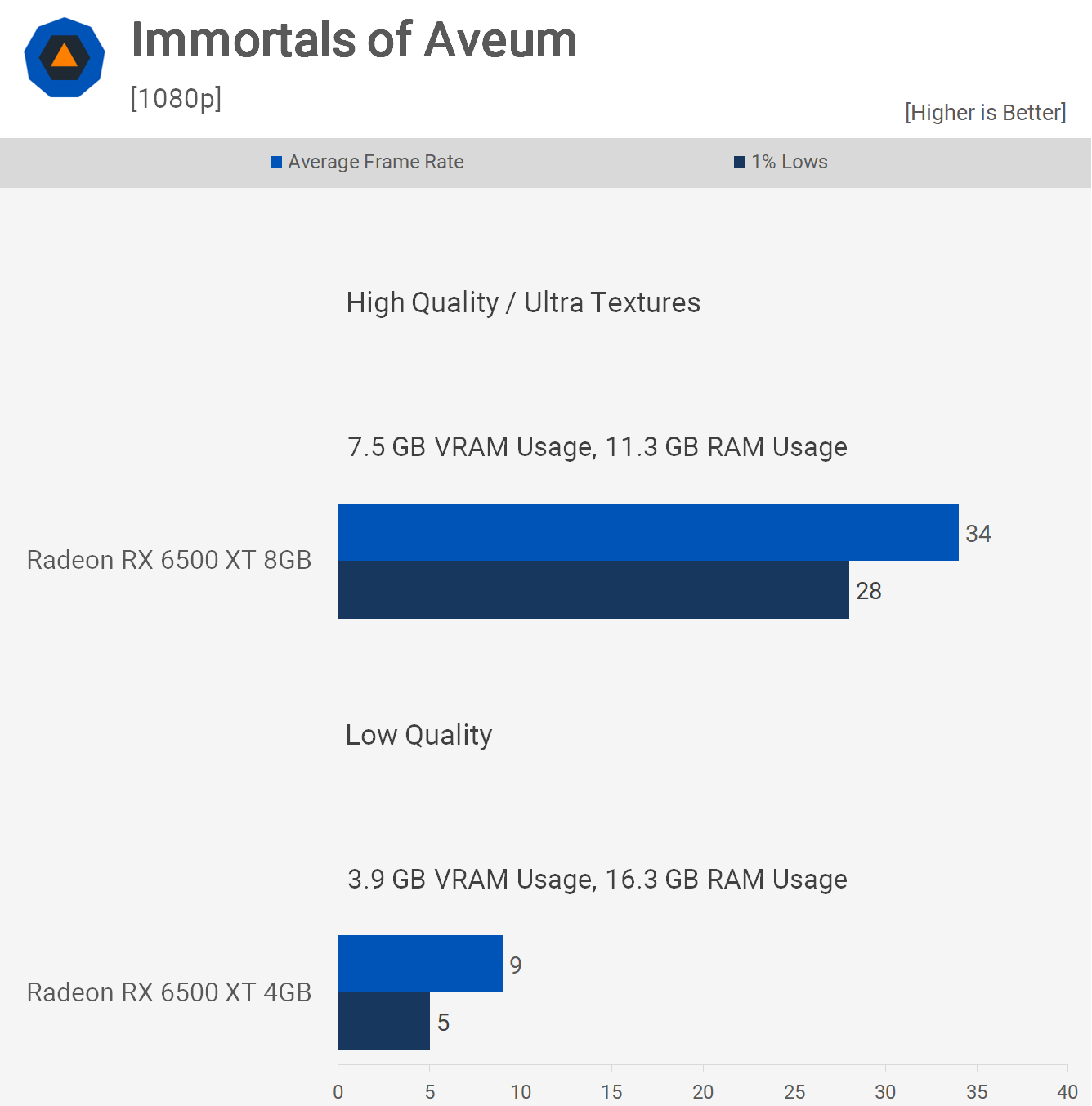

Immortals of Aveum, a visually stunning title, demonstrated that with enough VRAM, enabling the high-quality preset and manually adjusting the texture quality to ultra still yielded over 30 fps on average. While not exceptional performance, especially for a 6500 XT, the key point is that without sufficient VRAM, the game becomes completely unplayable. Even on the lowest quality settings, the 4GB 6500 XT struggled, achieving only 9 fps on average. The performance difference here, using the same GPU but with varying VRAM capacities, is remarkable.

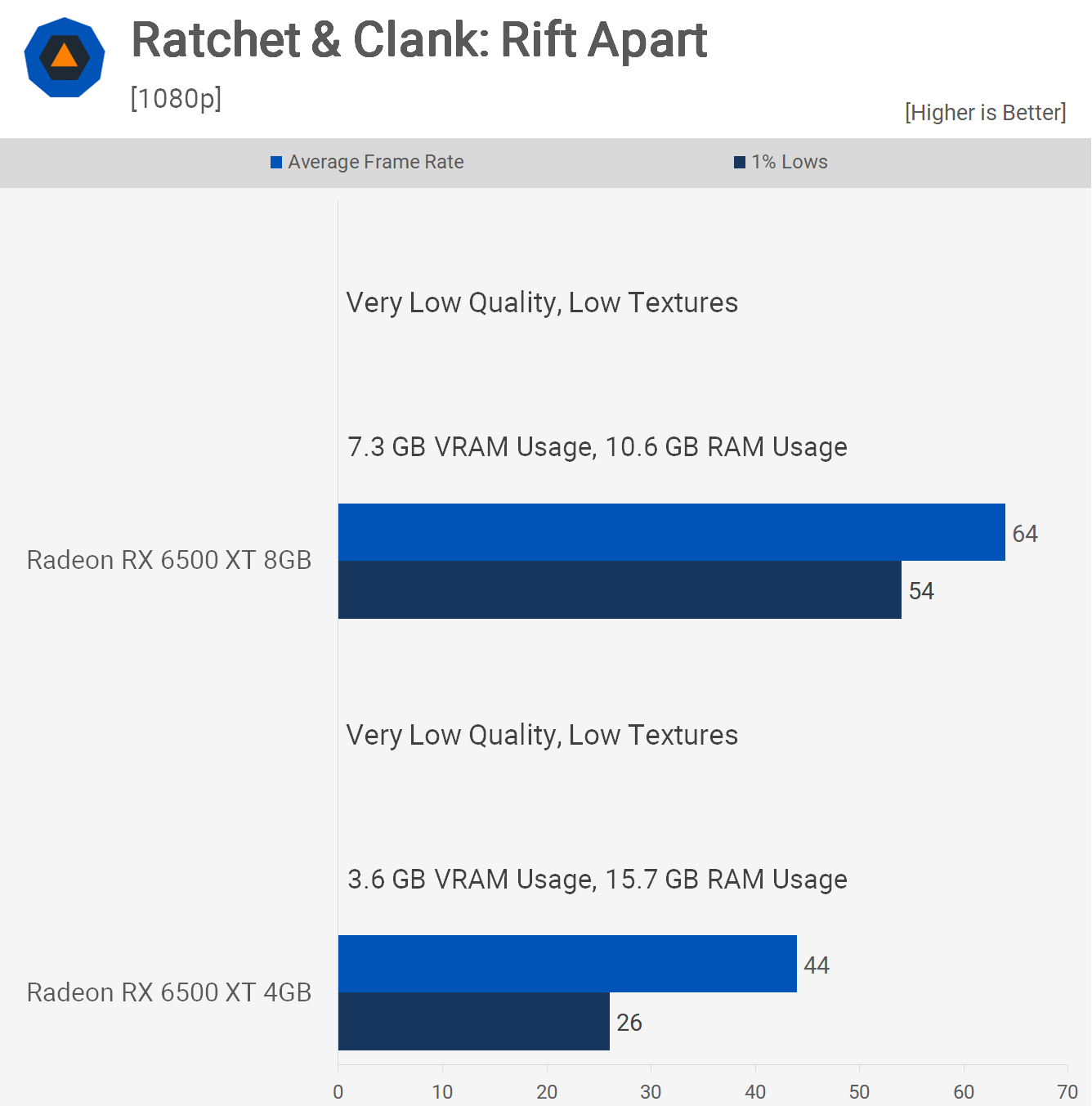

Another game that demands substantial VRAM is Ratchet & Clank: Rift Apart. Testing at 1080p using the lowest possible quality preset resulted in the 4GB model achieving only 44 fps on average. In contrast, the 8GB version was 45% faster, or an incredible 108% faster when comparing 1% lows. This is attributed to the game requiring at least 8GB of VRAM, using 7.3GB in our tests. Additionally, having enough VRAM on the 8GB card reduced system memory usage by 32% compared to the 4GB model.

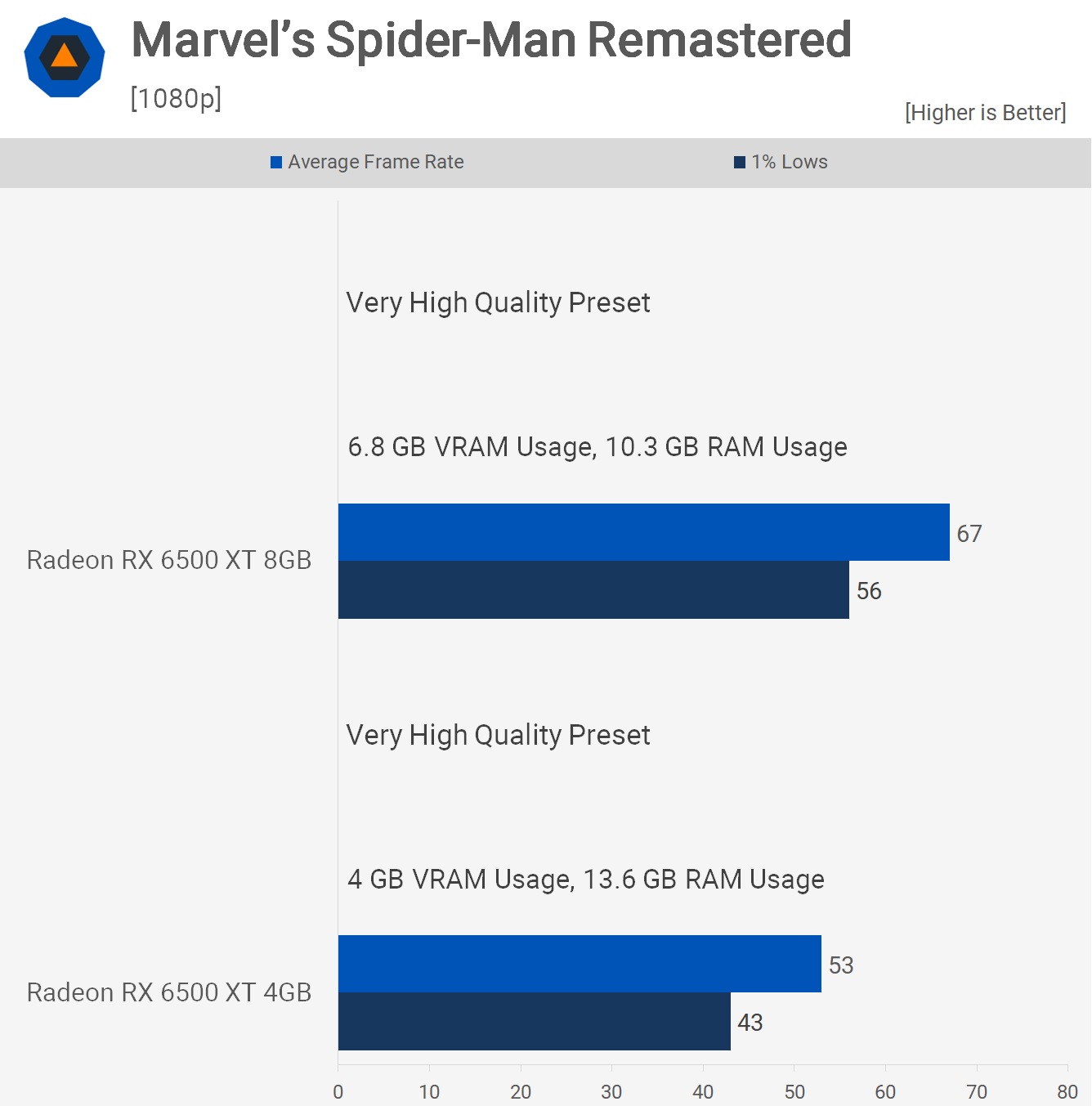

Lastly, we tested Spider-Man Remastered, enabling the highest quality preset, labeled ‘very high’. With 8GB of VRAM, the 6500 XT managed to render over 60 fps, marking it 26% faster than the 4GB version. This was because the game consumed up to 6.8GB of VRAM in our test scene, and having sufficient VRAM with the 8GB model decreased RAM usage by 24%.

Ultra vs. Low Benchmarks and Visual Comparisons

Assassin’s Creed Mirage

In this next section, we’re going to make several comparisons, many accompanied by image quality comparisons. There’s a lot to cover, but we believe there’s content here that you may not have seen before. We’ll start with Assassin’s Creed Mirage.

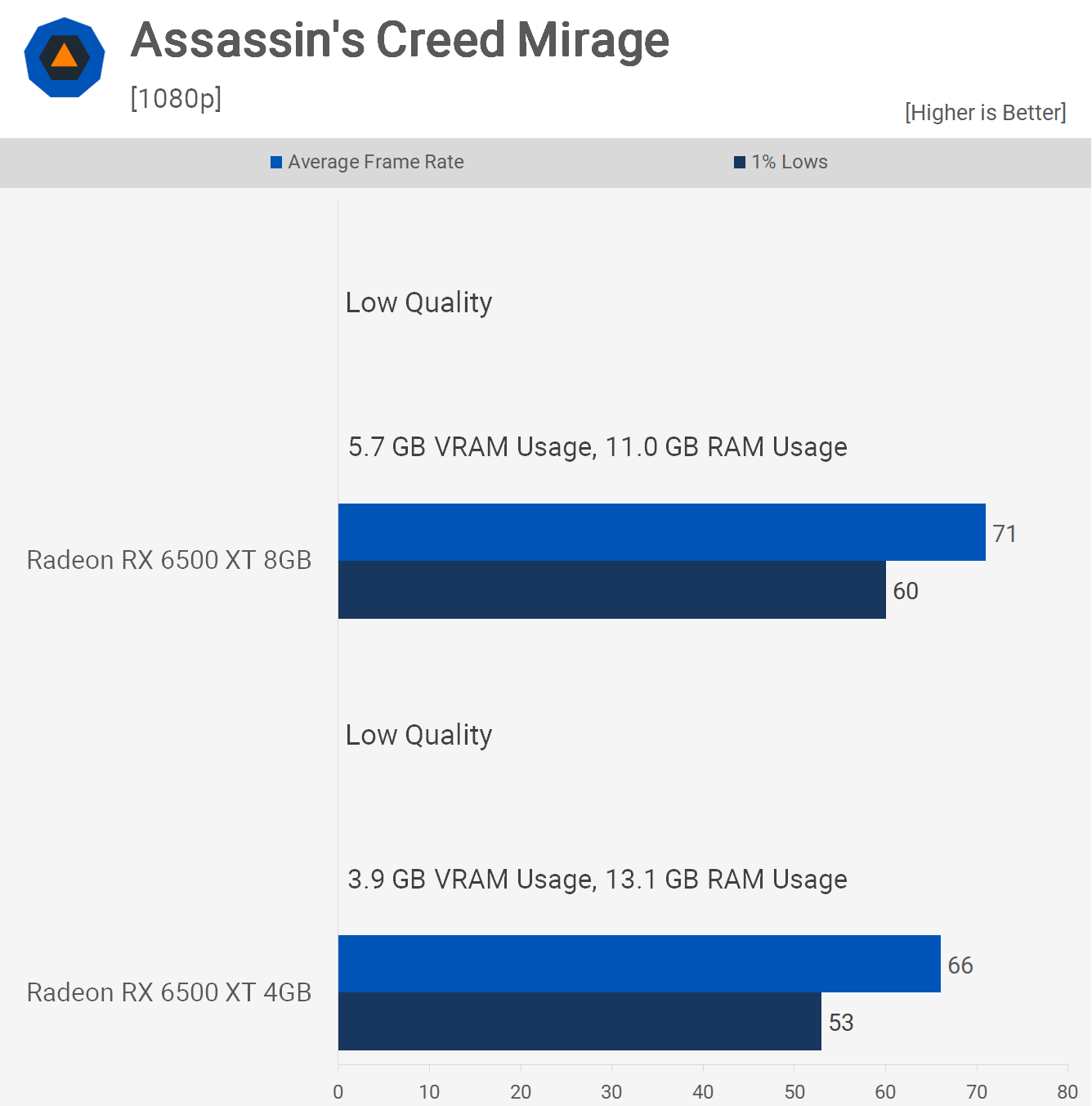

Using the low-quality preset, the 8GB model was still 8% faster as the game used up to 5.7GB of VRAM.

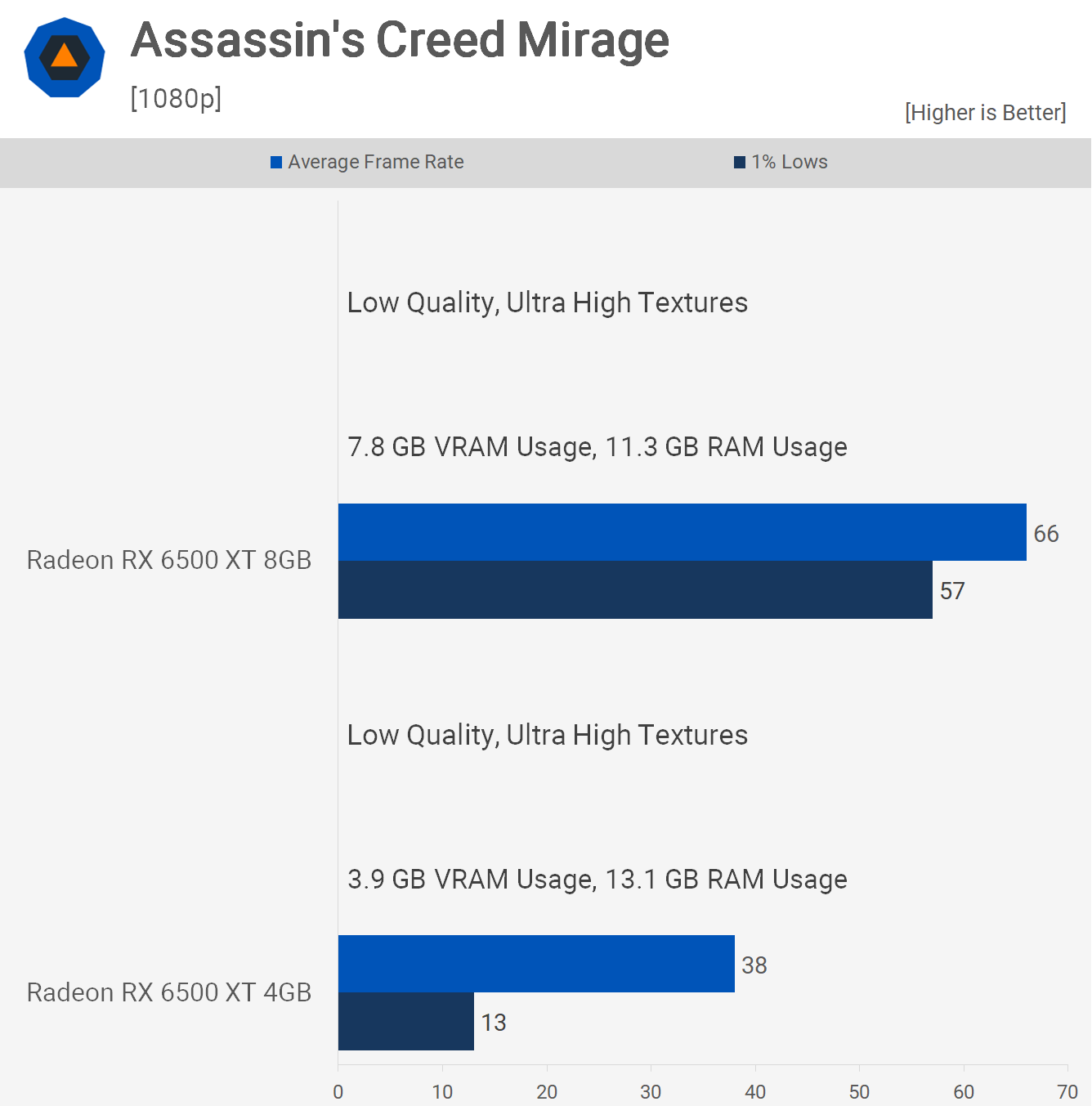

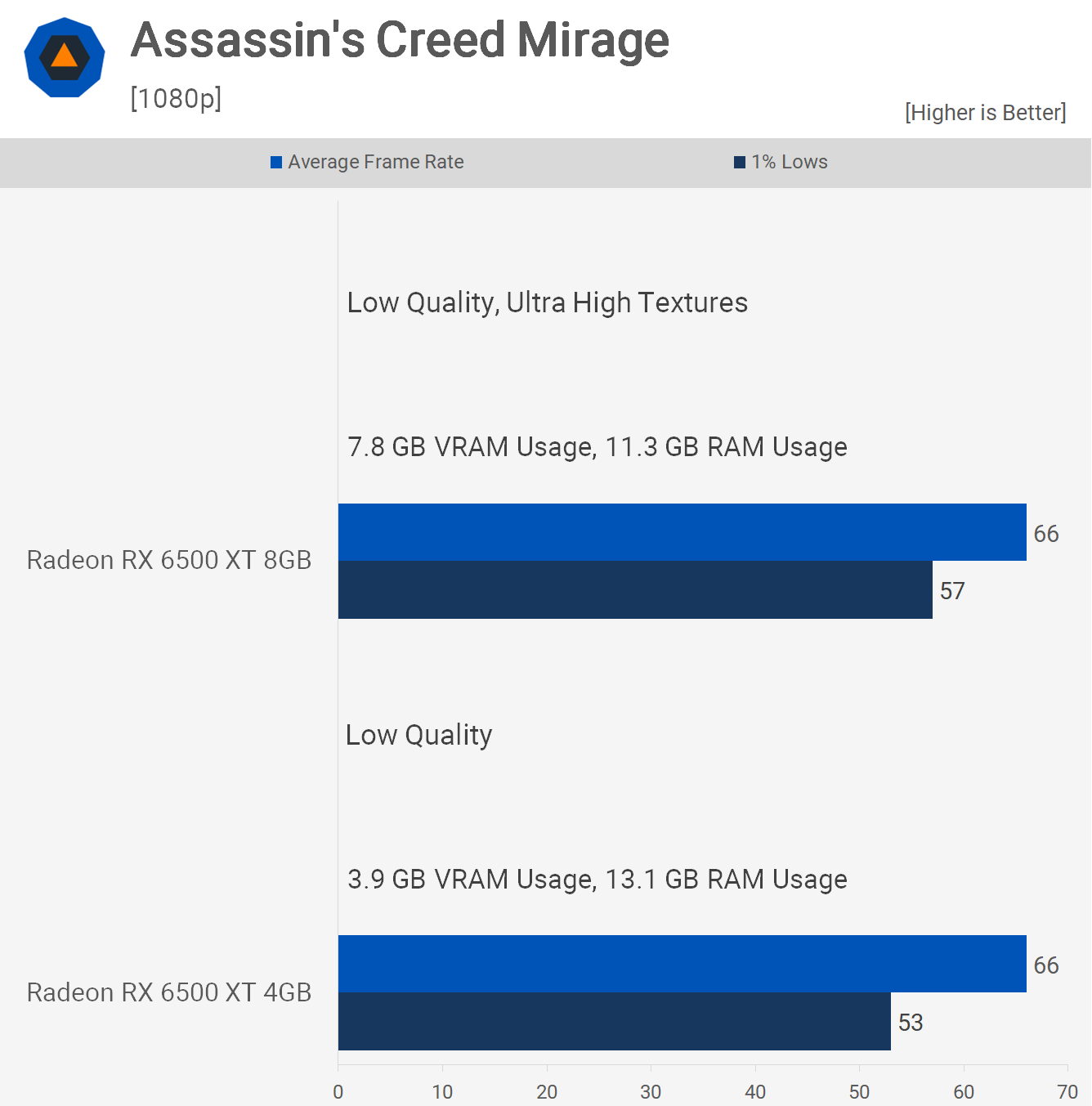

For these results, we kept the low-quality preset enabled but manually increased the texture quality to ultra-high. As a result, the 8GB configuration saw a 7% reduction in performance, but the 4GB model experienced a massive 42% decrease, or a 75% decrease when looking at the 1% lows. The game is now close to maxing out the 8GB buffer, which is why the 8GB model saw a slight decrease in performance and a slight increase in RAM usage.

The end result was a playable 66 fps on average for the 8GB card, making it 74% faster than the 4GB model, or 338% when comparing 1% lows.

Essentially, this means that for roughly the same level of performance, you can enable ultra-high textures with the 8GB model, while 4GB cards are limited to low texture quality. Even then, we are still observing an increase in RAM usage for the 4GB model.
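The percentages above can be chained together to recover approximate frame rates for each configuration. Here’s a rough sketch that starts from the one absolute figure we quoted (66 fps) and works backwards. The intermediate frame rates are reconstructions from rounded percentages, not measured results, and the variable names are our own.

```python
def percent_faster(a_fps, b_fps):
    """How much faster a is than b, in percent."""
    return (a_fps / b_fps - 1) * 100


fps_8gb_ultra_tex = 66.0                           # quoted in the article
fps_8gb_low_tex = fps_8gb_ultra_tex / (1 - 0.07)   # 8GB lost 7% with ultra-high textures
fps_4gb_low_tex = fps_8gb_low_tex / 1.08           # 8GB was 8% faster at low
fps_4gb_ultra_tex = fps_4gb_low_tex * (1 - 0.42)   # 4GB lost 42% with ultra-high textures

print(f"4GB, low textures:        ~{fps_4gb_low_tex:.0f} fps")
print(f"4GB, ultra-high textures: ~{fps_4gb_ultra_tex:.0f} fps")
print(f"8GB vs 4GB at ultra-high: ~{percent_faster(fps_8gb_ultra_tex, fps_4gb_ultra_tex):.0f}% faster")
print(f"8GB ultra-high vs 4GB low: ~{percent_faster(fps_8gb_ultra_tex, fps_4gb_low_tex):.1f}% faster")
```

The reconstructed gap at ultra-high textures lands within a point or so of the 74% figure, with the difference down to rounding, and the last line shows why we call the 8GB card with ultra-high textures and the 4GB card with low textures roughly equal.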

Visual Comparison

Having seen the numbers, let’s look at how this translates visually. On the left, we have ultra-high textures, and on the right, low textures. At a glance, they appear very similar, but as you pay more attention while playing, you’ll notice differences. It’s important to remember we are using the lowest quality settings, so aspects like level of detail, shadows, lighting, and other visuals are compromised here. However, you can clearly see distant elements rendering at a higher quality with ultra-high textures.

The brickwork on the ground, for example, is noticeably better, and this difference becomes apparent when playing the game. We hope YouTube compression hasn’t diminished the quality of these visual comparisons. It’s challenging to discern in this test, but the foliage on trees, for instance, looks better, although, in this title, the differences are often subtle. The key point is that with the 8GB model, you receive the visual upgrades without any performance penalty.

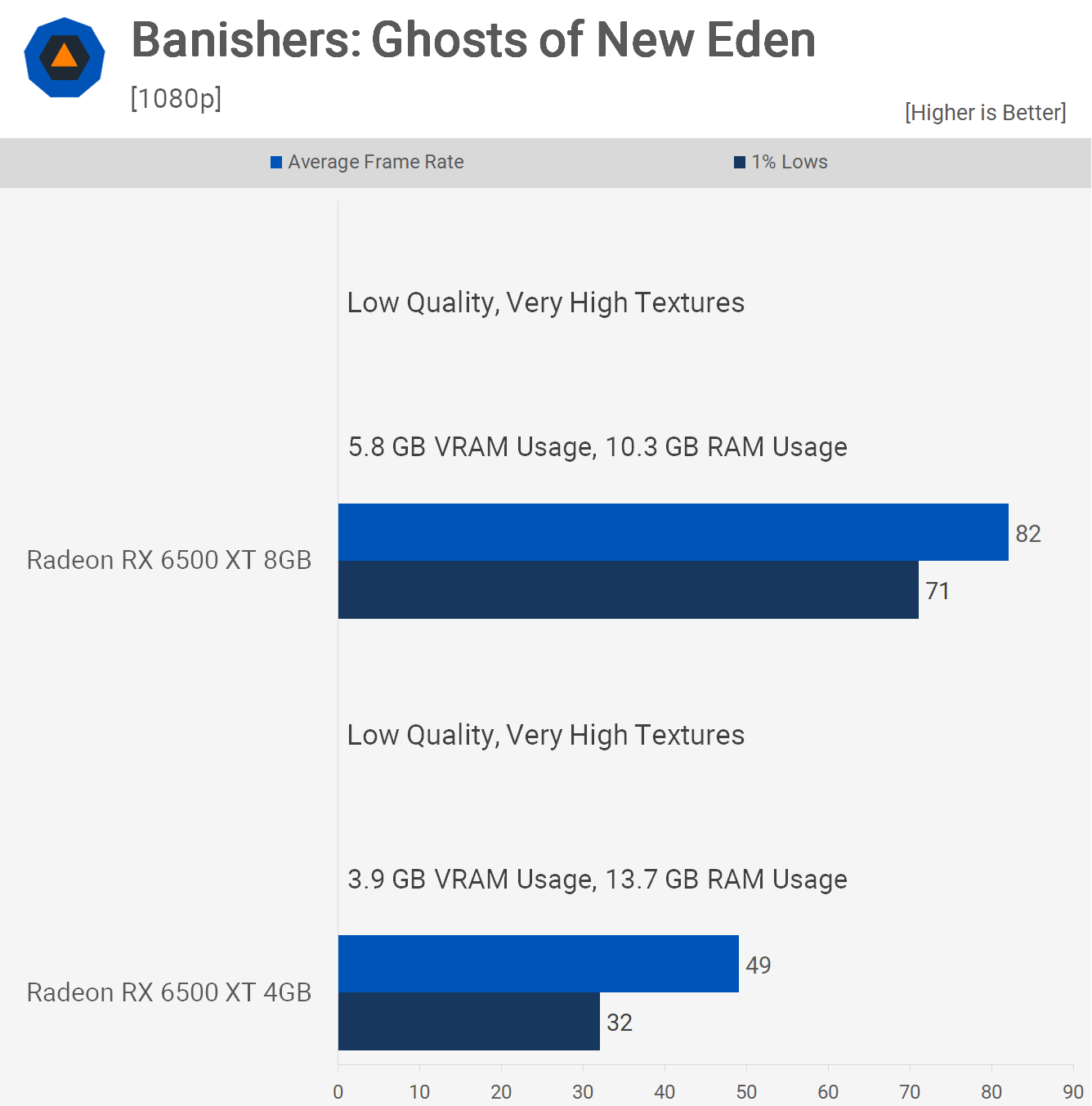

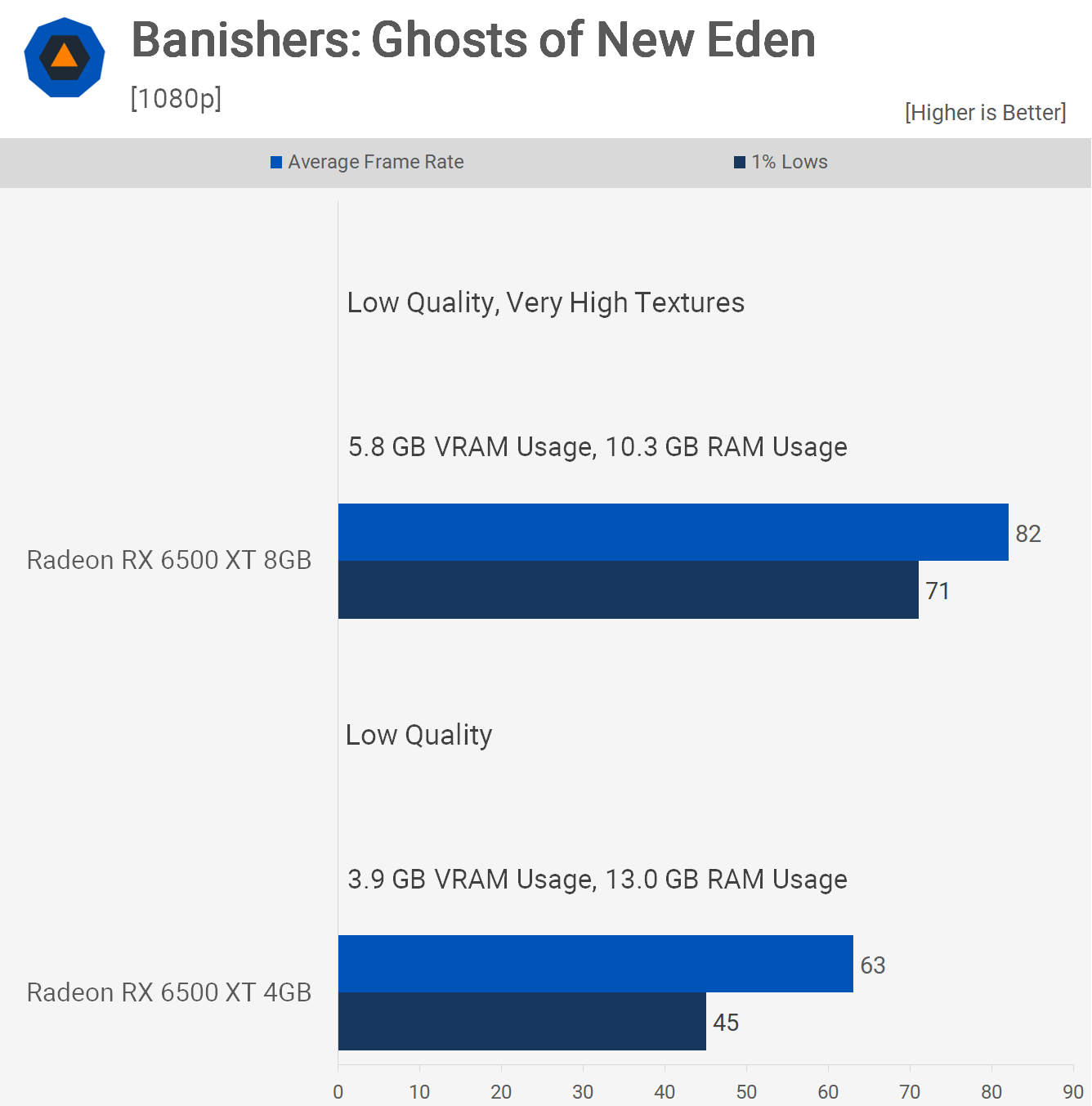

Banishers: Ghosts of New Eden

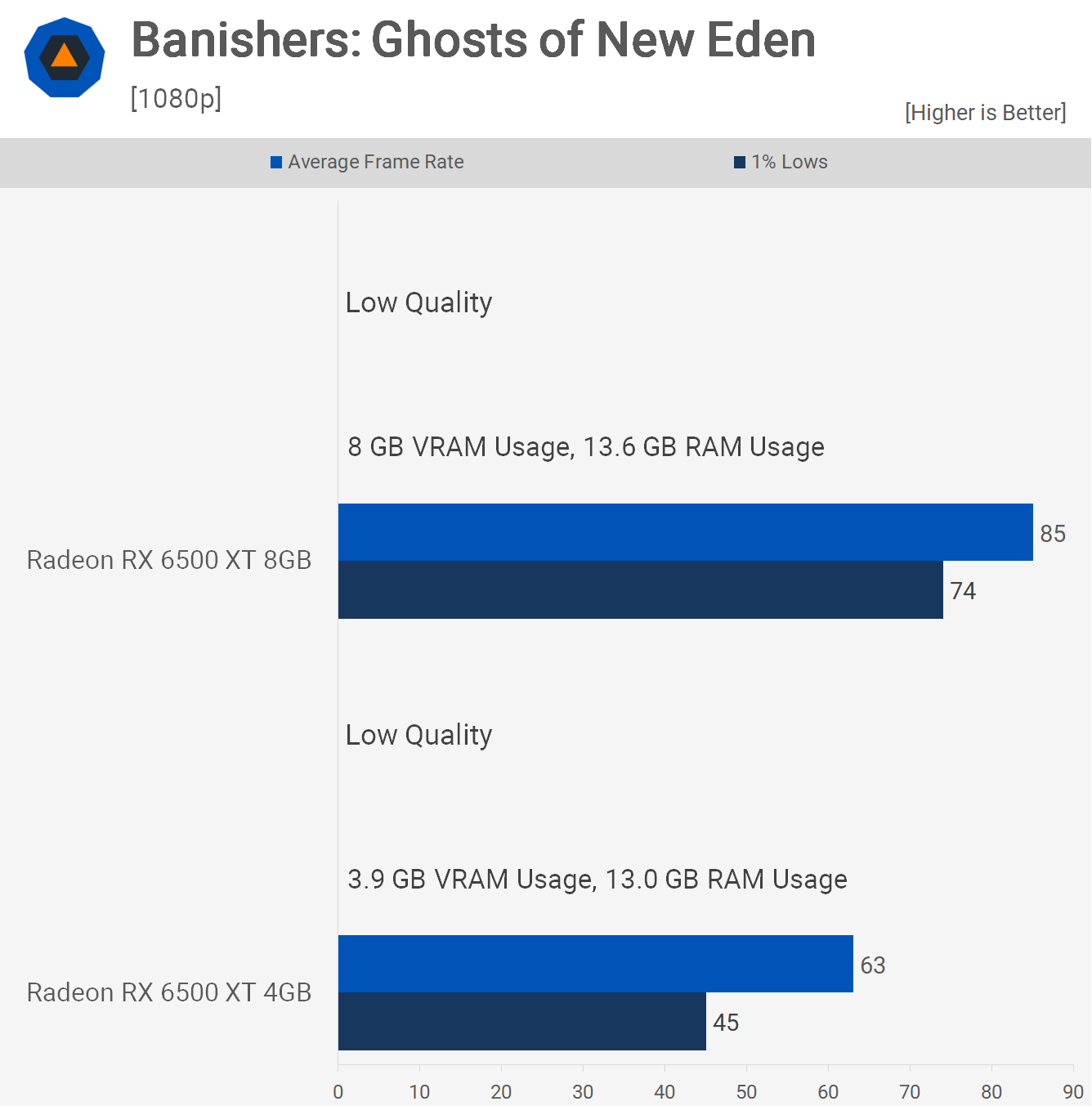

Banishers: Ghosts of New Eden requires more than 4GB of VRAM at 1080p, even using the lowest quality preset. The 8GB 6500 XT was a massive 35% faster when comparing the average frame rate and 64% faster for the 1% lows. This is due to the game using 4.7GB of VRAM, slightly over the 4GB buffer, but clearly enough to significantly impact performance.

Maintaining the low-quality settings and adjusting the texture quality to very high, we saw VRAM usage increase to 5.8GB on the 8GB model. This resulted in almost no performance loss, making it 67% faster than the 4GB version and 122% faster when comparing 1% lows.

This means you can maximize the texture quality on the 8GB card and still achieve up to 58% better performance than the 4GB model, which used low-quality textures. Thus, you’re getting significantly better performance with enhanced visual quality.

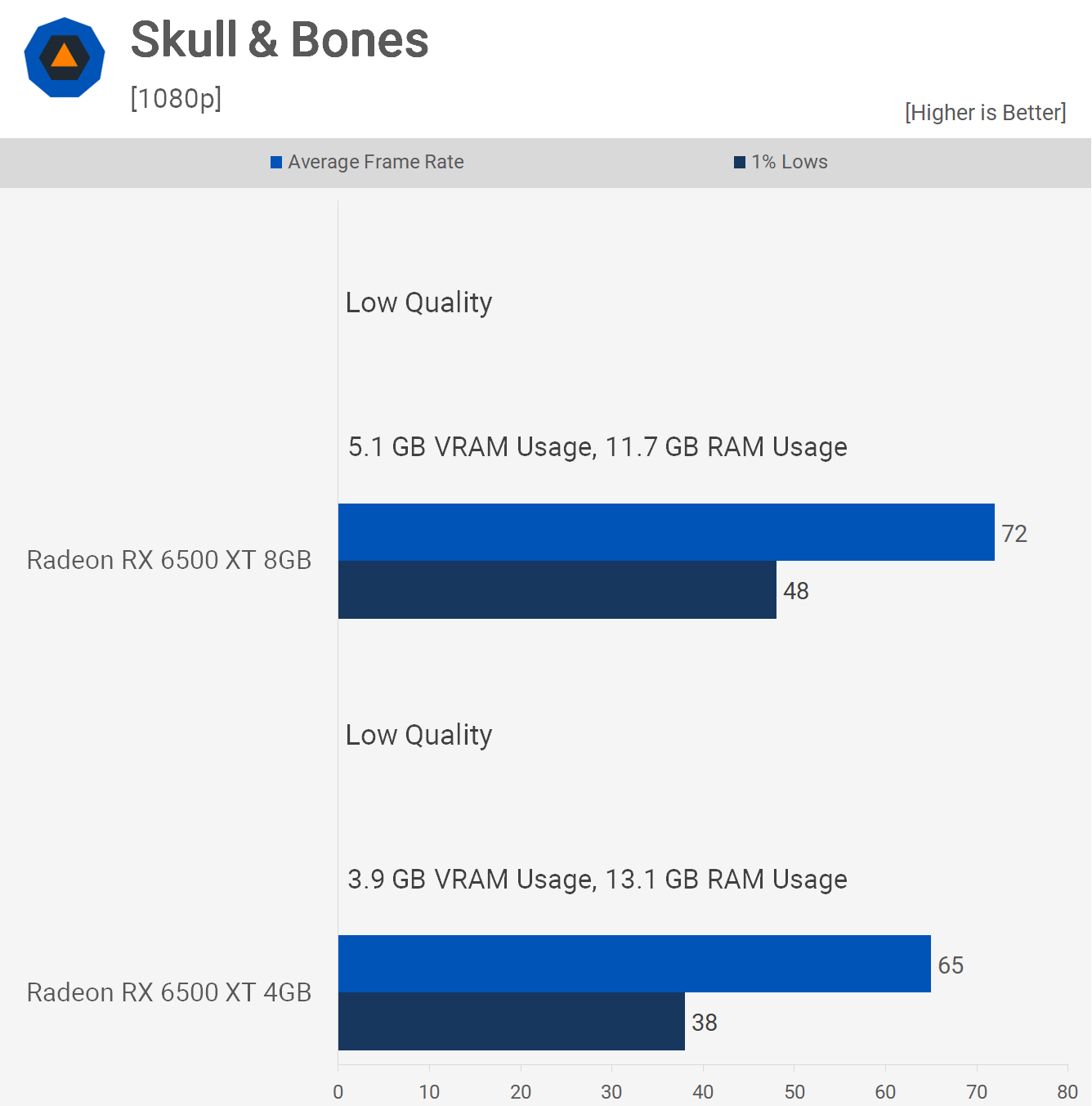

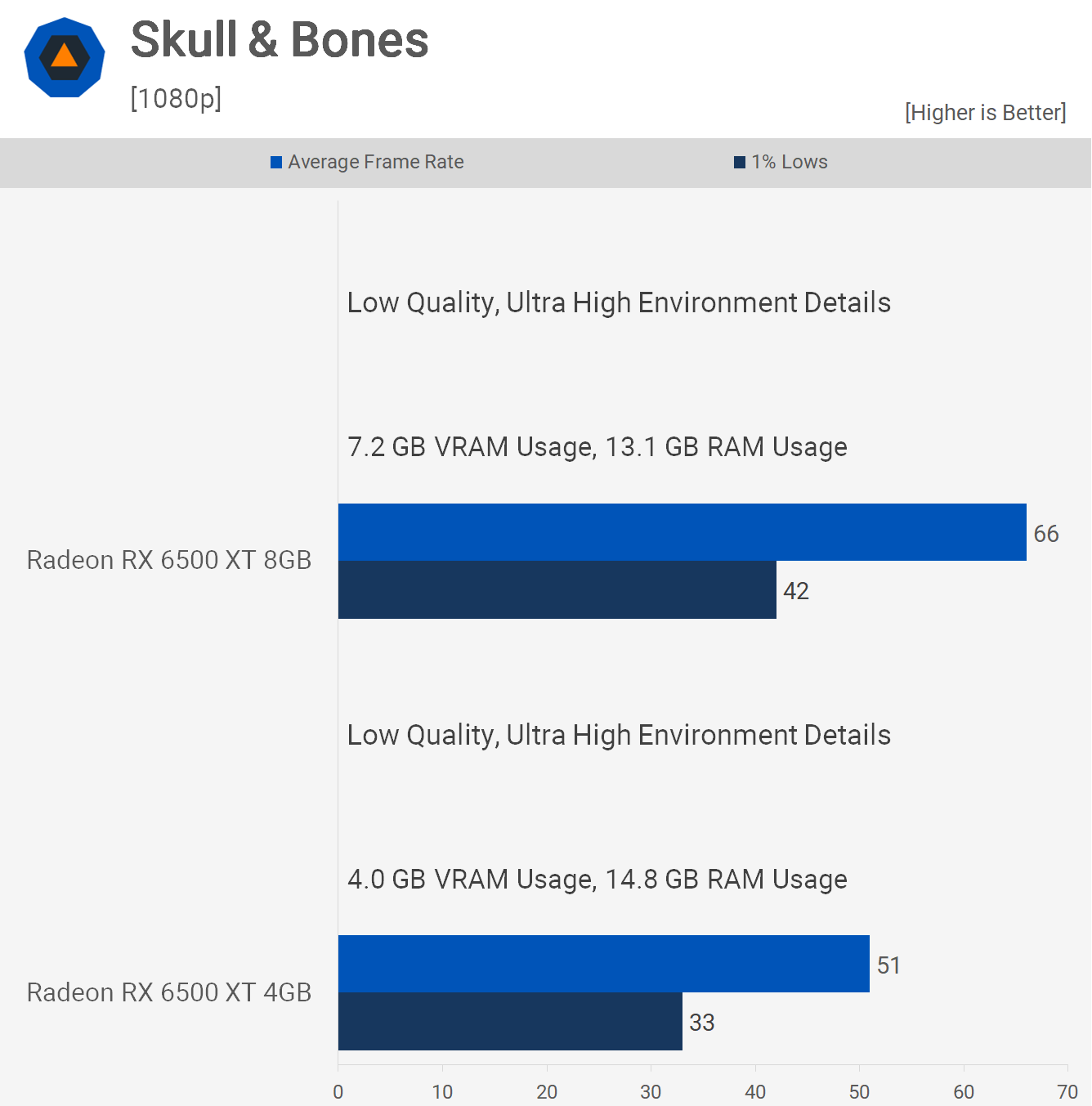

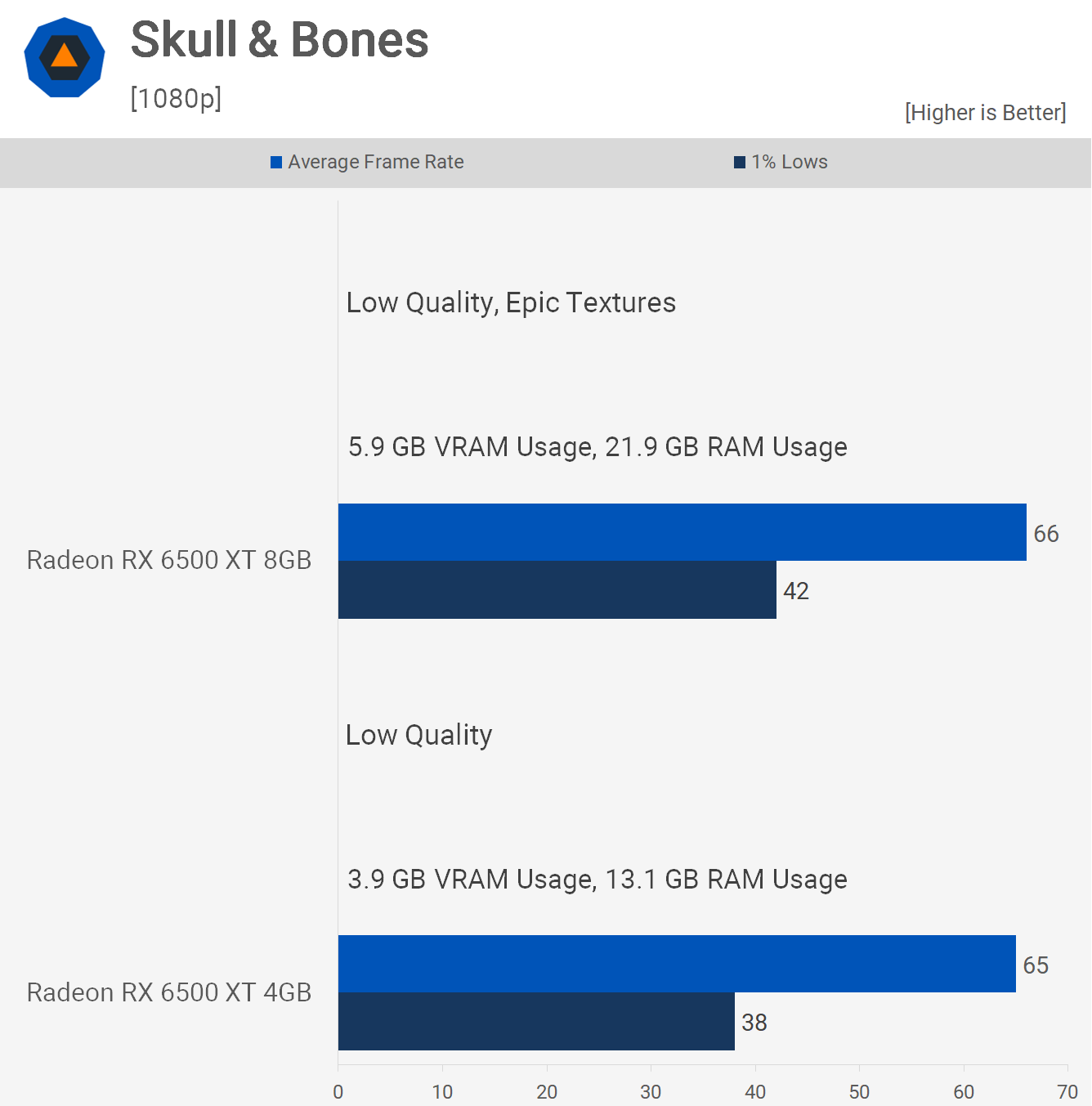

Skull & Bones

Skull & Bones is another recent purchase, which we tested using the built-in benchmark, at least initially. With the low-quality settings enabled, the 8GB model was 11% faster on average and 26% faster when comparing 1% lows, as the game used up to 5.1GB of VRAM.

Increasing the environment details from low to ultra-high, VRAM usage increased to 7.2GB on the 8GB model, giving it almost a 30% performance advantage when comparing the average frame rate.

This means that for a similar level of performance, the 8GB model can run with the environment details setting maxed out, while the 4GB model is limited to the low setting.

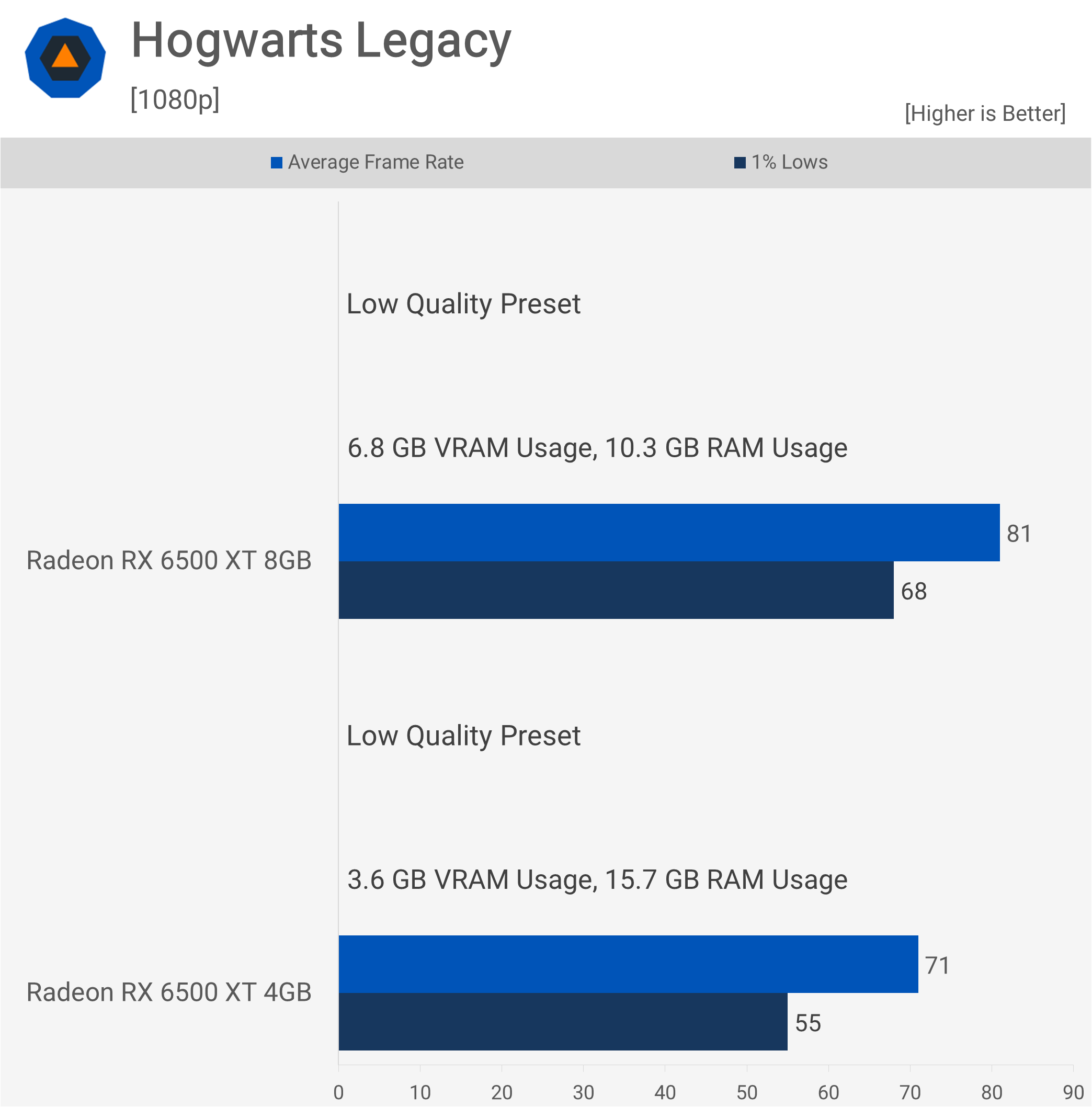

Hogwarts Legacy

Next, we have Hogwarts Legacy, not the most optimized modern triple-A title, but an extremely popular game nonetheless. Using the lowest possible quality settings, the 4GB 6500 XT managed just 71 fps on average, which seems decent until you discover that with 8GB of VRAM, it’s capable of 81 fps. That’s a 14% improvement, though it’s the 24% improvement in 1% lows that you’ll notice the most.

The game indeed requires more than 4GB of VRAM. Even using the lowest possible settings at 1080p, we’re not far from maxing out the 8GB buffer with 6.8GB used. We even observed a 52% increase in RAM usage for the 4GB configuration as data intended for the video memory overflowed into system memory.
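To illustrate the overflow idea, here’s a deliberately simple model using the VRAM usage figures quoted so far. It assumes that whatever doesn’t fit in VRAM ends up in system memory, which is a simplification: real drivers and game engines manage memory in more complex ways, so the actual increase in RAM usage won’t match these numbers exactly.

```python
# VRAM usage figures (GB) quoted in this article so far, all at 1080p.
VRAM_USED_GB = {
    "Cyberpunk 2077 (medium)": 6.8,
    "Forza Motorsport (high/ultra mix)": 6.4,
    "Ratchet & Clank: Rift Apart (lowest)": 7.3,
    "Spider-Man Remastered (very high)": 6.8,
    "Assassin's Creed Mirage (low)": 5.7,
    "Banishers: Ghosts of New Eden (low)": 4.7,
    "Skull & Bones (low)": 5.1,
    "Hogwarts Legacy (lowest)": 6.8,
}


def overflow_gb(required_gb, capacity_gb):
    """Simplified model: data that does not fit in VRAM spills to system RAM."""
    return max(0.0, required_gb - capacity_gb)


for game, used in VRAM_USED_GB.items():
    spill_4gb = overflow_gb(used, 4.0)
    spill_8gb = overflow_gb(used, 8.0)
    print(f"{game:<40} 4GB card: {spill_4gb:.1f} GB over, 8GB card: {spill_8gb:.1f} GB over")
```

Every title in the list exceeds a 4GB buffer, while none exceeds 8GB at these settings.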

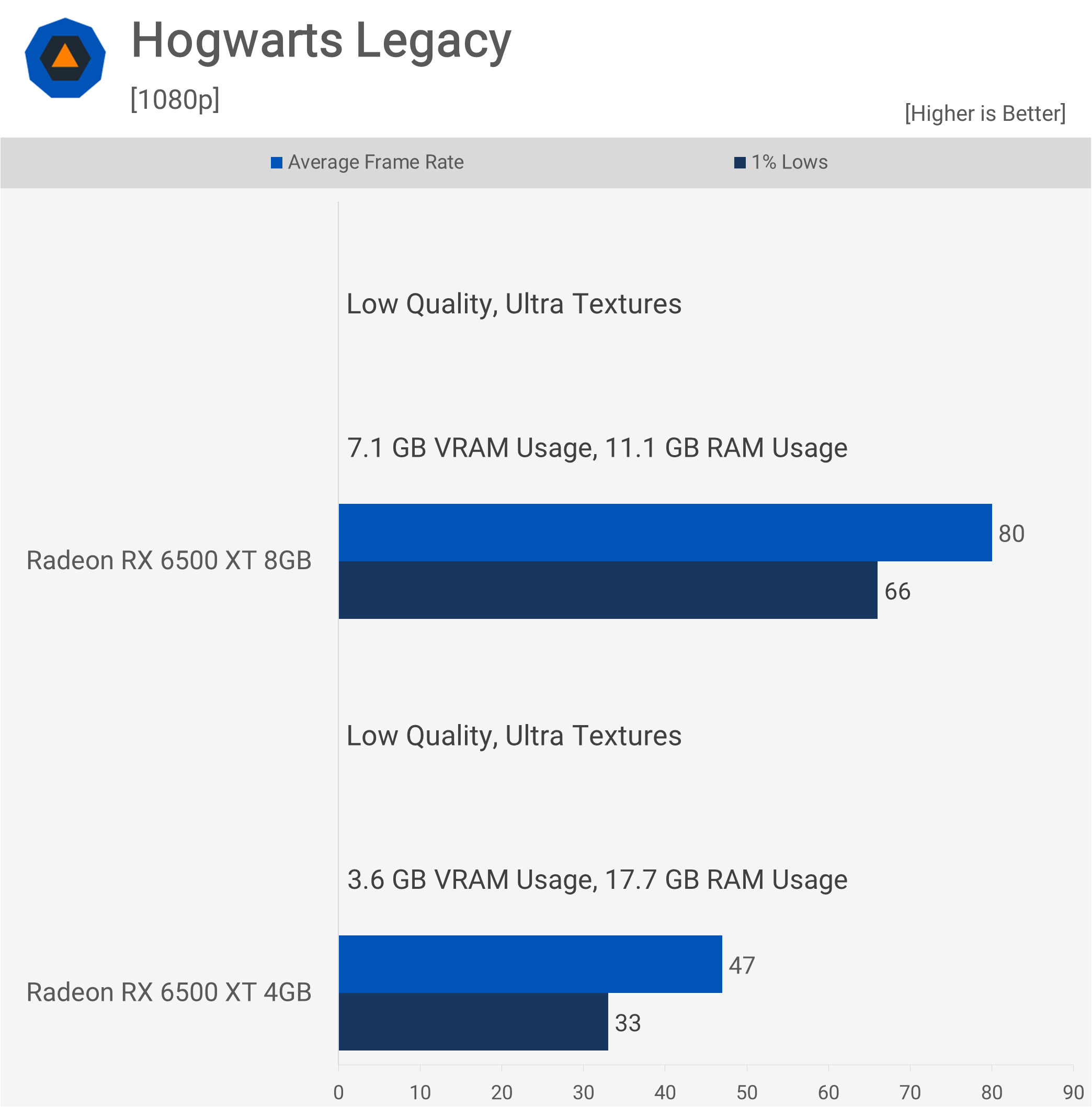

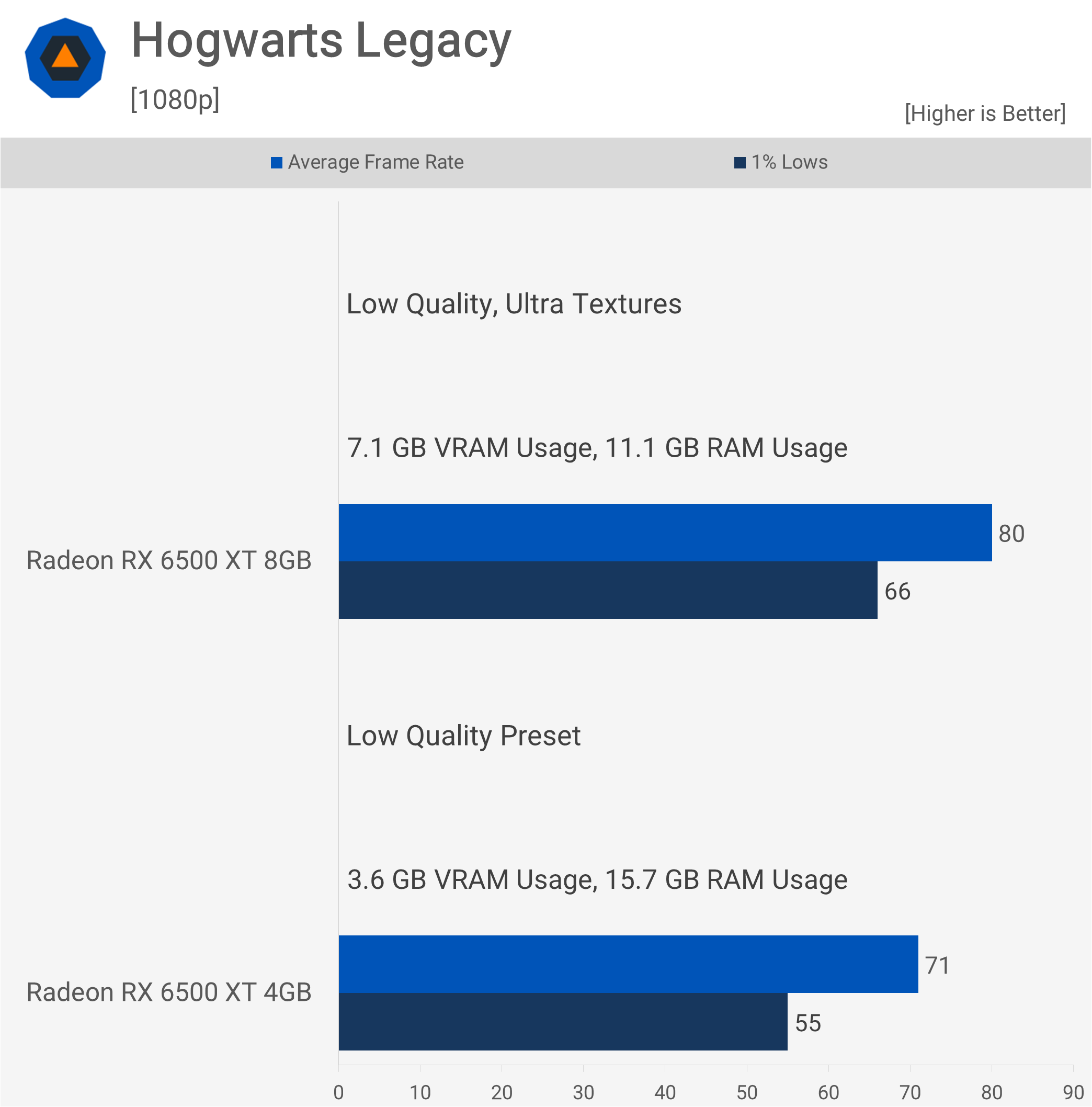

Of course, if we enable ultra-quality textures, the situation worsens for the 4GB model, with system memory usage increasing to 17.7GB, while frame rates drop by 33%. This made the 8GB model 70% faster on average as the game was now using 7.1GB of VRAM.

This means you can play Hogwarts Legacy on the 8GB 6500 XT using ultra-quality textures and, in fact, with 80 fps, you could increase the quality of a few other settings. But for the sake of this comparison, the 8GB model was 13% faster when using ultra textures vs. the 4GB model with low textures.

Visual Comparison

The visual difference between the low-quality preset using low textures vs. ultra textures is night and day, very noticeable when gaming. Even in these static scenes, you can see the difference. We chose to compare Hogwarts Legacy this way as it was extremely difficult to get exact matching scenes when moving.

You can see it here: the stack of pots on the right of the screen features much higher detail when using ultra textures. Looking at the A-frame building in the distance, you can see that the bricks making up the facade are rendered at much higher quality.

In this scene at the food cart, simply increasing texture quality results in significantly higher image quality. The shape of the vegetables looks more realistic, and the textures, ground texture, and even the crates on the cart look worlds better.

Another issue we found with the 4GB model was texture popping, and it wasn’t just slow-loading textures. Rather, textures would constantly de-spawn, even when standing still. This is most notable with the bricks on the right-hand side of the scene. It’s remarkable that the comparison on the left side of the screen, which looks worlds better, also resulted in significantly better performance for the 8GB version of the 6500 XT.

Even the hair quality of this bull looks much better. Something else that will stand out when playing is text quality. For example, it’s almost impossible to read this rather large sign when using low-quality textures.

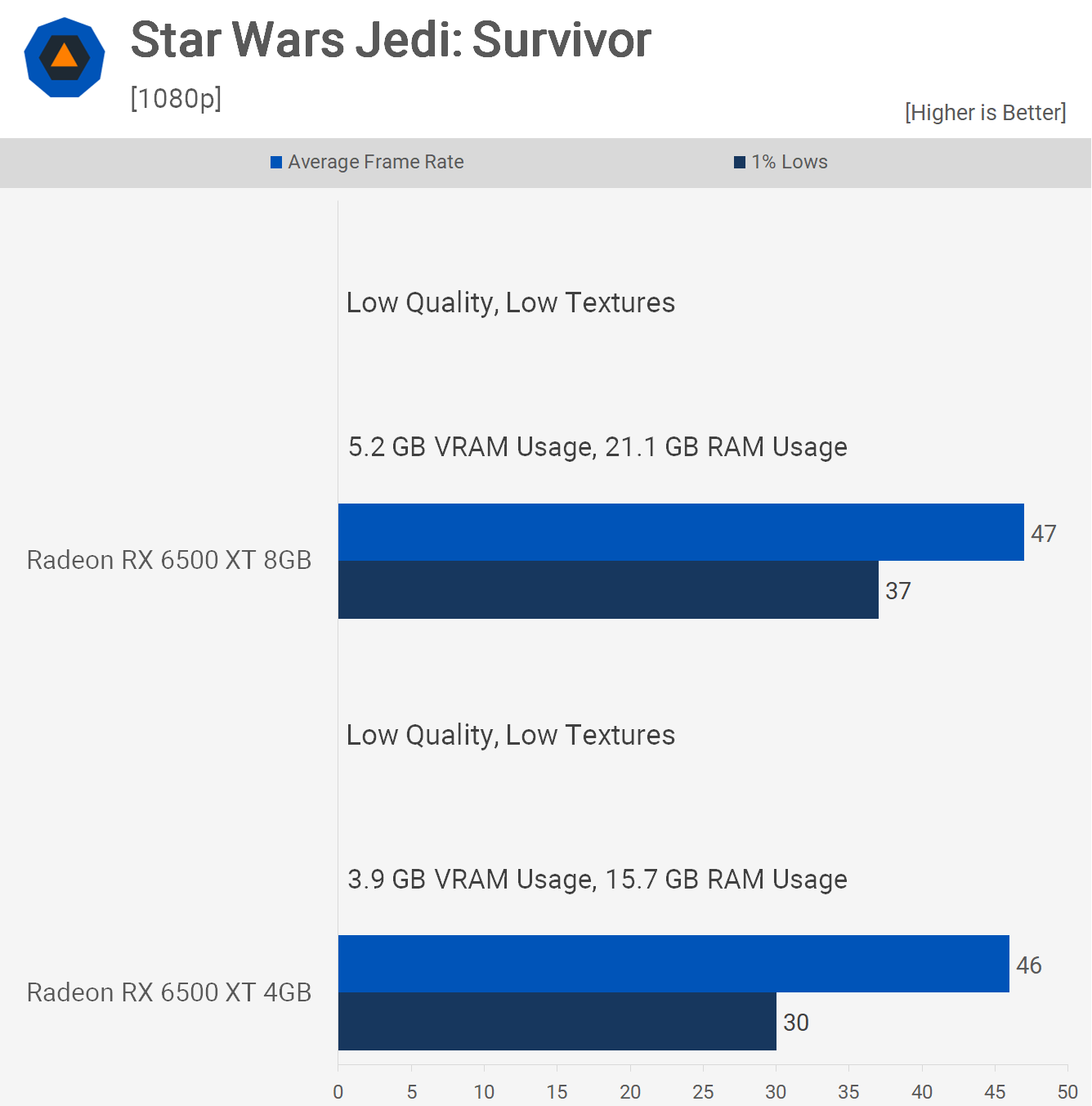

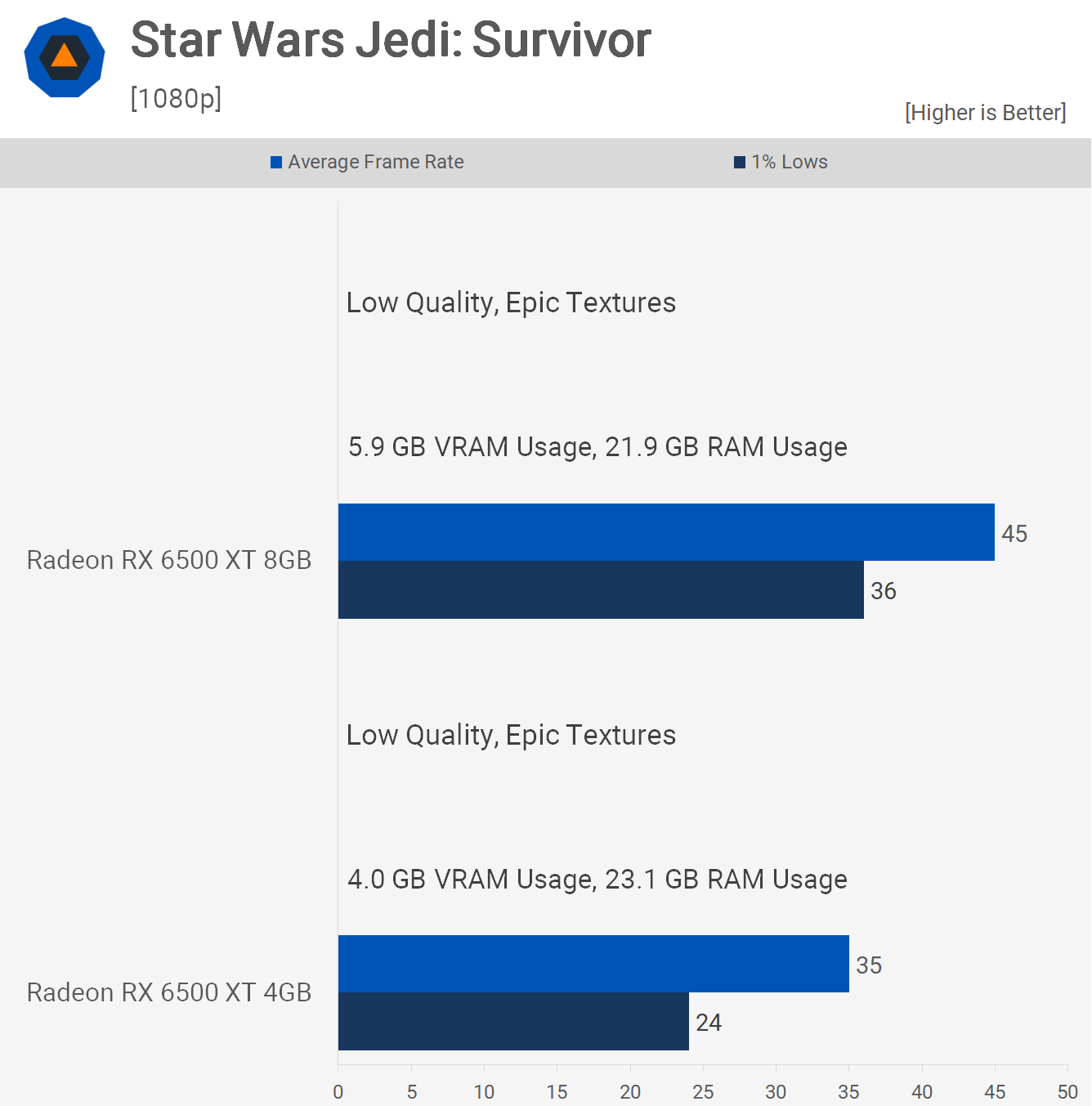

Star Wars Jedi: Survivor

Moving on to Star Wars Jedi: Survivor, here we’re using the very low-quality preset, which is still very heavy on VRAM usage. Although the average fps performance is much the same, the 8GB model provided much better 1% lows, improving performance by 23%.

Then, if we enable epic quality textures while keeping everything else on low, the 8GB model only drops a few frames, while the 4GB model saw a 24% decline, rendering just 35 fps.

This meant it was possible to achieve better performance with the 8GB model using epic quality textures compared to the 4GB model with everything set to low, including textures.

Visual Comparison

Visually, this didn’t always make a big difference, though admittedly, I didn’t examine much of the game. But even here, you can see that distant texture quality is much higher when using the epic textures settings, and these visual improvements don’t come at a performance cost, at least relative to the 4GB model.

Things that stood out included the quality of these crates; the epic texture version looked much better, and any distant textures appeared much clearer. It’s fair to say that the epic texture version looked much better overall.

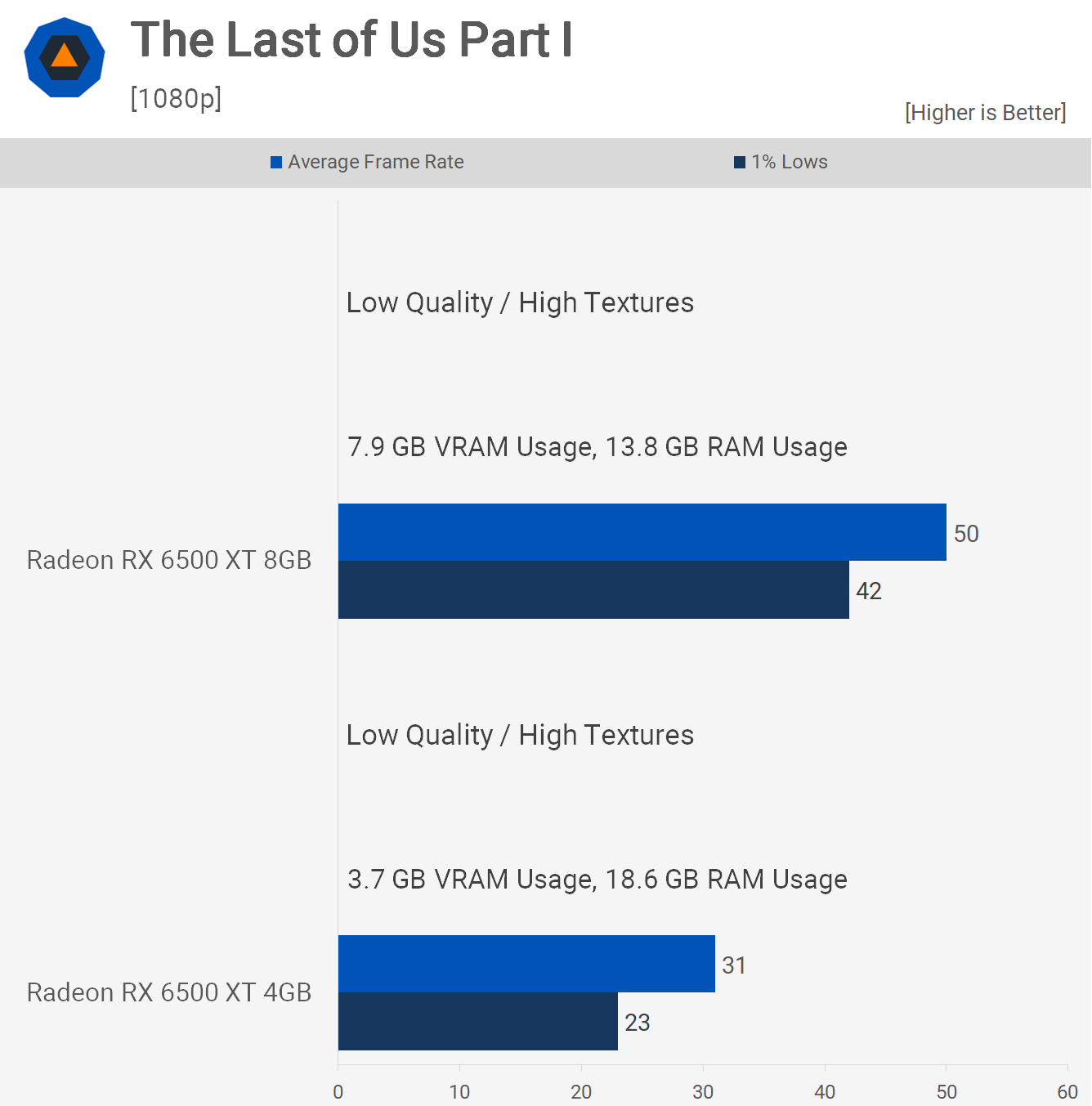

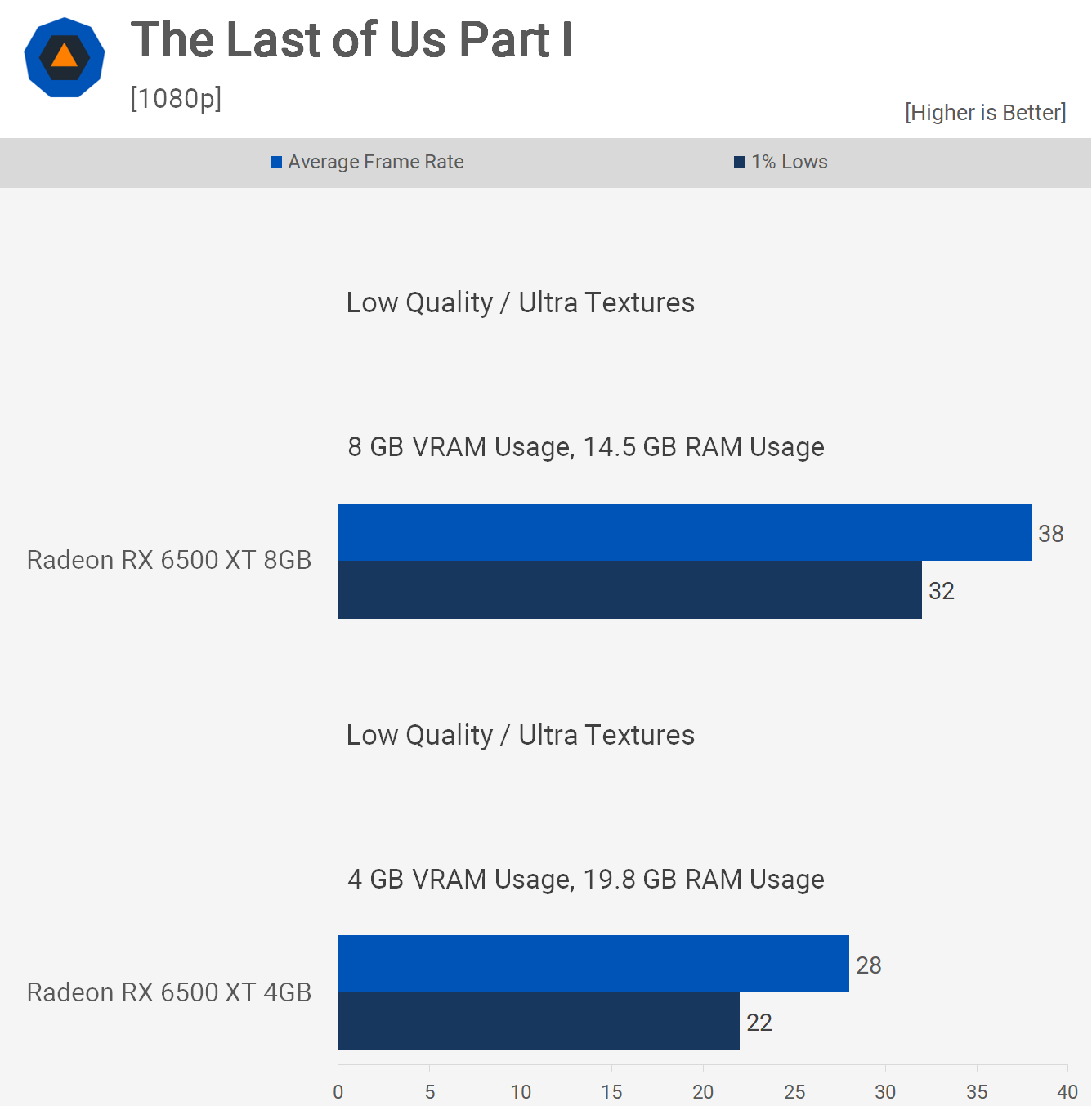

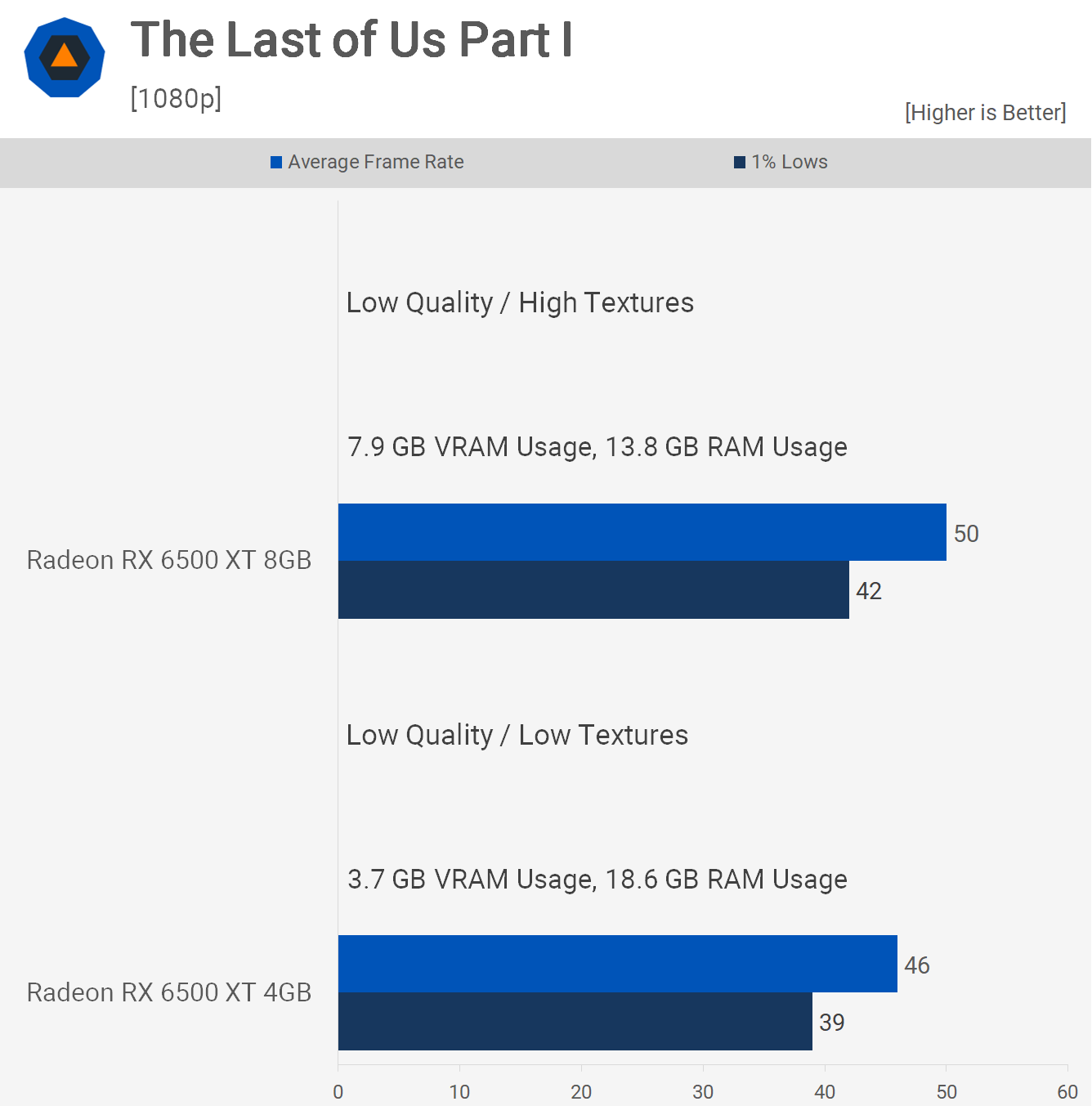

The Last of Us Part 1

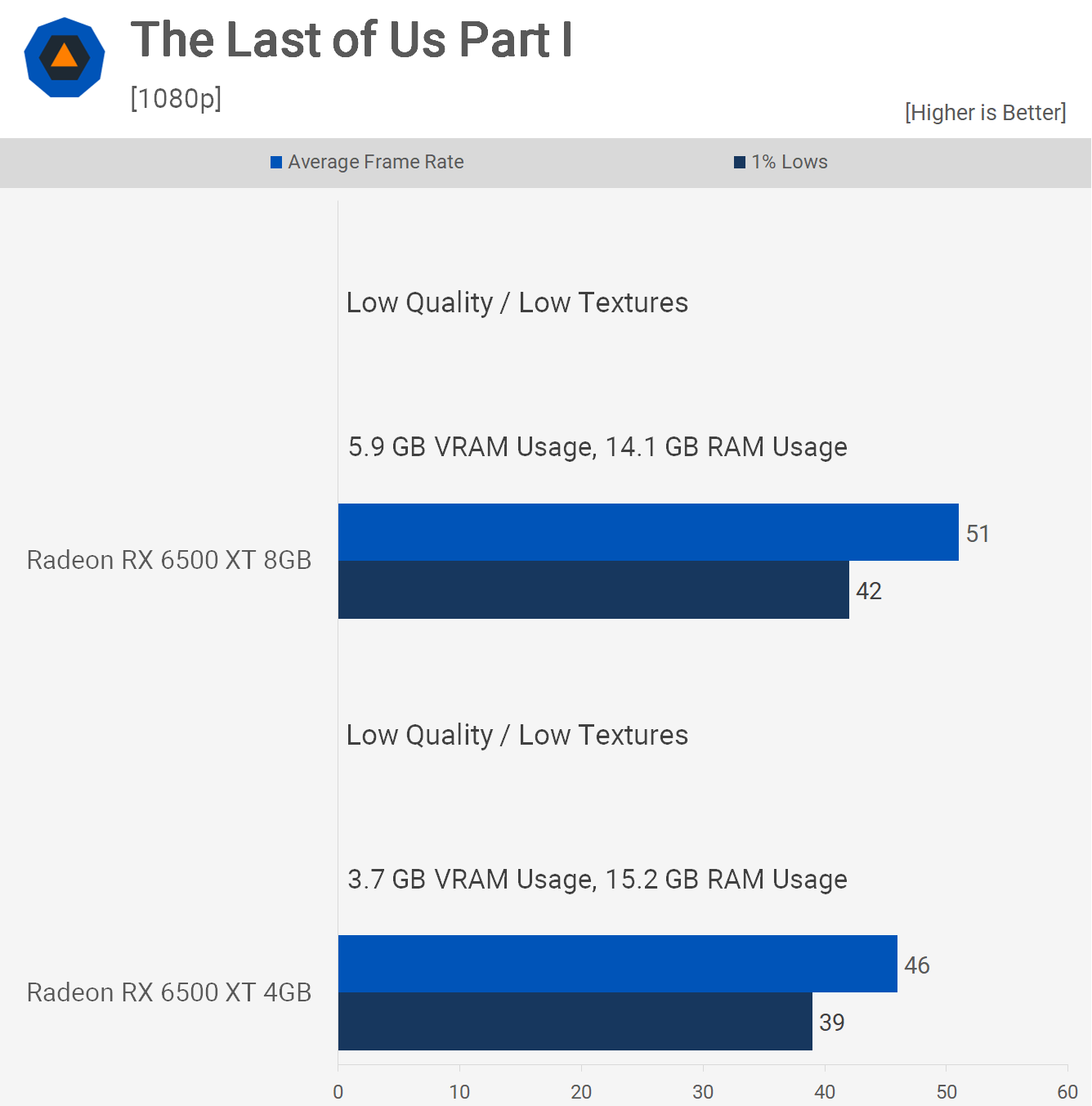

Next up, we have The Last of Us Part 1, a title known for its heavy VRAM usage. Even after multiple updates, it can max out 8GB buffers. The most significant optimization improvements have been in texture quality for the low and medium settings.

Even with the low-quality settings, the 8GB card is still 11% faster, which is a notable increase. We attribute this uplift to the game using more than 4GB of VRAM, as the 4GB model experienced a 1GB increase in RAM usage.

Increasing the texture setting to high, we were able to max out the 8GB 6500 XT at 1080p, with usage hitting 7.9GB. That said, fps performance remained unchanged, whereas the 4GB model saw a 33% decrease, dropping to 31 fps on average.

Then, enabling ultra-quality textures, the 8GB model does run out of VRAM, resulting in a 24% performance decrease. However, 38 fps on average is still significantly better than the 28 fps observed from the 4GB model.

Comparing the two cards with high textures, the 8GB version is a substantial 61% faster, and because its frame rate didn’t change when moving from low to high, it also remains ahead of the 4GB model running low textures. Performance aside, the visual upgrade is quite significant.

Visual Comparison

High-quality textures offer several visual enhancements. From the opening cutscene, one noticeable improvement is on Sarah’s t-shirt. Text quality is a significant issue with low-quality textures. With high-quality textures, you can actually read the names, whereas it’s impossible with low. The front of her t-shirt and the box she hands Joel feature noticeably higher-quality textures as well.

The eagle-eyed among you might have noticed that even the hair on Joel’s arm looks much better using the higher textures. Playing the game, you will notice that details on the characters’ faces, for example Sarah’s freckles, are far more noticeable using the higher textures.

In this scene, the bedside table, as well as Sarah’s face and arms, look significantly better using high textures. In-game, the difference is even more noticeable; character models look far more detailed, and the ground textures really stand out, offering a significantly better experience with the 8GB model.

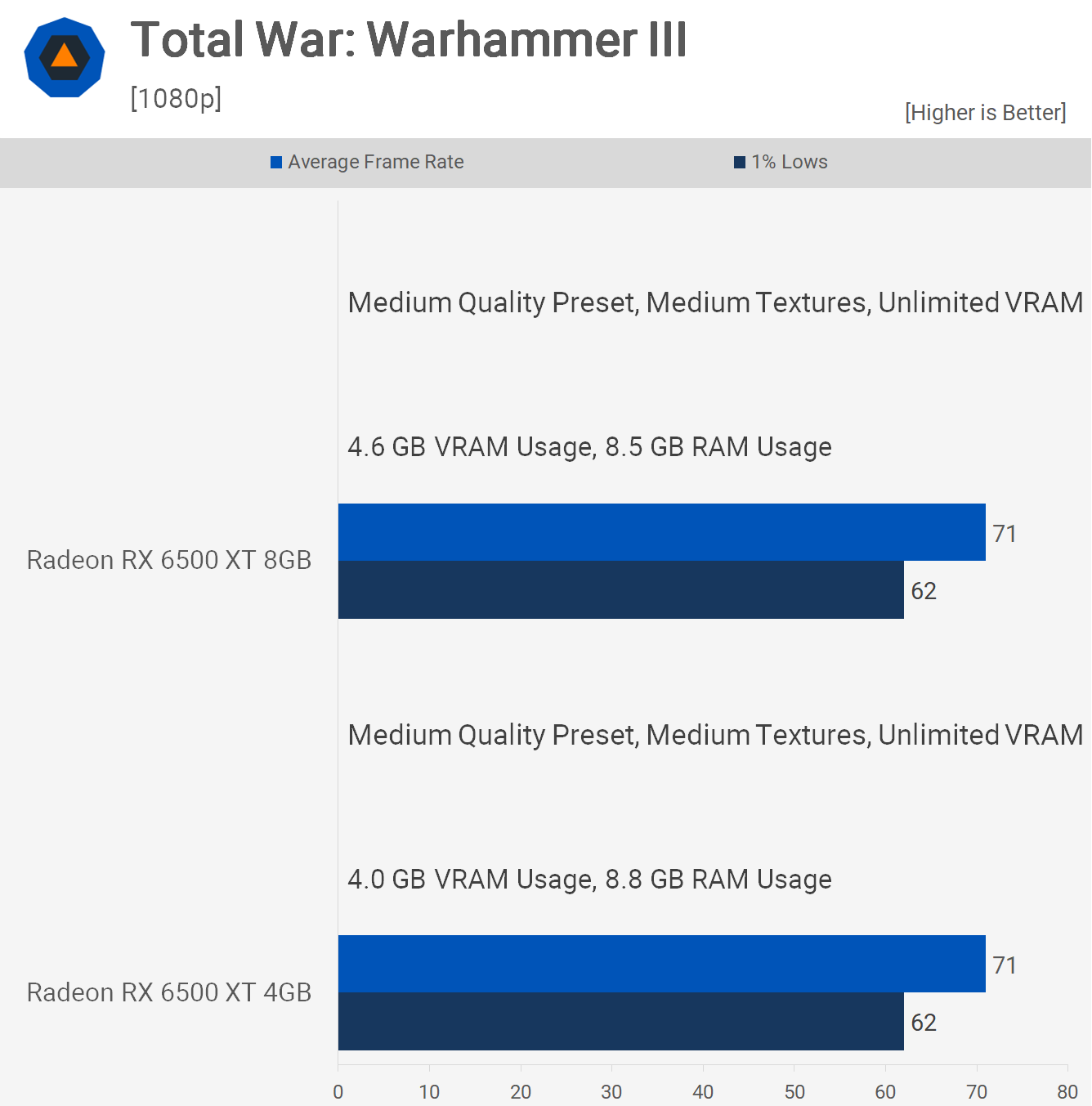

Total War: Warhammer III

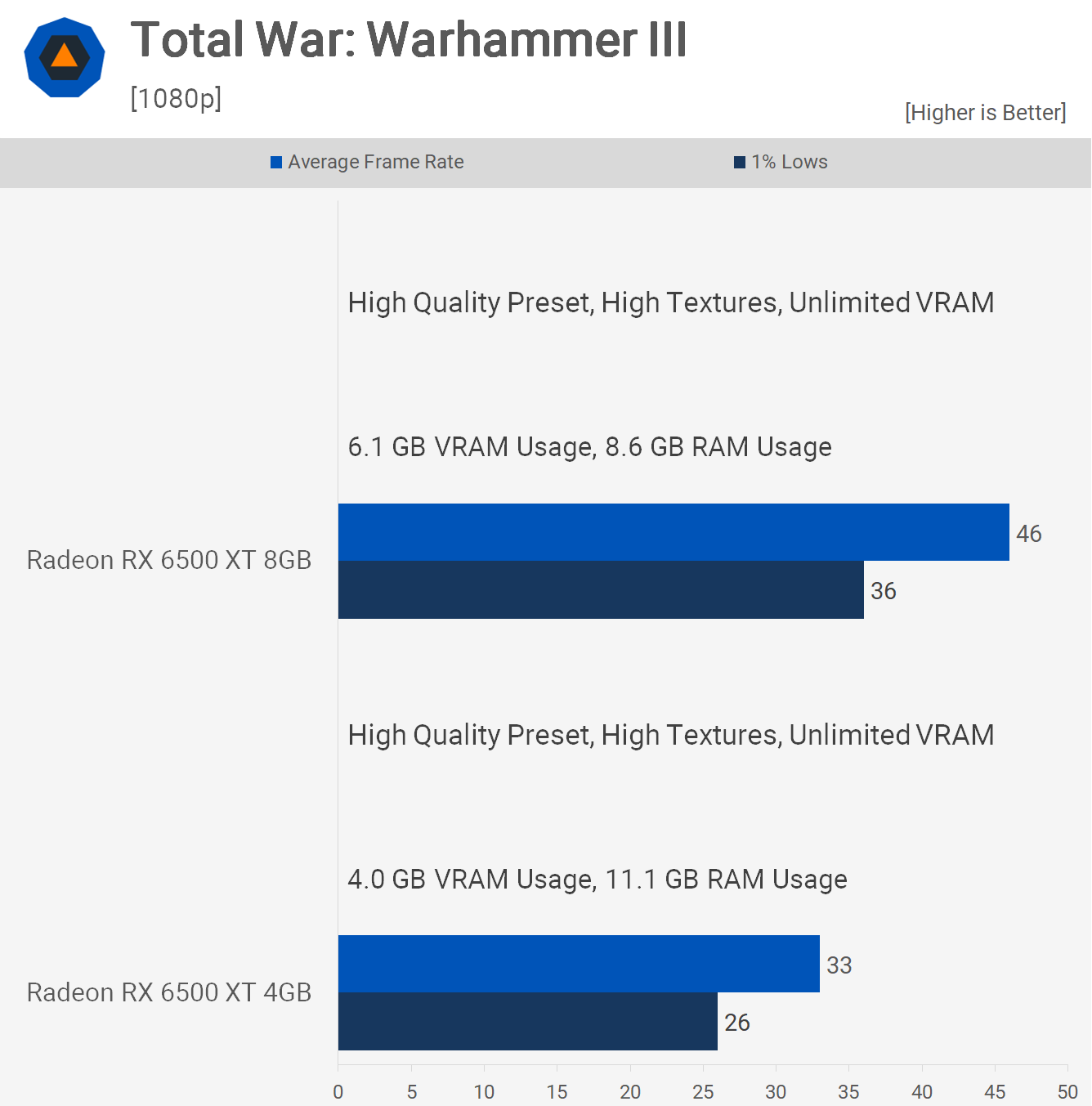

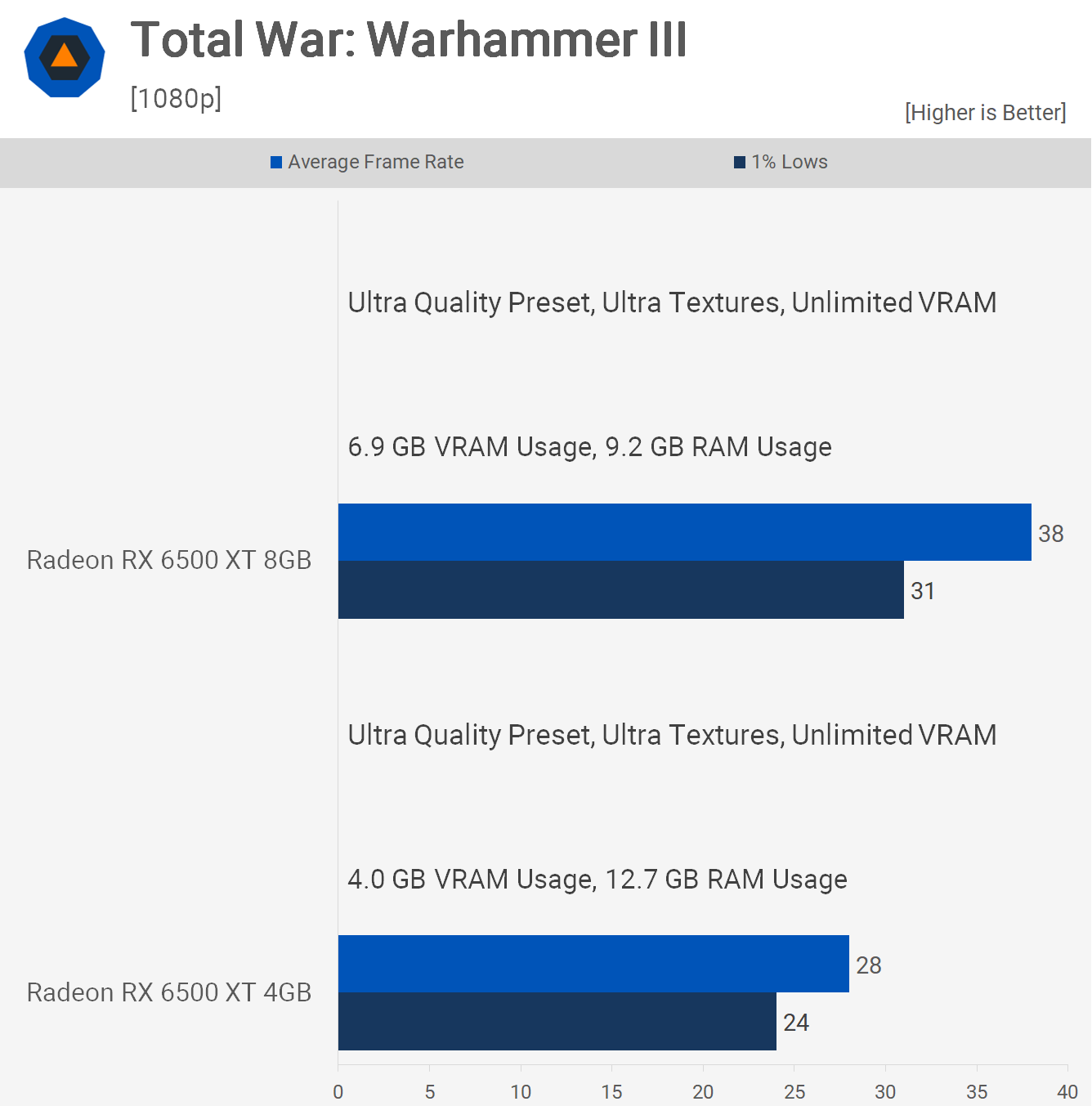

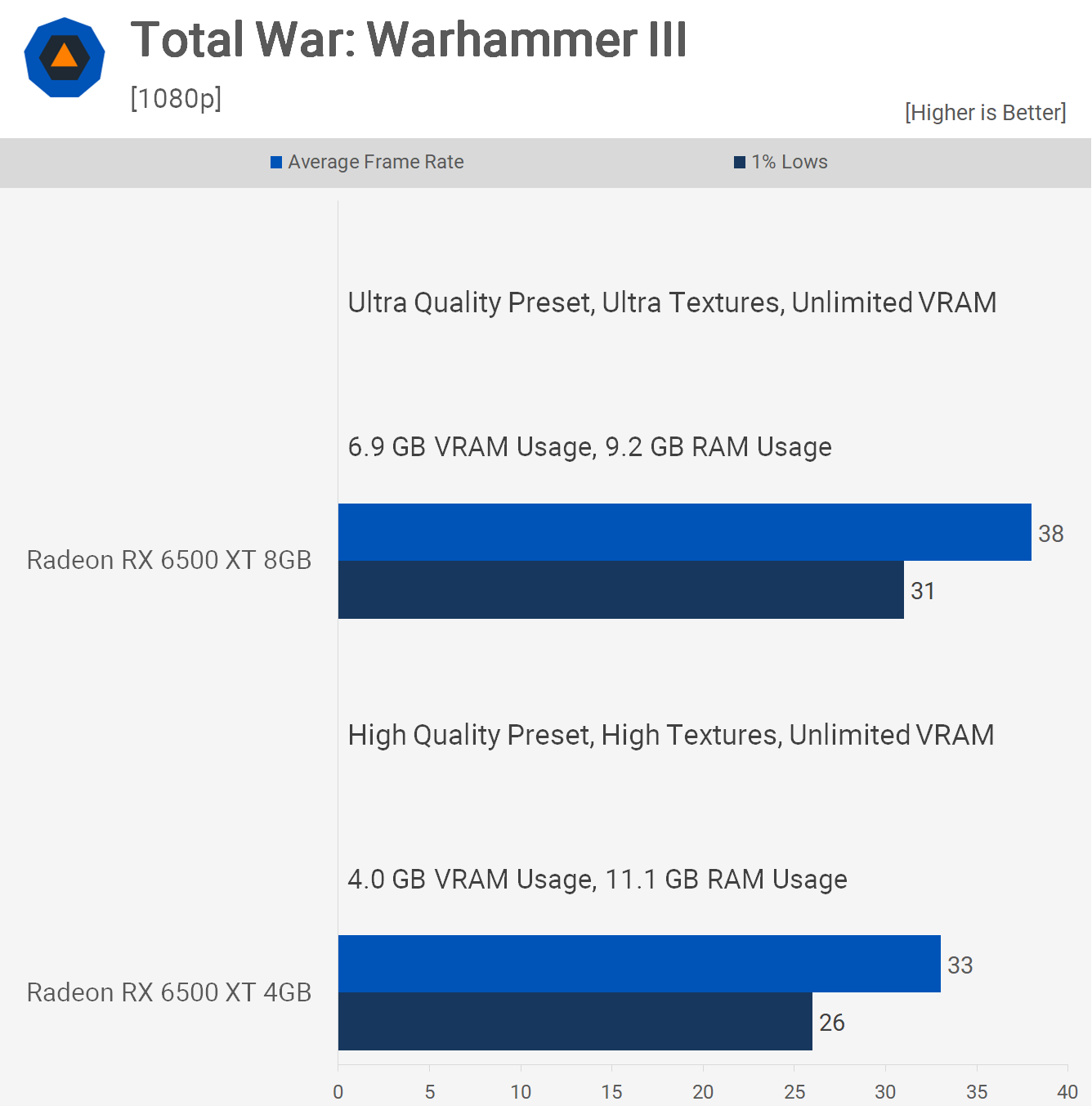

The last game we’ll look at is Total War: Warhammer III. It’s important to note that every preset applies Ultra Texture quality but will automatically reduce texture quality if there’s insufficient VRAM unless the ‘unlimited VRAM’ option is selected. Therefore, we’ve ticked the unlimited VRAM box for all our testing.

Starting with the medium quality preset and textures, both models delivered the same level of performance, 71 fps on average, which is satisfactory.

However, if you want to enable the high preset with high textures, the 4GB model will struggle, dropping to 33 fps on average. This makes the 8GB card almost 40% faster as VRAM usage hits 6.1GB.

Then, with the ultra preset and textures, the 8GB model drops to 38 fps on average, which is still 36% faster than the 4GB model, with VRAM usage reaching 6.9GB.

This means the 8GB model using ultra settings is still faster than the 4GB model using high settings, by 15% in fact.

Visual Comparison

Given we’re only going from high to ultra, the visual difference isn’t massive, but noticeable differences include improved shadow detail, higher quality foliage on trees, and more visual effects.

The game certainly looks better using the ultra settings, and 38 fps on average is probably sufficient for this title. However, if you desire over 60 fps, you’ll have to use the medium settings with the 6500 XT, which only requires a 4GB buffer.

What’s Not Cutting It Anymore?

It’s no surprise that 4GB of VRAM isn’t sufficient in 2024; the news won’t shock anyone. However, just how inadequately it performs may surprise you, especially considering that in almost all instances, we were using the lowest possible visual quality settings.

For us, this comparison was enlightening as it provided a clear view of what happens when you run out of VRAM, not just in a few edge cases, but almost universally. This should give us some clear indications of where 8GB graphics cards are heading. It wasn’t long ago that 8GB of VRAM was considered pointless and even overkill.

Looking back, the first Radeon GPUs to offer an 8GB VRAM buffer were the R9 390 and 390X in mid-2015, which at the time were essentially refreshed 290 and 290X models with a price hike. Then there was the RX 590 in late 2018, technically the first AMD GPU to use 8GB of VRAM exclusively, though it was really just an overclocked RX 580 on a slightly newer process node.

Vega 56 and 64 were truly AMD’s first new GPUs to adopt an 8GB VRAM buffer without the option of a 4GB model, which happened in late 2017. Mainstream affordable 8GB AMD graphics cards didn’t arrive until late 2021 with the Radeon RX 6600 series.

In the Nvidia camp, the first GeForce GPU to exceed 6GB of VRAM was the GTX Titan X in early 2015. The card packed a 12GB buffer but cost $1,000, which back then was insane, so we’re not sure we can count that one. Mainstream 8GB models arrived in mid-2016 with the Pascal-based GTX 1070 and GTX 1080, priced at $380 and $600, respectively. Then we saw the RTX 2070 in late 2018 for $500, Nvidia’s most affordable 8GB model until the RTX 2060 Super arrived the following year for $400.

We didn’t see a cheaper 8GB GeForce GPU until the crappy RTX 3050 arrived in early 2022 for $250. Though it used to cost a lot more than that, it was the first affordable 8GB model from Nvidia, as the RTX 3060 Ti still cost $400.

So, in short, we first encountered 8GB-enabled GPUs back in 2015, and at the time, they were deemed unnecessary, a sentiment I admittedly shared, which in hindsight was short-sighted, though I think I loved just how affordable the R9 290 had become back then.

Even with the release of the Radeon RX 480 in mid-2016, the 8GB buffer still seemed largely unnecessary, despite the 8GB option costing just $40 more. We recall being slightly annoyed that AMD only sampled 8GB versions of the RX 480 to reviewers, as the 4GB model offered much better value in terms of cost per frame.
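Cost per frame is simply the price divided by the average frame rate, so when both versions perform the same, the cheaper card always wins. The sketch below uses hypothetical prices and frame rates purely for illustration. Only the $40 gap comes from this article, and the 35% uplift in the second scenario is borrowed from the Forza Motorsport result above as an example of a VRAM-limited game.

```python
def cost_per_frame(price_usd, average_fps):
    """Dollars paid per frame per second of average performance."""
    return price_usd / average_fps


# Hypothetical prices: only the $40 gap is taken from the article.
PRICE_4GB = 200.0
PRICE_8GB = PRICE_4GB + 40.0

# Scenario 1: the game fits in 4GB, so both cards hit the same (hypothetical) 60 fps.
same_fps_4gb = cost_per_frame(PRICE_4GB, 60.0)
same_fps_8gb = cost_per_frame(PRICE_8GB, 60.0)

# Scenario 2: a VRAM-limited game where the 8GB card is 35% faster.
limited_4gb = cost_per_frame(PRICE_4GB, 60.0)
limited_8gb = cost_per_frame(PRICE_8GB, 60.0 * 1.35)

print(f"Fits in 4GB:  4GB ${same_fps_4gb:.2f}/frame vs 8GB ${same_fps_8gb:.2f}/frame")
print(f"VRAM-limited: 4GB ${limited_4gb:.2f}/frame vs 8GB ${limited_8gb:.2f}/frame")
```

In the first scenario the 4GB card is the better value, which is how things looked in 2016. Once games outgrow the smaller buffer, the picture flips.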

By the time the RX 580 rolled around, not much had changed, though for just $30 more, we were recommending that you opt for the 8GB model. Then, in late 2019, after comparing the 4GB and 8GB versions of the Radeon 5500 XT, we concluded that if you’re a single-player gamer who prioritizes visual quality, you’ll want to avoid 4GB graphics cards like the plague.

Based on that timeline, by 2017, when the RX 580 and Nvidia’s Pascal GPUs were on the scene, we were recommending 8GB options when available. By 2019, we strongly advised against GPUs with just 4GB, budget permitting. That’s a relatively quick turnaround. We went from considering 8GB unnecessary in 2015 to seeing signs by 2017 that investing in an 8GB model might be wise. By 2019 it was a strong recommendation, and by 2022 4GB had become almost unusable for modern gaming.

Therefore, it makes sense that today, you’d want to avoid 8GB GPUs, especially when spending $300 or more. This was the point we were trying to make last year in our 8GB vs 16GB VRAM review. We’ve seen numerous examples where 8GB GPUs just aren’t sufficient, even at lower resolutions such as 1080p and 1440p. So, if we’re seeing several examples now, history suggests it won’t be too many more years before 8GB GPUs become almost unusable outside of the very lowest quality settings.

This article also highlights the importance of VRAM. With 4GB, the Radeon 6500 XT is a steaming pile, but with 8GB it actually becomes usable. Sadly though, the 8GB models aren’t priced at $140, and they aren’t easy to find. They also suffer from the same shortcomings as the 4GB models, such as PCIe 4.0 x4 bandwidth, just two display outputs, and no hardware encoding. But I tell you what, with 8GB of VRAM, it’s worlds better.
